On November 9 last year, Nvidia announced the first consumer GPU with a unified architecture and DirectX 10 support. We covered it in detail in our Directly Unified: Nvidia GeForce 8800 Architecture Review. Initially, the new chip formed the basis for two graphics cards, the GeForce 8800 GTX and the GeForce 8800 GTS. As you know, the older model performed well in games and is a natural choice for an enthusiast who is not put off by the price. The younger model took its rightful place in the price category between $350 and $500.

$449 is not a very high price for a new-generation product that fully supports DirectX 10 and delivers a serious level of performance in modern games. Nevertheless, Nvidia decided not to stop there, and on February 12, 2007 it introduced the more affordable GeForce 8800 GTS 320MB at an official price of $299, considerably strengthening its position in that segment. These two graphics cards are the subject of today's review. Along the way, we will find out how critical video memory capacity is for the GeForce 8 family.

GeForce 8800 GTS: technical specifications

To assess the strengths and capabilities of both GeForce 8800 GTS models, we should first remind our readers of the specifications of the GeForce 8800 family. All three GeForce 8800 models use the same G80 graphics core, consisting of 681 million transistors, plus an additional NVIO chip containing the TMDS transmitters, RAMDACs and so on. Using such a complex chip to build several graphics cards for different price categories is not ideal in terms of the cost of the final product, but it cannot be called a mistake either: Nvidia gets to sell G80 chips rejected for the GeForce 8800 GTX (those that failed frequency binning and/or have some defective blocks), and for cards selling for over $250 the production cost is hardly critical. Both Nvidia and its sworn rival ATI use this approach actively; it is enough to recall the history of the G71 GPU, which can be found both in the inexpensive mass-market GeForce 7900 GS and in the powerful dual-chip monster GeForce 7950 GX2.

The GeForce 8800 GTS was created in the same way. As you can see from the table, its technical characteristics differ significantly from those of its older brother: not only are its clock frequencies lower and some of its stream processors disabled, but its video memory capacity is also reduced, its memory bus is narrower, and some of its TMU and ROP units are inactive.

In total, the GeForce 8800 GTS has 6 groups of stream processors with 16 ALUs each, for a total of 96 ALUs. The card's main rival, the AMD Radeon X1950 XTX, has 48 pixel processors, each consisting of 2 vector and 2 scalar ALUs, for 192 ALUs in total.

It would seem that in raw computing power the GeForce 8800 GTS should be seriously inferior to the Radeon X1950 XTX, but a number of nuances make this assumption not entirely valid. The first is that the stream processors of the GeForce 8800 GTS, like the ALUs in Intel NetBurst, run at a much higher frequency than the rest of the core: 1200 MHz versus 500 MHz, which by itself means a very substantial performance boost. Another nuance follows from the architecture of the R580 GPU. In theory, each of its 48 pixel shader execution units can execute 4 instructions per clock, not counting branch instructions. However, only 2 of them can be of the ADD/MUL/MADD type, while the other two are always ADD instructions with a modifier. Accordingly, the R580 pixel processors will not run at maximum efficiency in every case. The G80 stream processors, on the other hand, have a fully scalar architecture, and each of them can execute two scalar operations per clock cycle, for example MAD + MUL. Although we still lack exact data on the architecture of Nvidia's stream processors, in this article we will see how much more advanced the new unified GeForce 8800 architecture is than the Radeon X1900 architecture, and how this affects speed in games.
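A quick back-of-the-envelope calculation shows why the shader clock matters so much. The sketch below multiplies each chip's ALU count by the clock those ALUs run at. The assumption that every ALU issues one operation per clock is ours and deliberately crude: it ignores the G80's MAD + MUL dual issue and the R580's ADD-only restriction on half of its ALUs, so the result is only a rough proxy, not a FLOPS figure.

```python
# Crude shader-throughput proxy: ALU count x ALU clock (MHz).
# Assumption (ours): one operation per ALU per clock; this is NOT a FLOPS figure.

def alu_clock_product(alus: int, alu_clock_mhz: int) -> int:
    """Return ALU count multiplied by ALU clock, in 'ALU-MHz'."""
    return alus * alu_clock_mhz

gts = alu_clock_product(6 * 16, 1200)    # 6 groups x 16 ALUs at the 1200 MHz shader clock
x1950 = alu_clock_product(48 * 4, 650)   # 48 pixel processors x (2 vector + 2 scalar) at 650 MHz

# Hypothetical case: the same 96 ALUs running at the 500 MHz core clock instead.
gts_at_core_clock = alu_clock_product(96, 500)

print(f"GeForce 8800 GTS: {gts} ALU-MHz")
print(f"Radeon X1950 XTX: {x1950} ALU-MHz")
print(f"GTS/XTX ratio: {gts / x1950:.2f}")
print(f"GTS/XTX ratio without the fast shader clock: {gts_at_core_clock / x1950:.2f}")
```

With half as many ALUs, the GTS would trail badly at core clock, but the separate shader clock closes most of the gap on paper.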

As for texturing and rasterization performance, judging by the specifications, the GeForce 8800 GTS has more texture units (24) and raster operation units (20) than the Radeon X1950 XTX (16 TMUs, 16 ROPs), but they run at a noticeably lower clock frequency (500 MHz) than those of the ATI product (650 MHz). Thus, neither side has a decisive advantage, which means that game performance will be determined mainly by how successful each micro-architecture is, not by the number of execution units.
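The "no decisive advantage" conclusion follows from simple unit-count times clock arithmetic. The sketch below assumes one texel per TMU and one pixel per ROP per clock, which is the usual theoretical peak rather than a measured figure.

```python
# Theoretical fill rates from unit counts and core clock (MHz).
# Assumption: one texel per TMU and one pixel per ROP per clock (theoretical peak).

def fill_rate(units: int, clock_mhz: int) -> int:
    """Return a theoretical rate in millions of texels or pixels per second."""
    return units * clock_mhz

cards = {
    "GeForce 8800 GTS": {"tmu": 24, "rop": 20, "clock": 500},
    "Radeon X1950 XTX": {"tmu": 16, "rop": 16, "clock": 650},
}

rates = {}
for name, spec in cards.items():
    texel = fill_rate(spec["tmu"], spec["clock"])
    pixel = fill_rate(spec["rop"], spec["clock"])
    rates[name] = (texel, pixel)
    print(f"{name}: {texel} Mtexel/s, {pixel} Mpixel/s")
```

On paper the GTS leads in texel rate while the Radeon is marginally ahead in pixel rate, so neither card wins outright.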

It is noteworthy that the GeForce 8800 GTS and the Radeon X1950 XTX have the same memory bandwidth, 64 GB/s, but they get there differently: the GeForce 8800 GTS uses a 320-bit memory bus with GDDR3 memory running at 1600 MHz, while the Radeon X1950 XTX uses 2 GHz GDDR4 memory on a 256-bit bus. Given ATI's claims that the R580's ring-bus memory controller is superior to Nvidia's conventional controller, it will be interesting to see whether the Radeon gains an edge over its new-generation competitor at high resolutions with full-screen anti-aliasing enabled, as it did against the GeForce 7.
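The equal 64 GB/s figure can be checked with the standard peak-bandwidth formula: bus width in bits times effective data rate, divided by 8 to get bytes. The sketch below uses decimal gigabytes (1 GB = 1000 MB), which is how such figures are usually quoted.

```python
# Peak memory bandwidth: bus width (bits) x effective data rate (MHz) / 8 bits per byte.
# bits x MT/s = Mbit/s; / 8 = MB/s; / 1000 = GB/s (decimal units).

def bandwidth_gb_s(bus_width_bits: int, effective_mhz: int) -> float:
    """Return peak bandwidth in GB/s."""
    return bus_width_bits * effective_mhz / 8 / 1000

gts_bw = bandwidth_gb_s(320, 1600)   # GeForce 8800 GTS: 320-bit GDDR3 at 1600 MHz
xtx_bw = bandwidth_gb_s(256, 2000)   # Radeon X1950 XTX: 256-bit GDDR4 at 2000 MHz

print(f"GeForce 8800 GTS: {gts_bw:.1f} GB/s")
print(f"Radeon X1950 XTX: {xtx_bw:.1f} GB/s")
```

The wider bus compensates exactly for the slower memory, so any difference at high resolutions must come from the controllers rather than raw bandwidth.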

The less expensive GeForce 8800 GTS with 320MB of memory, announced on February 12, 2007 and intended to replace the GeForce 7950 GT in the performance-mainstream segment, differs from the regular model only in the amount of video memory. In fact, all Nvidia had to do to create this card was replace the 512 Mbit memory chips with 256 Mbit ones. This simple and easy-to-manufacture solution allowed Nvidia to assert its technological superiority in the $299 price category, which is quite popular with users. Later in this review we will find out how this affected the performance of the new product and whether a potential buyer should pay an extra $150 for the model with 640 MB of video memory.

In today's review, the GeForce 8800 GTS 640MB is represented by the MSI NX8800GTS-T2D640E-HD-OC video adapter. Let's take a closer look at this product.

MSI NX8800GTS-T2D640E-HD-OC: packaging and contents

The video adapter arrived at our laboratory in its retail version, packed in a colorful box along with all its accessories. The box turned out to be relatively small, especially compared to the box of the MSI NX6800 GT, which in its day could rival Asustek Computer's packaging in size. Despite its modest dimensions, the MSI box traditionally comes with a convenient carrying handle.

The box is designed in calm blue and white colors that are easy on the eyes; the front is decorated with an image of a pretty red-haired angel girl, so there are none of the aggressive motifs so popular among video card manufacturers. Three stickers inform the buyer that the card is factory-overclocked, supports HDCP and comes with the full version of Company of Heroes. The back of the box carries information about Nvidia SLI and MSI D.O.T. Express. The latter is a dynamic overclocking technology that, according to MSI, can increase the video adapter's performance by 2% to 10%, depending on the overclocking profile used.

Opening the box, in addition to the video adapter itself, we found the following set of accessories:

Quick Installation Guide

Quick Start Guide

Adapter DVI-I -> D-Sub

YPbPr / S-Video / RCA Splitter

S-Video cable

Power adapter 2x Molex -> 6-pin PCI Express

CD with MSI Drivers and Utilities

Company of Heroes Double Disc Edition

Both guides are printed as posters; in our opinion, they are too simple and contain only the most basic information. The pursuit of language coverage (the short user's guide comes in 26 languages) means that nothing particularly useful can be learned from it beyond the basics of installing the card in a system. We think the manuals could be a little more detailed, which would help inexperienced users.

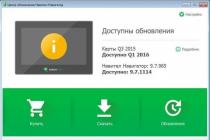

The driver disc contains an outdated version of Nvidia ForceWare, 97.29, as well as a number of proprietary utilities, among which MSI DualCoreCenter and MSI Live Update 3 deserve special mention. For full functionality, DualCoreCenter requires an MSI motherboard equipped with a CoreCell chip, so it is of little use to owners of other manufacturers' motherboards. The MSI Live Update 3 utility keeps track of driver and BIOS updates and conveniently installs them over the Internet. This is a fairly convenient option, especially for those who do not want to deal with the intricacies of updating the video adapter's BIOS manually.

MSI deserves praise for including the full version of the popular tactical RTS Company of Heroes. This really is a top-tier game, with excellent graphics and thoroughly worked-out gameplay; many players call it the best game in its genre, as confirmed by numerous awards, including the title of "Best Strategy Game" at E3 2006. As we have already noted, although it is a real-time strategy game, Company of Heroes boasts modern graphics on par with a good first-person shooter, so it is perfect for demonstrating the capabilities of the GeForce 8800 GTS. In addition to Company of Heroes, the discs include a demo version of Warhammer 40,000: Dawn of War - Dark Crusade.

We can confidently call the MSI NX8800GTS-T2D640E-HD-OC bundle a good one, thanks to the full version of the very popular tactical RTS Company of Heroes and MSI's convenient software.

MSI NX8800GTS-T2D640E-HD-OC PCB Design

For the GeForce 8800 GTS, Nvidia developed a separate PCB that is more compact than the one used for the GeForce 8800 GTX. Since all GeForce 8800 cards are supplied to Nvidia's partners ready-made, almost everything said below applies not only to the MSI NX8800GTS but also to any other GeForce 8800 GTS, whether with 640 or 320 MB of video memory.

The GeForce 8800 GTS PCB is much shorter than that of the GeForce 8800 GTX: only 22.8 centimeters versus almost 28 centimeters for the GeForce 8 flagship. In fact, the GeForce 8800 GTS is the same size as the Radeon X1950 XTX, or even slightly smaller, since its cooler does not protrude beyond the PCB.

Our MSI NX8800GTS sample uses a board with a green solder mask, although the company's website shows the product with a PCB in the more usual black. Both "black" and "green" GeForce 8800 GTX and GTS cards are currently on sale. Despite numerous rumors circulating on the web, there is no difference between them other than the PCB color, as confirmed by Nvidia's official website. What is the reason for this "return to the roots"?

There are many conflicting rumors on this score. According to some, the black coating is more toxic than the traditional green; according to others, black is harder to apply or more expensive. In practice, this is most likely not the case: as a rule, solder masks of different colors cost the same, which rules out any extra difficulties with particular colors. The most probable explanation is the simplest and most logical one: cards of different colors are produced by different contract manufacturers, Foxconn and Flextronics. Moreover, Foxconn probably uses coatings of both colors, since we have seen both "black" and "green" cards from this manufacturer.

The GeForce 8800 GTS power circuitry is almost as complex as that of the GeForce 8800 GTX and even contains more electrolytic capacitors, but its layout is denser and it has only one external power connector, which made it possible to make the PCB much shorter. GPU power is managed by the same digital PWM controller as on the GeForce 8800 GTX, the Primarion PX3540. Memory power is controlled by a second controller, the Intersil ISL6549, which, by the way, is absent on the GeForce 8800 GTX, where the memory power circuit is different.

The left part of the PCB, which holds the main components of the GeForce 8800 GTS (GPU, NVIO and memory), is almost identical to the corresponding section of the GeForce 8800 GTX PCB. This is not surprising, since developing the entire board from scratch would have required significant money and time. Besides, a board designed from scratch would probably not have been much simpler, given the need to use the same G80 and NVIO pair as the flagship model. The only visible difference from the GeForce 8800 GTX is the absence of the second MIO (SLI) "comb"; in its place there is a footprint for a technological connector with latches, possibly serving the same function, but it is not wired. Even the 384-bit memory bus layout is preserved, and the bus itself was cut to the required width in the simplest way: the board carries 10 GDDR3 chips instead of 12. Since each chip has a 32-bit interface, 10 chips give the required 320 bits in total. Theoretically, nothing prevents the development of a GeForce 8800 GTS with a 384-bit memory bus, but such a card is extremely unlikely to appear in practice; a full-fledged GeForce 8800 GTX with reduced frequencies has a much better chance of being released.

The MSI NX8800GTS-T2D640E-HD-OC is equipped with 10 Samsung K4J52324QE-BC12 GDDR3 chips with a capacity of 512 Mbit each, operating at 1.8 V and rated for 800 (1600) MHz. According to Nvidia's official specifications for the GeForce 8800 GTS, the memory should run at exactly this frequency. However, the MSI NX8800GTS version we are reviewing has "OC" in its name: it is factory-overclocked, so the memory runs at a slightly higher 850 (1700) MHz, which raises the bandwidth from 64 GB/s to 68 GB/s.

Since the only difference between the GeForce 8800 GTS 320MB and the regular model is the halved video memory, this card simply uses 256 Mbit memory chips, for example Samsung K4J55323QC/QI series or Hynix HY5RS573225AFP. Otherwise, the two GeForce 8800 GTS models are identical down to the smallest details.
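The chip arithmetic from the last three paragraphs can be summarized in one small function: bus width is the chip count times 32 bits, and capacity is the chip count times chip density. The GeForce 8800 GTX row assumes 512 Mbit chips, which is what its 768 MB over 12 chips implies.

```python
# Memory bus width and capacity from the number and density of GDDR3 chips.
CHIP_BUS_BITS = 32  # each GDDR3 chip has a 32-bit interface

def memory_config(chips: int, chip_mbit: int) -> tuple[int, int]:
    """Return (bus width in bits, capacity in MB) for a given chip population."""
    bus_bits = chips * CHIP_BUS_BITS
    capacity_mb = chips * chip_mbit // 8   # 8 Mbit = 1 MB
    return bus_bits, capacity_mb

configs = {
    "GeForce 8800 GTX": memory_config(12, 512),        # 512 Mbit chips inferred from 768 MB / 12
    "GeForce 8800 GTS 640MB": memory_config(10, 512),
    "GeForce 8800 GTS 320MB": memory_config(10, 256),
}

for name, (bus, capacity) in configs.items():
    print(f"{name}: {bus}-bit, {capacity} MB")
```

Dropping two chips trims both the bus and the capacity; swapping chip density changes only the capacity, which is why the two GTS models share the same bus.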

The GPU marking on the NX8800GTS differs somewhat from that of the GeForce 8800 GTX processor and reads "G80-100-K0-A2", whereas on the reference flagship card the chip is marked "G80-300-A2". We know that GeForce 8800 GTS production can use G80 samples that have defects in some functional blocks and/or have failed frequency binning. Perhaps the marking reflects exactly these features.

The GeForce 8800 GTS GPU has 96 stream processors out of 128, 24 TMUs out of 32, and 20 ROPs out of 24. For the standard GeForce 8800 GTS, the base GPU frequency is 500 MHz (513 MHz actual) and the shader processor frequency is 1200 MHz (1188 MHz actual), but on the MSI NX8800GTS-T2D640E-HD-OC these parameters are 576 and 1350 MHz, matching the frequencies of the GeForce 8800 GTX. We will see how this affects the performance of the MSI product later, in the section on gaming test results.

The NX8800GTS output configuration is standard: two DVI-I connectors capable of dual-link operation and a universal seven-pin mini-DIN connector, which lets you connect both HDTV devices via the analog YPbPr interface and SDTV devices via S-Video or composite. On the MSI card, both DVI connectors are neatly covered with rubber protective caps, a rather pointless but pleasant touch.

MSI NX8800GTS-T2D640E-HD-OC: cooling system design

The cooling system installed on the MSI NX8800GTS, as on the overwhelming majority of GeForce 8800 GTS cards from other vendors, is a shortened version of the GeForce 8800 GTX cooler described in the corresponding review.

The heatsink and the heat pipe that carries heat away from the copper base in contact with the GPU heat spreader are shortened. The flat U-shaped heat pipe is also positioned differently: it is pressed into the base and ensures an even distribution of the heat flow. The aluminum frame on which all the cooler parts are mounted has numerous protrusions where it contacts the memory chips, the power transistors of the voltage regulator and the NVIO die. Reliable thermal contact is ensured by the traditional inorganic fiber pads impregnated with white thermal paste. The GPU uses a different, but also familiar to our readers, thick dark gray thermal paste.

Because the cooling system contains relatively few copper parts, it is light, and mounting it does not require special backplates to prevent fatal bending of the PCB. Eight ordinary spring-loaded screws attaching the cooler directly to the board are enough. The risk of damaging the GPU is practically nil, since it is covered by a heat spreader and surrounded by a wide metal frame that protects the chip from a possibly skewed cooler and the board from excessive bending.

The heatsink is cooled by a radial fan with an impeller about 75 millimeters in diameter. It has the same electrical rating as the fan in the GeForce 8800 GTX cooler, 0.48 A at 12 V, and is connected to the board via a four-pin connector. The whole assembly is covered by a translucent plastic shroud, so hot air is exhausted through the slots in the mounting bracket.

The GeForce 8800 GTX and 8800 GTS cooler design is well thought out, reliable, time-tested, practically silent and highly efficient, so there is no point in replacing it. MSI only replaced the Nvidia sticker on the shroud with its own, repeating the artwork on the box, and put another sticker with its logo on the fan.

MSI NX8800GTS-T2D640E-HD-OC: noise and power consumption

To assess the noise generated by the MSI NX8800GTS cooling system, we used a Velleman DVM1326 digital sound level meter with 0.1 dB resolution. Measurements were taken with A-weighting. At the time of measurement, the background noise level in the laboratory was 36 dBA, and the noise level at a distance of one meter from a running test bench with a passively cooled graphics card was 40 dBA.

In terms of noise, the cooling system of the NX8800GTS (and of any other GeForce 8800 GTS) behaves exactly like the one on the GeForce 8800 GTX. The noise level is very low in all modes; in this respect the new Nvidia design surpasses even the excellent GeForce 7900 GTX cooler, previously considered the best in its class. Complete silence without any loss of cooling efficiency could only be achieved with water cooling, especially if serious overclocking is planned.

As our readers know, reference GeForce 8800 GTX samples from the first batches refused to run on our test bench for measuring video card power consumption. However, most newer GeForce 8800 cards, including the MSI NX8800GTS-T2D640E-HD-OC, worked without problems on this system, which has the following configuration:

Intel Pentium 4 560 processor (3.60GHz, 1MB L2);

Intel Desktop Board D925XCV motherboard (i925X);

PC-4300 DDR2 SDRAM (2x512MB);

Samsung SpinPoint SP1213C hard drive (120 GB, Serial ATA-150, 8MB buffer);

Microsoft Windows XP Pro SP2, DirectX 9.0c.

As we have reported, the motherboard at the heart of the measurement platform has been specially modified: measuring shunts fitted with connectors for the measuring equipment are inserted into the power lines of the PCI Express x16 slot. The 2x Molex -> 6-pin PCI Express power adapter is fitted with the same kind of shunt. The measuring instrument is a Velleman DVM850BL multimeter with a measurement error of no more than 0.5%.
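The shunt method boils down to Ohm's law: the voltage drop across a shunt of known resistance gives the current in that line, and current times rail voltage gives power. The sketch below is only an illustration of that arithmetic; the shunt resistance and all the readings are hypothetical values, not data from our bench.

```python
# Power from shunt measurements: I = V_drop / R_shunt, P = V_rail * I.
# HYPOTHETICAL: shunt resistance and readings below are illustrative, not bench data.
SHUNT_OHMS = 0.01  # hypothetical shunt resistance

def rail_power_w(rail_volts: float, shunt_drop_mv: float,
                 shunt_ohms: float = SHUNT_OHMS) -> float:
    """Power drawn through one supply line, in watts."""
    current_a = (shunt_drop_mv / 1000) / shunt_ohms
    return rail_volts * current_a

readings = [  # (line, nominal rail voltage, shunt voltage drop in mV), all hypothetical
    ("PCIe slot 12 V", 12.0, 30.0),
    ("PCIe slot 3.3 V", 3.3, 10.0),
    ("6-pin connector 12 V", 12.0, 50.0),
]

total_w = 0.0
for line, volts, drop_mv in readings:
    watts = rail_power_w(volts, drop_mv)
    total_w += watts
    print(f"{line}: {watts:.1f} W")
print(f"Total: {total_w:.1f} W")
```

Summing every line that feeds the card (slot rails plus external connectors) is what makes the total a card-only figure, independent of the rest of the system.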

To load the video adapter in 3D mode, we run the first graphics test (SM3.0/HDR) from the Futuremark 3DMark06 suite in an infinite loop at 1600x1200 with 16x anisotropic filtering forced. 2D peak mode is emulated by running the 2D Transparent Windows benchmark from the Futuremark PCMark05 suite.

After the standard measurement procedure, we obtained reliable power consumption data not only for the MSI NX8800GTS-T2D640E-HD-OC but for the entire Nvidia GeForce 8800 family.

The GeForce 8800 GTX does indeed outdo the previous power consumption "leader", the Radeon X1950 XTX, but only by 7 watts. Considering the enormous complexity of the G80, 131.5 watts in 3D mode can safely be considered a good result. Both additional power connectors of the GeForce 8800 GTX carry approximately the same load, not exceeding 45 watts even in the most demanding mode. Although the GeForce 8800 GTX PCB provides for installing one eight-pin power connector instead of a six-pin one, this is unlikely to become relevant even with a significant increase in GPU and memory clock speeds. In idle mode, the efficiency of the Nvidia flagship leaves much to be desired, but this is the price of 681 million transistors and a shader processor frequency that is huge by GPU standards. The high idle power consumption is also partly due to the fact that the GeForce 8800 family does not lower its clock frequencies in this mode.

The power consumption of both GeForce 8800 GTS variants is noticeably more modest, although they cannot match the efficiency of Nvidia cards based on the previous-generation G71 core. The single power connector on these cards carries a much heavier load, in some cases 70 watts or more. The power consumption of the 640MB and 320MB versions differs insignificantly, which is not surprising, since memory capacity is the only difference between these cards. The MSI product, running at higher frequencies, consumes more than the standard GeForce 8800 GTS, about 116 watts under 3D load, which is still less than the Radeon X1950 XTX. Of course, in 2D mode the AMD card is much more economical, but video adapters of this class are bought specifically for 3D, so this parameter is less critical than power consumption in games and 3D applications.
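To put these figures in context, they can be compared with the PCI Express power limits: 75 W from the x16 slot, 75 W per six-pin connector and 150 W per eight-pin connector. The sketch below uses only those specification limits and the measured totals quoted in the text; it is a rough headroom check, not a per-rail analysis.

```python
# PCI Express power limits (per the PCIe card electromechanical specifications).
SLOT_W = 75        # x16 slot
SIX_PIN_W = 75     # one 6-pin auxiliary connector
EIGHT_PIN_W = 150  # one 8-pin auxiliary connector

def board_budget_w(aux_connectors_w: list[int]) -> int:
    """Total power allowed: slot plus all auxiliary connectors."""
    return SLOT_W + sum(aux_connectors_w)

budgets = {
    "MSI NX8800GTS OC": board_budget_w([SIX_PIN_W]),
    "GeForce 8800 GTX": board_budget_w([SIX_PIN_W, SIX_PIN_W]),
}
measured_w = {"MSI NX8800GTS OC": 116.0, "GeForce 8800 GTX": 131.5}  # figures quoted in the text

for name, limit in budgets.items():
    used = measured_w[name]
    print(f"{name}: {used:.1f} W of {limit} W ({limit - used:.1f} W headroom)")

# GTX layout with an 8-pin connector in place of one 6-pin connector.
gtx_8pin_budget = board_budget_w([EIGHT_PIN_W, SIX_PIN_W])
print(f"GeForce 8800 GTX with 6-pin + 8-pin: {gtx_8pin_budget} W allowed")

# The GTS's single 6-pin connector was seen carrying 70 W or more.
print(f"6-pin headroom at 70 W: {SIX_PIN_W - 70} W")
```

The GTX already has ample margin with two six-pin connectors, which is why the eight-pin option matters little, while the GTS's single connector runs close to its limit.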

MSI NX8800GTS-T2D640E-HD-OC: Overclocking Features

Overclocking cards of the Nvidia GeForce 8800 family involves a number of peculiarities that we consider necessary to explain to our readers. As you probably remember, the first seventh-generation GeForce cards, based on the 0.11-micron G70 core, could raise the ROP and pixel processor frequencies only in 27 MHz steps, and if the overclock was smaller than that step, there was practically no performance gain. Later, in G71-based cards, Nvidia returned to the standard overclocking scheme with 1 MHz increments, but in the eighth generation of GeForce the discrete frequency steps reappeared.

The way clock frequencies are generated and changed in the GeForce 8800 is rather nontrivial, because the shader processor units in the G80 run at a much higher frequency than the rest of the GPU; the ratio is about 2.3 to 1. Although the main graphics core frequency can change in steps smaller than 27 MHz, the shader processor frequency always changes in 54 MHz (2x27 MHz) steps. This creates additional difficulties when overclocking, because all utilities manipulate the main core frequency rather than the frequency of the shader "domain". However, there is a simple formula that lets you determine the frequency of the GeForce 8800 stream processors after overclocking:

OC shader clk = Default shader clk / Default core clk * OC core clk

where OC shader clk is the shader frequency being calculated (approximate), Default shader clk is the stock shader processor frequency, Default core clk is the stock core frequency, and OC core clk is the overclocked core frequency.
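The formula is a simple proportion: the shader clock scales with the core clock at the stock ratio. A direct translation, using the MSI card's stock 576/1350 MHz as an example, looks like this.

```python
def oc_shader_clock(default_shader: float, default_core: float, oc_core: float) -> float:
    """Approximate shader clock after overclocking: keep the stock shader/core ratio."""
    return default_shader / default_core * oc_core

# MSI NX8800GTS OC Edition stock clocks: 576 MHz core, 1350 MHz shaders (ratio ~2.34).
for core in (576, 621, 675):
    print(f"core {core} MHz -> shaders ~{oc_shader_clock(1350, 576, core):.1f} MHz")
```

The result is the unrounded target; the hardware then settles on a nearby available shader frequency, as described next.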

Let's look at how the MSI NX8800GTS-T2D640E-HD-OC behaves when overclocked with the RivaTuner2 FR utility, which lets you monitor the actual frequencies of the different regions, or "domains" as they are also called, of the G80 GPU. Since the MSI product has the same GPU frequencies (576/1350 MHz) as the GeForce 8800 GTX, the following information also applies to Nvidia's flagship. We will raise the main GPU frequency in 5 MHz steps: this step is fairly small and, at the same time, not a multiple of 27 MHz.

Our empirical check confirmed that the main graphics core frequency really does change in variable steps of 9, 18 or 27 MHz, and we could not identify the pattern. The shader processor frequency changed in 54 MHz steps in all cases. Because of this, some main-domain frequencies of the G80 are practically useless for overclocking and only cause extra GPU heating. For example, there is no point in raising the main core frequency to 621 MHz: the shader unit frequency will still be 1458 MHz. Thus, the GeForce 8800 should be overclocked carefully, using the formula above and checking the monitoring data in RivaTuner or another utility with similar functionality.
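The 54 MHz granularity can be modeled by scaling the shader clock with the formula and then snapping it to a multiple of 54 MHz. The sketch below assumes rounding to the nearest step; that is our assumption, not documented driver behavior, and it ignores the irregular 9/18/27 MHz core steps whose pattern we could not determine. Under this model, 576 MHz maps to 1350 MHz and 675 MHz maps to 1566 MHz, consistent with the clocks reported in this review.

```python
import math

SHADER_STEP_MHZ = 54  # shader clock observed to move in 2 x 27 MHz steps

def quantized_shader_clock(oc_core: float, default_core: float = 576,
                           default_shader: float = 1350,
                           step: int = SHADER_STEP_MHZ) -> int:
    """Scale the shader clock with the core clock, then snap it to the nearest
    multiple of `step`. Nearest-step rounding is an ASSUMPTION of this model."""
    target = default_shader / default_core * oc_core
    return step * math.floor(target / step + 0.5)

# Several core settings land on the same shader clock, so they add heat but no shader speed.
for core in (594, 603, 612, 621, 630, 639, 675):
    print(f"core {core} MHz -> shaders {quantized_shader_clock(core)} MHz")
```

In this model, 612, 621 and 630 MHz all yield 1458 MHz shaders, which illustrates why checking the monitored shader clock after each change is worthwhile.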

It would be unreasonable to expect serious overclocking results from the already factory-overclocked NX8800GTS, but the card unexpectedly showed quite good potential, at least on the GPU side. We managed to raise its frequencies from the factory 576/1350 MHz to 675/1566 MHz, and the NX8800GTS stably passed several consecutive 3DMark06 runs without any additional cooling. The GPU temperature, according to RivaTuner, did not exceed 70 degrees.

The memory overclocked much worse, since the NX8800GTS OC Edition is equipped with chips rated for 800 (1600) MHz that already run above their rating, at 850 (1700) MHz. As a result, we had to stop at 900 (1800) MHz, since further attempts to raise the memory frequency invariably led to a freeze or a driver crash.

Thus, the card showed good overclocking potential, but only for the GPU: the comparatively slow memory chips did not allow a significant frequency increase. For these chips, reaching the GeForce 8800 GTX memory frequency should be considered a good result, and at this frequency the 320-bit bus already provides a serious bandwidth advantage over the Radeon X1950 XTX: 72 GB/s versus 64 GB/s. Of course, overclocking results may vary from one MSI NX8800GTS OC Edition sample to another and with additional measures such as modifying the card's power circuitry or installing water cooling.
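The bandwidth figures quoted for the reference, factory-overclocked and manually overclocked memory all follow from the same peak-bandwidth formula, bus width times effective data rate divided by 8, in decimal gigabytes.

```python
def bandwidth_gb_s(bus_width_bits: int, effective_mhz: int) -> float:
    """Peak bandwidth in GB/s: bits x MT/s / 8 -> MB/s, / 1000 -> GB/s."""
    return bus_width_bits * effective_mhz / 8 / 1000

bw = {
    "reference GeForce 8800 GTS": bandwidth_gb_s(320, 1600),
    "MSI factory overclock": bandwidth_gb_s(320, 1700),
    "our manual overclock": bandwidth_gb_s(320, 1800),
    "Radeon X1950 XTX": bandwidth_gb_s(256, 2000),
}
for name, value in bw.items():
    print(f"{name}: {value:.0f} GB/s")
```

Each 100 MHz of effective memory frequency on a 320-bit bus is worth 4 GB/s, which is why even the modest memory overclock opens a clear lead over the Radeon.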

Test platform configuration and test methods

The comparative performance tests were carried out on platforms with the following configuration:

AMD Athlon 64 FX-60 processor (2 x 2.60GHz, 2 x 1MB L2)

Abit AN8 32X (nForce4 SLI X16) motherboard for Nvidia GeForce cards

Asus A8R32-MVP Deluxe (ATI CrossFire Xpress 3200) motherboard for ATI Radeon cards

OCZ PC-3200 Platinum EL DDR SDRAM (2x1GB, CL2-3-2-5)

Maxtor MaXLine III 7B250S0 hard drive (Serial ATA-150, 16MB buffer)

Creative SoundBlaster Audigy 2 sound card

Enermax Liberty 620W power supply unit (ELT620AWT, rated power 620W)

Dell 3007WFP monitor (30", max 2560x1600)

Microsoft Windows XP Pro SP2, DirectX 9.0c

AMD Catalyst 7.2

Nvidia ForceWare 97.92

Since we consider trilinear and anisotropic filtering optimizations unjustified, the drivers were configured in our standard way, providing the highest possible texture filtering quality:

AMD Catalyst:

Catalyst A.I.: Standard

Mipmap Detail Level: High Quality

Wait for vertical refresh: Always off

Adaptive antialiasing: Off

Temporal antialiasing: Off

High Quality AF: On

Nvidia ForceWare:

Texture Filtering: High Quality

Vertical sync: Off

Trilinear optimization: Off

Anisotropic optimization: Off

Anisotropic sample optimization: Off

Gamma correct antialiasing: On

Transparency antialiasing: Off

Other settings: default

In each game, the highest possible graphics quality level was set, and the games' configuration files were not modified. Performance data was captured either with the game's built-in tools or, where none were available, with the Fraps utility. Wherever possible, minimum performance data was also recorded.
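For readers who want to derive average and minimum figures from their own frame-time logs, the sketch below shows one common convention: the average is total frames divided by total time, and the minimum is the lowest number of frames completed in any full one-second interval. It assumes a list of cumulative frame timestamps in milliseconds; it does not model the exact format of Fraps log files, and the sample run is hypothetical.

```python
import math

def fps_stats(timestamps_ms: list[float]) -> tuple[float, int]:
    """Return (average fps, minimum per-second fps) from cumulative frame timestamps.
    Convention (ours): second k covers the interval (k*1000, (k+1)*1000] ms after start;
    a trailing partial second is ignored for the minimum."""
    start, end = timestamps_ms[0], timestamps_ms[-1]
    frames = len(timestamps_ms) - 1                 # frames completed after the first stamp
    average_fps = frames / ((end - start) / 1000)
    full_seconds = int((end - start) // 1000)
    if full_seconds == 0:
        raise ValueError("need at least one full second of data")
    per_second = [0] * full_seconds
    for t in timestamps_ms[1:]:
        idx = math.ceil((t - start) / 1000) - 1
        if idx < full_seconds:
            per_second[idx] += 1
    return average_fps, min(per_second)

# Hypothetical 2-second run: 50 fps for the first second, then 25 fps.
run = [i * 20 for i in range(51)] + [1000 + j * 40 for j in range(1, 26)]
avg, minimum = fps_stats(run)
print(f"average {avg:.1f} fps, minimum {minimum} fps")
```

A per-second minimum smooths over single slow frames; other conventions (for example, the slowest individual frame) produce lower and noisier minimums.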

Testing was carried out at three resolutions standard for our methodology: 1280x1024, 1600x1200 and 1920x1200. One of the purposes of this review is to evaluate how the video memory size of the GeForce 8800 GTS affects performance. In addition, the specifications and price of both versions of this video adapter let us expect a fairly high level of performance in modern games with FSAA 4x, so we used the "eye candy" mode wherever possible.

FSAA and anisotropic filtering were enabled through the game settings; where a game lacked them, they were forced via the corresponding settings of the ATI Catalyst and Nvidia ForceWare drivers. Testing without full-screen anti-aliasing was used only for games that do not support FSAA for technical reasons, or for games using FP HDR when GeForce 7 cards were also being tested, since that family cannot run both features simultaneously.

Since one of our tasks was to compare the performance of graphics cards that differ only in the amount of video memory, the MSI NX8800GTS-T2D640E-HD-OC was tested twice: at its factory frequencies, and at frequencies reduced to the GeForce 8800 GTS reference values of 513/1188/800 (1600) MHz. In addition to the MSI product and the reference Nvidia GeForce 8800 GTS 320MB, the following video adapters took part in testing:

Nvidia GeForce 8800 GTX (G80, 576/1350/1800MHz, 128sp, 32tmu, 24rop, 384-bit, 768MB)

Nvidia GeForce 7950 GX2 (2xG71, 500/1200MHz, 48pp, 16vp, 48tmu, 32rop, 256-bit, 512MB)

AMD Radeon X1950 XTX (R580+, 650/2000MHz, 48pp, 8vp, 16tmu, 16rop, 256-bit, 512MB)

The following games and applications were used as test software:

3D first-person shooters:

Battlefield 2142

Call of Juarez

Far Cry

F.E.A.R. Extraction Point

Tom Clancy's Ghost Recon Advanced Warfighter

Half-Life 2: Episode One

Prey

Serious Sam 2

S.T.A.L.K.E.R.: Shadow of Chernobyl

Third-person 3D shooters:

Tomb Raider: Legend

RPG:

Gothic 3

Neverwinter Nights 2

The Elder Scrolls IV: Oblivion

Simulators:

Strategy games:

Command & Conquer: Tiberium Wars

Company of Heroes

Supreme Commander

Synthetic gaming tests:

Futuremark 3DMark05

Futuremark 3DMark06

Game tests: Battlefield 2142

There is no significant difference between the two GeForce 8800 GTS versions with different video memory capacities up to 1920x1200, although at 1600x1200 the younger model trails the older one by about 4-5 frames per second, with both delivering quite comfortable performance. The 1920x1440 resolution, however, is a turning point: the GeForce 8800 GTS 320MB drops out of the race, falling behind by more than 1.5 times in average fps and by half in minimum fps. It even loses to previous-generation cards. This points either to a shortage of video memory or to a problem with how the GeForce 8800 family manages it.

The MSI NX8800GTS OC Edition noticeably outperforms the reference model from 1600x1200 upward. It certainly cannot catch the GeForce 8800 GTX, although at 1920x1440 the gap between the two becomes impressively narrow. Evidently, the difference in memory bus width between the GeForce 8800 GTS and GTX matters little here.

Game tests: Call of Juarez

Both GeForce 8800 GTS models show the same performance at all resolutions, including 1920x1200. This is to be expected, since the test was run with HDR enabled and FSAA disabled. At nominal clocks, both cards trail the GeForce 7950 GX2.

The overclocked MSI card reaches parity with it at high resolutions, but those resolutions are impractical in this game even with a GeForce 8800 GTX in the system. At 1600x1200, for example, Nvidia's flagship averages only 40 fps, with dips to 21 fps in graphically intensive scenes. For a first-person shooter, such figures can hardly be called truly comfortable.

Game tests: Far Cry

The game is far from new and is poorly suited for testing modern high-end graphics cards. Even with anti-aliasing enabled, noticeable differences between them appear only at 1920x1200. There the GeForce 8800 GTS 320MB runs short of video memory and trails the 640MB model by about 12%. However, since Far Cry's requirements are modest by today's standards, the player's comfort is not at risk.

The MSI NX8800GTS OC Edition is almost on a par with the GeForce 8800 GTX: Far Cry clearly cannot put the latter's extra power to use.

Because of the nature of the demo recorded on the Research level, the results are more spread out: differences between members of the GeForce 8800 family are visible even at 1600x1200. The 320MB version already lags here, even though the action takes place in the enclosed space of an underground cave. The gap between the MSI product and the GeForce 8800 GTX at 1920x1200 is much larger than in the previous case, since shader processor performance plays a bigger role on this level.

In FP HDR mode, the GeForce 8800 GTS 320MB no longer has problems with video memory capacity and is in no way inferior to its older sibling, delivering decent performance at all resolutions. The MSI card adds another 15%, but even the version running at standard clocks is fast enough for 1920x1200, and the GeForce 8800 GTX will undoubtedly keep the player comfortable even at 2560x1600.

Game tests: F.E.A.R. Extraction Point

The visual richness of F.E.A.R. demands matching resources from the graphics card: the GeForce 8800 GTS 320MB already trails by 5% at 1280x1024, and at the next resolution, 1600x1200, the gap jumps to 40%.

The benefit of overclocking the GeForce 8800 GTS is not obvious here: the overclocked and standard versions are equally playable at 1600x1200, while at the next resolution the extra speed from overclocking is simply not enough to reach a level comfortable for a first-person shooter. Only the GeForce 8800 GTX, with its 128 active shader processors and 384-bit memory subsystem, manages that.

Game tests: Tom Clancy's Ghost Recon Advanced Warfighter

Because GRAW uses deferred rendering, FSAA is technically impossible in this game, so results are given only for the mode with anisotropic filtering.

The advantage of the MSI NX8800GTS OC Edition over the reference card grows with resolution, reaching 19% at 1920x1200. Here, that 19% is exactly what lifts the average to 55 fps, which is quite comfortable for the player.

As for the two GeForce 8800 GTS models with different amounts of video memory, their performance does not differ at all.

Game tests: Half-Life 2: Episode One

At 1280x1024, performance is limited by the CPU of our test system, so all cards show the same result. At 1600x1200 differences appear, but they are not fundamental, at least among the three GeForce 8800 GTS versions: all three deliver very comfortable performance. The same holds at 1920x1200. Despite its high-quality graphics, the game is undemanding in terms of video memory, and the GeForce 8800 GTS 320MB trails the older and much more expensive 640MB model by only about 5%. MSI's overclocked GeForce 8800 GTS confidently takes second place behind the GeForce 8800 GTX.

Although the GeForce 7950 GX2 scores higher than the GeForce 8800 GTS at 1600x1200, keep in mind the problems that can arise with a card that is in fact an SLI tandem, as well as the significantly lower texture filtering quality of the GeForce 7 family. Nvidia's new solution certainly has driver problems too, but it offers promising capabilities and, unlike the GeForce 7950 GX2, has every chance of shedding its teething problems very soon.

Game tests: Prey

The GeForce 8800 GTS 640MB shows no advantage whatsoever over the GeForce 8800 GTS 320MB, possibly because the game uses a modified Doom 3 engine and has no special appetite for video memory. As with GRAW, the higher performance of the NX8800GTS OC Edition lets its owners count on fairly comfortable play at 1920x1200; for comparison, a regular GeForce 8800 GTS delivers the same figures at 1600x1200. The flagship of the line, the GeForce 8800 GTX, is beyond competition.

Game tests: Serious Sam 2

This title from the Croatian developers at Croteam has always demanded 512 MB of video memory, punishing cards with less by a monstrous drop in performance. The 320 MB of the inexpensive GeForce 8800 GTS was not enough to satisfy the game's appetite: it managed only 30 fps at 1280x1024, while the 640MB version was more than twice as fast.

For reasons unknown, the minimum performance of all GeForce 8800 cards in Serious Sam 2 is extremely low. This may be due either to architectural features of the family, which, as is well known, uses a unified architecture with no division into pixel and vertex shaders, or to flaws in the ForceWare drivers. Either way, GeForce 8800 owners will not be able to achieve complete comfort in this game.

Game tests: S.T.A.L.K.E.R.: Shadow of Chernobyl

Long awaited by many players, GSC Game World's project finally saw the light of day after many years of development, six or seven years after it was announced. The game turned out to be controversial, yet too multifaceted to describe in a few phrases. Let's just note that, compared with one of the early versions, the engine has been significantly improved. The game gained support for a number of modern technologies, including Shader Model 3.0, HDR and parallax mapping, while keeping a simplified mode with a static lighting model that delivers excellent performance on less powerful systems.

Since we focus on the highest image quality, we tested the game in full dynamic lighting mode with maximum detail. This mode, which among other things uses HDR, does not support FSAA, at least in the current version of S.T.A.L.K.E.R. Because the game loses much of its appeal with the static lighting model and DirectX 8 effects, we limited ourselves to anisotropic filtering.

The game is anything but modest in its appetite: even the GeForce 8800 GTX cannot deliver 60 fps at 1280x1024 with maximum detail. Note, however, that at low resolutions the main limiting factor is CPU performance, as the spread between cards is small and their average results are quite close.

Nevertheless, the GeForce 8800 GTS 320MB already trails its older sibling slightly here, the gap widens with resolution, and at 1920x1200 the youngest member of the GeForce 8800 family simply runs out of video memory. This is not surprising given the scale of the game's scenes and the abundance of special effects in them.

Overall, the GeForce 8800 GTX offers no serious advantage over the GeForce 8800 GTS in S.T.A.L.K.E.R., and the Radeon X1950 XTX performs as well as the GeForce 8800 GTS 320MB. AMD's card even beats Nvidia's in one respect, since it still runs at 1920x1200, but that mode is impractical because average performance is only 30-35 fps. The same applies to the GeForce 7950 GX2, which, incidentally, is somewhat ahead of both its direct competitor and the junior new-generation model.

Game tests: Hitman: Blood Money

We have noted before that 512 MB of video memory gives a graphics card some advantage in Hitman: Blood Money at high resolutions. Apparently 320 MB is also enough, since the GeForce 8800 GTS 320MB is practically on par with the regular GeForce 8800 GTS at every resolution; the difference does not exceed 5%.

Both cards, as well as MSI's overclocked GeForce 8800 GTS, allow smooth play at all resolutions, and the GeForce 8800 GTX even has enough performance headroom for higher-quality FSAA modes than standard 4x MSAA.

Game tests: Tomb Raider: Legend

Even with settings that deliver maximum image quality, the GeForce 8800 GTS 320MB copes with the game as well as the regular GeForce 8800 GTS. Both cards let the player use 1920x1200 in "eye candy" mode. The MSI NX8800GTS OC Edition is slightly faster than both reference cards, but only in average fps; the minimum stays the same. The GeForce 8800 GTX's minimum is no higher either, which may mean that this figure is determined by some peculiarity of the game engine.

Game tests: Gothic 3

The current version of Gothic 3 does not support FSAA, so testing was carried out using anisotropic filtering only.

Even without full-screen anti-aliasing, the GeForce 8800 GTS 320MB is seriously slower not only than the regular GeForce 8800 GTS but also than the Radeon X1950 XTX, and only slightly outperforms the GeForce 7950 GX2. With 26-27 fps at 1280x1024, this card is poorly suited to Gothic 3.

Note that the GeForce 8800 GTX is at most 20% faster than the GeForce 8800 GTS. By all appearances, the game cannot use all the resources of Nvidia's flagship, as the small difference between the regular and overclocked GeForce 8800 GTS also suggests.

Game tests: Neverwinter Nights 2

The game has supported FSAA since version 1.04, but HDR support is still incomplete, so we tested NWN 2 in "eye candy" mode.

As mentioned before, the minimum playability threshold for Neverwinter Nights 2 is 15 fps. The GeForce 8800 GTS 320MB balances on this edge already at 1600x1200, whereas the 640MB version never drops below 15 fps.

Game tests: The Elder Scrolls IV: Oblivion

Without HDR the game loses much of its appeal; although players' opinions on this differ, we tested TES IV with FP HDR enabled.

The performance of the GeForce 8800 GTS 320MB depends directly on the resolution: at 1280x1024 it can compete with the fastest cards of the previous generation, but at 1600x1200 and especially 1920x1200 it falls behind them, trailing the Radeon X1950 XTX by up to 10% and the GeForce 7950 GX2 by up to 25%. Still, this is a very good result for a card with an official price of only $299.

The regular GeForce 8800 GTS and MSI's overclocked version perform more confidently, delivering frame rates comfortable even by first-person shooter standards at all resolutions.

When we examined two versions of the GeForce 7950 GT with different amounts of video memory, we recorded no serious performance differences in TES IV; with the two versions of the GeForce 8800 GTS, however, the picture is completely different.

While the two behave identically at 1280x1024, at 1600x1200 the 320MB version is already more than twice as slow as the 640MB one, and at 1920x1200 its performance drops to the level of the Radeon X1650 XT. Clearly, the issue is not the amount of video memory as such but the way the driver allocates it. The problem can probably be fixed by tuning ForceWare, and we will check this once new Nvidia driver versions are released.

As for the GeForce 8800 GTS and the MSI NX8800GTS OC Edition, they provide a high level of comfort at all resolutions even in the open spaces of Oblivion's world, though of course not the roughly 60 fps they reach indoors. The most powerful cards of the previous generation simply cannot compete with them.

Game tests: X3: Reunion

All members of the GeForce 8800 family show quite high average performance, but the minimum fps remains low, which suggests the drivers still need improvement. The GeForce 8800 GTS 320MB performs the same as the GeForce 8800 GTS 640MB.

Game tests: Command & Conquer 3: Tiberium Wars

The Command & Conquer real-time strategy series is probably familiar to anyone with even the slightest interest in computer games. The latest installment, recently released by Electronic Arts, takes the player back to the familiar world of conflict between GDI and the Brotherhood of Nod, joined this time by a third faction of alien invaders. The engine is up to date and uses advanced special effects; it also has one peculiarity, a frame rate limiter fixed at around 30 fps. Perhaps this is meant to limit the speed of the AI and thus prevent it from gaining an unfair advantage over the player. Since the limiter cannot be disabled by standard means, we tested the game with it enabled and paid attention primarily to minimum fps.

Almost all participants maintain 30 fps at all resolutions, except the GeForce 7950 GX2, which has problems in SLI mode. Most likely the driver simply lacks the appropriate support, since the official Windows XP ForceWare driver for the GeForce 7 family was last updated more than six months ago.

As for the two GeForce 8800 GTS models, they show the same minimum fps and therefore offer the player the same level of comfort. Although the 320MB model trails the older one at 1920x1200, a difference of 2 fps is hardly critical and, with identical minimum performance, does not affect gameplay at all. Only the GeForce 8800 GTX, whose minimum never drops below 25 fps, delivers completely smooth, stutter-free control.

Game tests: Company of Heroes

Because of problems enabling FSAA in this game, we abandoned the "eye candy" mode and tested it in pure performance mode with anisotropic filtering enabled.

This is another game in which the GeForce 8800 GTS 320MB loses even to previous-generation cards without a unified architecture. In practice, the $299 Nvidia card is suitable only for resolutions up to 1280x1024, even with anti-aliasing disabled, while the $449 model, which differs in just one parameter, the amount of video memory, allows smooth play even at 1920x1200. That said, owners of the AMD Radeon X1950 XTX can do the same.

Game tests: Supreme Commander

Supreme Commander, unlike Company of Heroes, does not place strict demands on video memory capacity. In this game the GeForce 8800 GTS 320MB and the GeForce 8800 GTS show equally high results. Overclocking brings some additional gain, as the MSI product demonstrates, but still not enough to reach the GeForce 8800 GTX. Even so, the available performance is sufficient for all resolutions, including 1920x1200, especially since frame rate fluctuations are small and the minimum fps is only slightly below the average.

Synthetic benchmarks: Futuremark 3DMark05

Since 3DMark05 runs at 1024x768 without full-screen anti-aliasing by default, the GeForce 8800 GTS 320MB naturally scores the same as the regular version with 640 MB of video memory. The overclocked GeForce 8800 GTS from Micro-Star International posts a nice round score of 13,800 points.

Unlike the overall score, which was obtained in the default mode, the individual test results were obtained in "eye candy" mode. Even so, this had no effect on the GeForce 8800 GTS 320MB: it showed no noticeable lag behind the GeForce 8800 GTS even in the third, most resource-intensive test. The MSI NX8800GTS OC Edition consistently took second place behind the GeForce 8800 GTX, confirming the overall standings.

Synthetic benchmarks: Futuremark 3DMark06

Both versions of the GeForce 8800 GTS behave as in the previous case. However, 3DMark06 uses more sophisticated graphics, which, combined with 4x FSAA in some tests, may produce a different picture. Let's take a look.

The results of the individual test groups are also logical. The SM3.0/HDR group uses more, and more complex, shaders, so the GeForce 8800 GTX's advantage is more pronounced there than in the SM2.0 group. The AMD Radeon X1950 XTX also looks stronger when Shader Model 3.0 and HDR are used heavily, whereas the GeForce 7950 GX2 does better in the SM2.0 tests.

With FSAA enabled, the GeForce 8800 GTS 320MB does start to lose to the GeForce 8800 GTS 640MB at 1600x1200, and at 1920x1200 the new Nvidia card cannot complete the tests at all due to insufficient video memory. The loss is close to twofold in both the first and the second SM2.0 tests, even though the two build their scenes very differently.

In the first SM3.0/HDR test, the impact of video memory size on performance is clearly visible even at 1280x1024: the junior GeForce 8800 GTS trails the senior one by 33%, and at 1600x1200 the gap grows to almost 50%. The second test, with a much simpler and smaller-scale scene, is less demanding of video memory, and here the lag is 5% and about 20%, respectively.

Conclusion

Time to take stock. We tested two Nvidia GeForce 8800 GTS models: one is a direct competitor to the AMD Radeon X1950 XTX, and the other targets the $299 high-performance segment. What do the game test results tell us?

The older model, with an official price of $449, performed well. In most tests the GeForce 8800 GTS outperformed the AMD Radeon X1950 XTX; only in a few cases did it merely match the AMD card or fall behind the dual-GPU GeForce 7950 GX2. However, given the extremely high performance of the GeForce 8800 GTS 640MB, we would not compare it directly with previous-generation products: they do not support DirectX 10, and the GeForce 7950 GX2 also has significantly worse anisotropic filtering quality, plus potential problems caused by some games' incompatibility with Nvidia SLI technology.

The GeForce 8800 GTS 640MB can confidently be called the best solution in the $449-$499 price range. It is worth noting, however, that Nvidia's new generation has not yet outgrown its teething problems: Call of Juarez still shows flickering shadows, and Splinter Cell: Double Agent, although it runs, requires the special 97.94 driver release. At least until cards based on AMD's new-generation graphics processor reach the market, the GeForce 8800 GTS has every chance of taking its rightful place as "the best $449 accelerator". Nevertheless, before buying a GeForce 8800 GTS, we recommend checking how well Nvidia's new family works with your favorite games.

The new $299 GeForce 8800 GTS 320MB is also a very good buy for the money: DirectX 10 support, high-quality anisotropic filtering and decent performance at typical resolutions are just some of its advantages. So if you plan to play at 1280x1024 or 1600x1200, the GeForce 8800 GTS 320MB is an excellent choice.

Unfortunately, this technically promising card, which differs from the more expensive version only in the amount of video memory, is sometimes seriously slower than the GeForce 8800 GTS 640MB, not only in games with high video memory demands such as Serious Sam 2, but also in games where we previously recorded no difference between cards with 512 and 256 MB of memory. These include TES IV: Oblivion, Neverwinter Nights 2, F.E.A.R. Extraction Point and some others. Since 320 MB is clearly more than 256 MB, the problem is evidently related to inefficient memory allocation, but unfortunately we do not know whether it stems from driver flaws or something else. Even with these shortcomings, the GeForce 8800 GTS 320MB looks much more attractive than the GeForce 7950 GT and Radeon X1950 XT, although the latter will inevitably drop in price now that this card has arrived.

As for the MSI NX8800GTS-T2D640E-HD-OC, it is a well-equipped product that differs from the reference Nvidia card in more than just packaging, accessories and a sticker on the cooler. The card is factory-overclocked and in most games delivers a noticeable performance boost over the standard GeForce 8800 GTS 640MB. Of course, it cannot reach the level of the GeForce 8800 GTX, but extra fps never hurt. These cards are apparently carefully selected for their ability to run at higher clocks; at least our sample overclocked quite well, and it is possible that most NX8800GTS OC Edition cards can be pushed well beyond the manufacturer's own overclock.

The bundled two-disc edition of Company of Heroes, which many game reviewers consider the best strategy game of the year, deserves special praise. If you are seriously considering a GeForce 8800 GTS, this MSI product has every chance of becoming your choice.

MSI NX8800GTS-T2D640E-HD-OC: pros and cons

Advantages:

Improved performance level versus reference GeForce 8800 GTS

High level of performance at high resolutions using FSAA

Low noise level

Good overclocking potential

Good bundle

Flaws:

Insufficiently debugged drivers

GeForce 8800 GTS 320MB: advantages and disadvantages

Advantages:

Highest performance in its class

Support for new modes and methods of anti-aliasing

Excellent quality anisotropic filtering

Unified architecture with 96 shader processors

Future-proof: DirectX 10 and Shader Model 4.0 support

Efficient cooling system

Low noise level

Flaws:

Insufficiently debugged drivers (problems with video memory allocation, poor performance in some games and/or modes)

High power consumption

In our last article, we looked at the lower price range, where AMD has recently introduced new products, the Radeon HD 3400 and HD 3600 series. Today we move up the product hierarchy and talk about higher-end models. The hero of this review is the GeForce 8800 GTS 512MB.

What is the GeForce 8800 GTS 512 MB? Frankly, the name does not give a clear answer. On the one hand, it recalls the former top-end cards based on the G80 chip. On the other hand, its 512 MB of memory is less than the 640 MB of the G80-based GeForce 8800 GTS. As a result, the GTS suffix combined with the number 512 can easily mislead a potential buyer. In fact, this is one of the fastest, if not the fastest, graphics solutions NVIDIA has released to date. And the card is based not on the outgoing G80 but on the much more advanced G92, the same chip used in the GeForce 8800 GT. To make the nature of the GeForce 8800 GTS 512 MB even clearer, let's go straight to the specifications.

Comparative specifications of the GeForce 8800 GTS 512 MB

| Model | Radeon HD 3870 | GeForce 8800 GT | GeForce 8800 GTS 640 MB | GeForce 8800 GTS 512 MB | GeForce 8800 GTX |
|---|---|---|---|---|---|
| GPU codename | RV670 | G92 | G80 | G92 | G80 |
| Process technology, nm | 65 | 65 | 90 | 65 | 90 |
| Stream processors | 320 | 112 | 96 | 128 | 128 |
| Texture units | 16 | 56 | 24 | 64 | 32 |
| ROPs (blending units) | 16 | 16 | 20 | 16 | 24 |
| Core clock, MHz | 775 | 600 | 513 | 650 | 575 |
| Shader clock, MHz | 775 | 1500 | 1180 | 1625 | 1350 |
| Effective memory clock, MHz | 2250 | 1800 | 1600 | 1940 | 1800 |
| Memory size, MB | 512 | 256 / 512 / 1024 | 320 / 640 | 512 | 768 |
| Memory bus width, bits | 256 | 256 | 320 | 256 | 384 |
| Memory type | GDDR3 / GDDR4 | GDDR3 | GDDR3 | GDDR3 | GDDR3 |
| Interface | PCI Express 2.0 | PCI Express 2.0 | PCI Express | PCI Express 2.0 | PCI Express |
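The specification table lends itself to two standard back-of-the-envelope estimates: peak memory bandwidth is the bus width in bytes times the effective memory clock, and peak texel rate is the number of texture units times the core clock. The Python sketch below applies both to the table's figures. The dictionary and function names are illustrative, the results are theoretical peaks rather than measured numbers, and the texel rate simply uses the table's texture-unit counts as given.

```python
# Back-of-the-envelope peak figures from the specification table.
# Assumptions: bandwidth = bus width (bits) / 8 * effective memory clock;
# texel rate = texture units * core clock. Both are theoretical peaks.

SPECS = {
    # model: (core MHz, texture units, effective memory MHz, bus width in bits)
    "Radeon HD 3870": (775, 16, 2250, 256),
    "GeForce 8800 GT": (600, 56, 1800, 256),
    "GeForce 8800 GTS 640 MB": (513, 24, 1600, 320),
    "GeForce 8800 GTS 512 MB": (650, 64, 1940, 256),
    "GeForce 8800 GTX": (575, 32, 1800, 384),
}


def bandwidth_gb_s(effective_mhz: int, bus_bits: int) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    return bus_bits / 8 * effective_mhz * 1e6 / 1e9


def texel_rate_gtexels(core_mhz: int, tmus: int) -> float:
    """Peak texel fill rate in gigatexels per second."""
    return tmus * core_mhz / 1000


if __name__ == "__main__":
    for model, (core, tmus, mem, bus) in SPECS.items():
        print(f"{model:26s} {bandwidth_gb_s(mem, bus):6.1f} GB/s "
              f"{texel_rate_gtexels(core, tmus):5.1f} GTexel/s")
```

By this estimate the GeForce 8800 GTS 512 MB has slightly less peak bandwidth (about 62 GB/s) than the GeForce 8800 GTS 640 MB (64 GB/s) despite its faster memory, because its bus is 256 rather than 320 bits wide; its edge lies in a much higher texel rate.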

Take a look at the table above. These figures suggest a well-balanced, strong solution that can compete on equal terms with the current flagships of the Californian company. Essentially, this is a full-fledged G92 chip with raised clocks and very fast GDDR3 memory running at almost 2 GHz (effective). Incidentally, in our GeForce 8800 GT review we mentioned that the G92 was originally meant to have exactly 128 stream processors and 64 texture units. As you can see, the GT model received a somewhat cut-down chip for a reason: this made it possible to split the performance segment and create an even more capable product within it. Moreover, G92 production is now well established, and the number of chips able to run above 600 MHz is quite large. Even the first revisions of the G92 easily reached 700 MHz, and, as you might expect, that is no longer the limit. We will see this for ourselves later in the article.

The card we have is XFX's GeForce 8800 GTS 512 MB, and it is the subject of our close study today.

Now to the board itself. The XFX product follows the reference design, so everything said about it applies to most cards from other companies. After all, it is no secret that the overwhelming majority of manufacturers today base their designs on exactly what NVIDIA offers.

One cannot fail to notice that the GeForce 8800 GTS 512 MB borrows its printed circuit board from the GeForce 8800 GT. It is greatly simplified compared with G80-based cards, yet fully meets the requirements of the new architecture. Consequently, bringing the GeForce 8800 GTS 512 MB to market was hardly a complicated task: it uses the same PCB and almost the same chip as its GT-branded predecessor.

In terms of dimensions, the card is identical to the GeForce 8800 GTS 640 MB, as you can see from the photo.

NVIDIA saw fit to rework the cooling system substantially: it now looks more like the cooler of the classic GeForce 8800 GTS 640 MB than that of the GeForce 8800 GT.

The cooler's design is quite simple. It consists of aluminum fins cooled by a blower fan. The fins are pierced by copper heat pipes 6 mm in diameter. Contact with the chip is made through a copper base, from which the heat is carried to the fins and dissipated there.

Our GeForce 8800 GTS came with a revision A2 G92 chip made in Taiwan. The memory consists of eight Qimonda chips with a 1.0 ns access time.

Next, we installed the graphics card in a PCI-E slot. The board was detected without any problems and the drivers installed normally. There were no difficulties with utility software either: GPU-Z displayed all the necessary information.

The GPU runs at 678 MHz, the memory at 1970 MHz and the shader domain at 1700 MHz. So once again XFX has kept its habit of raising clocks above the reference values, although this time the increase is small. That said, the company usually offers many cards in the same series at different clocks, and some of them surely run considerably faster.

RivaTuner did not let us down either and displayed all the monitoring data.

Next, we decided to check how well the stock cooler does its job. The GeForce 8800 GT had serious problems here: its cooling system had a rather doubtful efficiency margin. That was, of course, enough to run the card at stock clocks, but anything more required replacing the cooler.

The GeForce 8800 GTS has no such problem. After prolonged load, the temperature rose to only 61 °C, a great result for such a powerful card. However, there is a fly in the ointment here too: the cooling system is very noisy. The noise becomes rather unpleasant as early as 55 °C, which surprised us, since the chip has barely warmed up at that point. Why on earth does the fan spin up so hard?

Here RivaTuner came to our rescue. Besides letting you overclock and tune the card, this utility can control the fan speed, provided, of course, that the board itself supports this.

We set the fan speed to 48%, at which the card runs completely silently and you can forget about its noise altogether.

These adjustments did, however, raise the temperature, this time to 75 °C. That is a bit high, but in our opinion it is a price worth paying to get rid of the howling fan.

We did not stop there, however, and decided to check how the card behaves with an aftermarket cooling system.

We chose the Thermaltake DuOrb, an efficient and stylish cooler that in its day handled the GeForce 8800 GTX well.

The temperature was only 1 °C lower than with the stock cooler under automatic fan control (under load, the stock fan automatically spun up to 78%). The difference in cooling efficiency is, of course, negligible, but the noise level dropped significantly. That said, the Thermaltake DuOrb cannot be called silent either.

Overall, NVIDIA's new stock cooler leaves only favorable impressions. It is very effective and, moreover, lets you set any fan speed, which will suit lovers of silence. Even at reduced speed it still copes with its task, although less effectively.

Finally, a word about overclocking potential, which turned out to be the same with the stock cooler and with the DuOrb. The core reached 805 MHz, the shader domain 1944 MHz and the memory 2200 MHz. It is good news that this top-end card, already clocked high out of the box, still has a solid margin in both the chip and the memory.
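The size of that margin is easier to judge as a percentage. A minimal sketch, taking the stock clocks from the test-participant list (650/1625/1940 MHz) and the overclocked results from the paragraph above; the helper name `headroom_percent` is hypothetical.

```python
def headroom_percent(stock_mhz: float, overclocked_mhz: float) -> float:
    """Overclocking gain relative to the stock frequency, in percent."""
    return (overclocked_mhz / stock_mhz - 1.0) * 100.0


# (stock, overclocked) pairs in MHz for the GeForce 8800 GTS 512 MB
gts512 = {
    "core": (650, 805),
    "shader": (1625, 1944),
    "memory": (1940, 2200),
}

for domain, (stock, oc) in gts512.items():
    print(f"{domain:>6}: {stock} -> {oc} MHz (+{headroom_percent(stock, oc):.1f}%)")
```

Run as is, this reports roughly +23.8% for the core, +19.6% for the shader domain and +13.4% for the memory.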

So much for the XFX GeForce 8800 GTS 512 MB. We could move straight on to the tests at this point, but we decided to look at one more interesting card, this time from the ATI camp, made by PowerColor.

The main feature of this card is its GDDR3 memory. Let me remind you that most companies ship the Radeon HD 3870 with GDDR4, as did the reference design. PowerColor, however, often takes non-standard routes and gives its products features that set them apart from the rest.

The memory type is not the only difference between the PowerColor board and other vendors' cards. Instead of the standard blower (itself a fairly efficient one), the company fitted an even more capable cooler: the ZeroTherm GX810.

The PCB has been simplified, mostly in the power-supply circuitry. This area should hold four ceramic capacitors, which on most boards sit in a row close to each other. The PowerColor board has only three: two in their usual places and one moved to the top.

An aluminum heatsink on the power circuitry somewhat softens this impression. These components can get seriously hot under load, so the extra cooling is welcome.

The memory chips are covered with a copper plate. At first glance this seems like good news, since the plate should help lower memory temperatures. In practice it is not that simple. First, we could not remove the plate on our sample; it appears to be firmly attached with hot-melt glue. With more force the plate could probably be removed, but we chose not to risk it. As a result, we never found out which memory chips the card uses.

The second drawback is that the cooler is designed to blow air onto the PCB as well, lowering its temperature, but the plate partially blocks this airflow. It does not block it completely, yet separate memory heatsinks would have been a much better option, or at the very least a thermal interface that does not glue the plate in place.

The cooler itself is not free of problems either. The ZeroTherm GX810 is certainly a good cooler with excellent efficiency, helped largely by its all-copper bowl-shaped heatsink with a long copper tube running through all the fins. The problem is that the board has a 2-pin fan connector, so the cooler always runs in a single mode: at maximum speed. From the moment the computer is switched on, the fan spins at full speed and makes a very serious noise. One could live with this if it happened only in 3D; after all, we have dealt with the even noisier Radeon X1800 and X1900. But a cooler this loud in 2D cannot help but cause irritation.

The only good news is that the problem can be solved. One option is an external fan-speed controller, which is what we tried: at minimum speed the cooler is silent, but its efficiency drops dramatically. The other option is to replace the cooler. We tried that too, with the same Thermaltake DuOrb, and found that it could not be installed.

The mounting holes do match, but the large capacitors on the PCB get in the way of the mounting hardware. So we end our look at the board on a rather negative note. Let's move on to how the card behaves once installed in a computer.

The core runs at 775 MHz, matching the reference card. The memory clock, however, is much lower than the usual value: only 1800 MHz. This is not particularly surprising, since we are dealing with GDDR3, so the figure is quite reasonable. Keep in mind, too, that GDDR4 has noticeably higher latencies, so overall performance should end up at roughly the same level. The difference will show only in bandwidth-hungry applications.
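To put the lower memory clock in numbers, peak bandwidth is the effective transfer rate multiplied by the bus width in bytes. The sketch assumes the RV670's 256-bit memory bus and uses 2250 MHz (effective) as the reference GDDR4 clock for comparison; neither figure appears in this review, and memory latency is not modeled at all.

```python
def bandwidth_gb_s(effective_mhz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: transfers per second * bytes per transfer."""
    return effective_mhz * 1e6 * (bus_width_bits / 8) / 1e9


# Assumption: 256-bit bus on the Radeon HD 3870; 2250 MHz effective is the
# reference GDDR4 clock, used here only as a point of comparison.
powercolor_gddr3 = bandwidth_gb_s(1800, 256)
reference_gddr4 = bandwidth_gb_s(2250, 256)

print(f"GDDR3 @ 1800 MHz: {powercolor_gddr3:.1f} GB/s")
print(f"GDDR4 @ 2250 MHz: {reference_gddr4:.1f} GB/s")
print(f"Bandwidth deficit: {(1 - powercolor_gddr3 / reference_gddr4) * 100:.0f}%")
```

Under these assumptions the GDDR3 card has about 57.6 GB/s versus 72.0 GB/s, a 20% deficit that only matters in applications limited by memory bandwidth.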

Note also that the board ships with BIOS 10.065. This is a fairly old firmware version that probably contains the PLL VCO bug, which prevents overclocking the card above 862 MHz. The fix is simple: update the BIOS.

RivaTuner reported all the frequency and temperature information without any problems.

Under load the temperature reached 55 °C, so the card did not get very hot. Which raises the question: with such a cooling margin, why run the fan only at maximum speed?

Now about overclocking. It is no secret that the RV670 has little frequency headroom, but our sample had almost none: we managed to raise the core clock by only 8 MHz, to 783 MHz. The memory did much better, reaching 2160 MHz, which suggests that the card most likely uses memory rated at 1.0 ns.
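The reasoning about the memory rating can be made explicit. This sketch assumes the common convention that a chip's ns rating is its minimum clock period, so the rated real clock is 1000 / t MHz and the effective DDR rate is twice that; the function names are ours, for illustration only.

```python
def rated_clock_mhz(access_time_ns: float) -> float:
    """Real clock implied by a chip's ns rating, treated as the clock period."""
    return 1000.0 / access_time_ns


def effective_ddr_mhz(access_time_ns: float) -> float:
    """DDR-type memory transfers data twice per clock, so double the real clock."""
    return 2.0 * rated_clock_mhz(access_time_ns)


achieved_mhz = 2160  # memory overclock reached on the PowerColor HD 3870
for t_ns in (1.2, 1.1, 1.0):
    rated = effective_ddr_mhz(t_ns)
    over = (achieved_mhz / rated - 1.0) * 100.0
    print(f"{t_ns} ns chips: rated {rated:.0f} MHz effective, "
          f"{achieved_mhz} MHz is {over:+.0f}% over spec")
```

Only with 1.0 ns chips (2000 MHz effective) does the 2160 MHz result amount to a modest overclock of about 8%; with slower ratings it would be a much larger jump, which is consistent with the conclusion above.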

Researching the performance of video cards

The test participants are listed below (frequencies in MHz: core/shader domain/memory for the GeForce cards, core/memory for the Radeon cards):

- GeForce 8800 GTS 512 MB (650/1625/1940);

- GeForce 8800 GTS 640 MB (513/1180/1600);

- Radeon HD 3870 DDR3 512MB (775/1800);

- Radeon HD 3850 512MB (670/1650).

Test stand

To find out the performance level of the video cards discussed above, we assembled a test system with the following configuration:

- Processor - Core 2 Duo E6550 (333 × 7, L2 = 4096 KB) @ (456 × 7 = 3192 MHz);

- Cooling system - Xigmatek HDT-S1283;

- Thermal interface - Arctic Cooling MX-2;

- RAM - Corsair TWIN2X6400C4-2048;

- Motherboard - ASUS P5B Deluxe (BIOS 1206);

- Power supply unit - Silverstone DA850 (850 W);

- Hard drive - Serial-ATA Western Digital 500 GB, 7200 rpm;

- Operating system - Windows XP Service Pack 2;

- Video driver - ForceWare 169.21 for NVIDIA cards, Catalyst 8.3 for AMD cards;

- Monitor - BenQ FP91GP.
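The processor frequency in the list above is simply the FSB clock multiplied by the CPU multiplier. A small sketch of that arithmetic, using the 333 MHz stock and 456 MHz overclocked FSB values from the list (the stock FSB is nominally 333.33 MHz, which is why the E6550 is sold as a 2.33 GHz part); the helper name is hypothetical.

```python
def cpu_clock_mhz(fsb_mhz: int, multiplier: int) -> int:
    """Core clock = front-side bus clock * CPU multiplier."""
    return fsb_mhz * multiplier


stock = cpu_clock_mhz(333, 7)        # Core 2 Duo E6550 at stock settings
overclocked = cpu_clock_mhz(456, 7)  # test-bench setting from the list
gain = (overclocked / stock - 1.0) * 100.0
print(f"stock {stock} MHz, overclocked {overclocked} MHz (+{gain:.1f}%)")
```

With the multiplier fixed at 7, all of the roughly 37% processor overclock comes from raising the FSB.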

Drivers used

The ATI Catalyst driver was configured as follows:

- Catalyst A.I.: Standard;

- MipMap Detail Level: High Quality;

- Wait for vertical refresh: Always off;

- Adaptive antialiasing: Off;

- Temporal antialiasing: Off.

The ForceWare driver, in turn, was used with the following settings:

- Texture Filtering: High quality;

- Anisotropic sample optimization: Off;

- Trilinear optimization: Off;

- Threaded optimization: On;

- Gamma correct antialiasing: On;

- Transparency antialiasing: Off;

- Vertical sync: Force off;

- Other settings: default.

Test packages used:

- Doom, build 1.1 - testing with the BenchemAll utility; we recorded our own demo on one of the game's levels;

- Prey, build 1.3 - testing with HOC Benchmark, HWzone demo; Boost Graphics off; image quality Highest; demo run twice;

- F.E.A.R., build 1.0.8 - testing with the built-in benchmark; soft shadows off;

- Need For Speed Carbon, build 1.4 - maximum quality settings; motion blur disabled; testing with Fraps;

- TimeShift, build 1.2 - two detail levels were forced: High Detail and Very High Detail; testing with Fraps;

- Unreal Tournament 3, build 1.2 - maximum quality settings; demo run on the VCTF-Suspense map;

- World In Conflict, build 1.007 - two presets were used, Medium and High; with High, the quality filters (anisotropic filtering and anti-aliasing) were disabled; testing with the built-in benchmark;

- Crysis, build 1.2 - testing in Medium and High modes with Fraps.

* The build (game version) is given after each game's name. To keep the tests as objective as possible, we use only games with the latest patches installed.

Test results

NVIDIA has done the same with its latest chip, the G80: the world's first GPU with a unified architecture and support for Microsoft's new API, DirectX 10.

Alongside the flagship GeForce 8800 GTX, NVIDIA released a cheaper version, the GeForce 8800 GTS. It differs from its older sibling in having fewer unified stream processors (96 versus 128) and less video memory (640 MB instead of the GTX's 768 MB). With fewer memory chips, the memory bus also became narrower: 320 bits versus 384 bits on the GTX. More detailed specifications of this graphics adapter are listed in the table.

The card that reached our test lab, and which we review today, is the ASUS EN8800GTS. ASUS is one of NVIDIA's largest and most successful partners and traditionally does not skimp on packaging and bundles. As the saying goes, you can't have too much of a good thing. The new card comes in an impressively large box.

Its front shows a character from Ghost Recon: Advanced Warfighter. It is not just an image: as you might have guessed, the game itself is included in the bundle. The back of the box lists brief product specifications.

ASUS considered this amount of information insufficient and turned the box into a kind of book with a fold-out cover.

To be fair, this approach has been around for quite a while and is by no means unique to ASUS. But everything is good in moderation, and the drive for maximum information turned out to be impractical. The slightest breeze flips the cover open. While transporting the card, we had to bend the retaining tab so that it actually did its job, and unfortunately, bending it can easily damage the packaging. Finally, the box is unreasonably large, which is inconvenient in itself.

Video adapter: packaging and close inspection

Let's move on to the bundle and the card itself. The adapter is packed in an antistatic bag and a foam container, which protect the board from both electrical and mechanical damage. The box contains discs, DVI-to-D-Sub adapters, VIVO and auxiliary power cables, and a disc case.

Among the included discs, the GTI Racing game and the 3DMark06 Advanced Edition benchmark stand out. This is the first time we have seen 3DMark06 bundled with a mass-market retail card, which will certainly appeal to users who are into benchmarking.

Now to the card itself. It uses a reference PCB and the reference cooling system, and differs from similar products only in the sticker with the manufacturer's logo, which continues the Ghost Recon theme.

The back of the PCB is also unremarkable: just a lot of SMD components and voltage-regulator parts.

Unlike the GeForce 8800 GTX, the GTS needs only one auxiliary power connector.

In addition, it is shorter than its older sibling, which will surely appeal to owners of small cases. There are no differences in cooling: the ASUS EN8800GTS, like the GeForce 8800 GTX, uses a cooler with a large blower-type fan. The heatsink consists of a copper base and aluminum fins, and heat is carried from the base to the fins partly through heat pipes, which improves the overall efficiency of the design. Hot air is exhausted outside the case, although some of it, alas, stays inside the PC because of openings in the cooler's shroud.

The resulting heat buildup is easy to deal with, though: for example, a low-speed 120 mm case fan noticeably improves temperatures around the motherboard.

In addition to the GPU, the cooler cools the memory chips and power-supply components, as well as the video output DAC (the NVIO chip).

This logic was moved out of the main GPU because the GPU's high clock speeds caused interference that could disrupt the display output.

Unfortunately, because the cooler has to handle all of these components, replacing it is more difficult, so NVIDIA's engineers simply could not afford to make the stock cooler a poor one. Let's take a look at the card in its "naked" form.

The PCB carries a revision A2 G80 chip and 640 MB of video memory made up of ten Samsung chips. The memory access time is 1.2 ns, which is slightly slower than the memory on the GeForce 8800 GTX.

Note that the board has two empty pads for memory chips. If chips were soldered there, the total memory would be 768 MB and the bus would be 384 bits wide. Alas, the card's developer considered this unnecessary; the fully populated layout is used only in professional Quadro cards.
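The link between chip count, capacity and bus width is simple arithmetic. This sketch assumes each GDDR3 chip on the board has a 32-bit interface and 512 Mbit (64 MB) of capacity, which is consistent with ten chips giving 640 MB on a 320-bit bus; the constant and function names are hypothetical.

```python
# Assumed per-chip figures for the GDDR3 chips on this board.
CHIP_WIDTH_BITS = 32
CHIP_CAPACITY_MB = 64


def memory_config(num_chips: int) -> tuple[int, int]:
    """Return (total capacity in MB, bus width in bits) for a given chip count."""
    return num_chips * CHIP_CAPACITY_MB, num_chips * CHIP_WIDTH_BITS


for label, chips in (("GeForce 8800 GTS, 10 chips", 10), ("all 12 pads populated", 12)):
    size_mb, width_bits = memory_config(chips)
    print(f"{label}: {size_mb} MB, {width_bits}-bit")
```

Filling the two empty pads adds 128 MB and 64 bits of bus width, which is exactly the gap between the GTS (640 MB, 320-bit) and the GTX (768 MB, 384-bit).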

Finally, note that the card has only one SLI connector, unlike the GeForce 8800 GTX, which has two.

Testing, analysis of results

The ASUS EN8800GTS was tested on a bench with the following configuration:

- processor - AMD Athlon 64 … @ … MHz (Venice);

- motherboard - ASUS A8N-SLI Deluxe, NVIDIA nForce 4 SLI chipset;

- RAM - 2×512 MB … @ … MHz, timings 3.0-4-4-9-1T.

RivaTuner confirmed that the card's specifications match the declared ones.

The GPU runs at 510/1190 MHz (core/shader domain) and the memory at 1600 MHz. The maximum temperature, reached after several runs of the Canyon Flight test from 3DMark06, was 76 °C, with the stock cooler's fan spinning at 1360 rpm.