Last year, ATI quietly dealt Nvidia a serious blow when it introduced a line of excellent graphics cards that were not only fast but also competitively priced.

Nvidia immediately had to cut prices sharply on its first GeForce GTX cards. But that was not the end of it: for the next year the two companies played a price-cutting game, which was very good news for consumers.

As the year drew to a close, Nvidia was still using the GeForce 9 series to fight in the mainstream segment and positioning the GTX as its high-performance solution. In the meantime, ATI unveiled some interesting Radeon HD 4800 series graphics cards. Among them was the Radeon HD 4870 X2, a dual-GPU card that snatched the performance crown from Nvidia's hands even though ATI was asking over $550 for it.

By pairing two Radeon HD 4870s with a gigabyte of memory each, ATI created a monster graphics card that, with the right drivers, was far faster than any single-GPU solution you could buy. Since its release in August last year, the Radeon HD 4870 X2 has gone unchallenged. But everything now suggests that this is about to change.

The new Nvidia GeForce GTX 295 dual-GPU graphics card carries 1792 MB of memory, the same as two GeForce GTX 260s combined. However, unlike the Radeon HD 4870 X2, which is essentially two Radeon HD 4870s glued together, the GeForce GTX 295 better fits the definition of a "hybrid", as it has characteristics of both the GeForce GTX 260 and the GeForce GTX 280.

The GeForce GTX 295 has the same core, memory, and shader clocks as the GeForce GTX 260. But the cores themselves are closer to the GeForce GTX 280 core, each with 240 SPUs and 80 TAUs, although with 28 ROPs like the GTX 260.

So what we end up with is an insanely fast graphics card that should be more than capable of outperforming the Radeon HD 4870 X2.

In the near term, the pricing situation will be delicate: the GeForce GTX 295 has started appearing on shelves at about $500, and price cuts from the competition were not long in coming. The Radeon HD 4870 X2 is already on sale for $450, which is about the same as buying a pair of Radeon HD 4870s with 1 GB of memory. Prices for the GeForce GTX 280, meanwhile, have plummeted from $450-500 a few weeks ago to $350, making this card a bargain.

This drop may be caused by the imminent arrival of its replacement, the slightly faster GeForce GTX 285, manufactured on a 55 nm process. Because of this, it should run cooler and overclock better. In any case, the GTX 285 isn't on sale yet, and lowering the price of the GTX 280 could simply be a way to clear stock.

But let's get back to the main attraction. How fast is the GeForce GTX 295? Let's find out.

GeForce GTX 295 board

In terms of dimensions, the GeForce GTX 295 is a monster: at 26.5 cm it is longer than a full-size ATX motherboard is wide. However, the GeForce GTX 280 and Radeon HD 4870 X2 are almost as long, so that's okay.

Although the GeForce GTX 295, like the Radeon HD 4870 X2, has two GPUs, its design is very different.

The Radeon HD 4870 X2 has both GPUs and 2 GB of memory on the same PCB, while the GeForce GTX 295 has two PCBs, each with a GT200B core and 896 MB of GDDR3 memory. This is similar to the GeForce 9800 GX2 design. The cooling system in the GeForce GTX 295 is based on the principle of a sandwich.

This means that Nvidia took the usual cooling system and placed it between two video cards - that is, one piece of aluminum cools both GPUs and all 1792 MB of memory. Nvidia claims that this method will lead to better cooling and stable operation at higher frequencies.

Such a complex design poses great difficulties for third-party manufacturers, so we are unlikely to see many aftermarket coolers on sale, if any at all, just as we did not see them for the GeForce 9800 GX2.

As mentioned several times, the GeForce GTX 295 comes with 1.79 GB of memory (896 MB per GPU) running at an effective 2000 MHz by default. Each GPU runs at 576 MHz.

This means that the GTX 295 cores are only 26 MHz behind the GTX 280 core, while the memory is 214 MHz slower, and the theoretical memory bandwidth for each GPU is 112 GB/s.
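The 112 GB/s figure follows directly from the bus width and the effective memory clock. The sketch below is a minimal illustration, assuming the 448-bit per-GPU bus and the 512-bit GTX 280 bus quoted elsewhere in this article, and treating 1 GB as 10^9 bytes. The function name is our own and does not come from any vendor tool.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, effective_mhz: float) -> float:
    """Peak theoretical memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8       # bus width in bytes
    transfers_per_second = effective_mhz * 1e6    # effective (DDR) data rate
    return bytes_per_transfer * transfers_per_second / 1e9


# GeForce GTX 295: 448-bit bus per GPU at an effective 2000 MHz
gtx295_per_gpu = memory_bandwidth_gb_s(448, 2000)
# GeForce GTX 280 for comparison: 512-bit bus, memory 214 MHz faster (2214 MHz)
gtx280 = memory_bandwidth_gb_s(512, 2214)

print(f"GTX 295, per GPU: {gtx295_per_gpu:.1f} GB/s")
print(f"GTX 280:          {gtx280:.1f} GB/s")
```

The same helper shows why the narrower bus matters: each GTX 295 GPU has noticeably less theoretical bandwidth than a GTX 280, even before the lower memory clock is taken into account.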

Core i7 system specifications

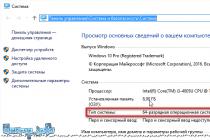

- Intel Core i7 965 Extreme Edition (overclocked to 3.70 GHz)

- 3x 2GB G.Skill DDR3 PC3-12800 (CAS 9-9-9-24)

- ASUS P6T Deluxe (Intel X58)

- OCZ GameXStream (800W)

- Seagate 500GB 7200-RPM (Serial ATA300)

- Radeon HD 4870 (1GB)

- Radeon HD 4870 X2 (2GB)

- GeForce GTX 260 (896MB)

- GeForce GTX 280 (1GB)

- GeForce GTX 260 (896MB) SLI

- GeForce GTX 295 (1792MB)

Software

- Microsoft Windows Vista Ultimate SP1 (64-bit)

- Nvidia Forceware 181.20 WHQL

- ATI Catalyst 8.12 WHQL (Hotfix)

In the 3DMark Vantage test at 2560x1600, the new GeForce GTX 295 is 13% faster than the Radeon HD 4870 X2. This synthetic test also shows that the GeForce GTX 295 is about 3% faster than a pair of GeForce GTX 260s running in SLI mode. In any case, scoring 6500 points in 3DMark Vantage at such a high resolution is very impressive.

Tests: Crysis, Crysis Warhead

In Crysis in DirectX 10 mode at 2560x1600, the GeForce GTX 295 still delivers an average of 39 frames per second, which is also very impressive. That said, the Radeon HD 4870 X2 manages 38 frames per second, as does the GeForce GTX 260 SLI.

The GeForce GTX 295 is an incredible 70% faster than the GeForce GTX 280 and twice as fast as a single-GPU Radeon HD 4870 with 1 GB of memory.

Interestingly, the same test in Crysis Warhead looks a bit different. Here the GeForce GTX 295 is 24% faster than the Radeon HD 4870 X2, but again only one frame per second ahead of the GeForce GTX 260 SLI.

Tests: Company of Heroes, Supreme Commander

For some reason, the Radeon HD 4800 series cards have never done well in Company of Heroes: Opposing Fronts in DirectX 10 mode.

The Radeon HD 4870 X2 is only 11% faster than the GeForce GTX 280 here, giving the GeForce GTX 295 a solid lead. The GeForce GTX 295 at 2560x1600 rendered 56% more frames than the Radeon HD 4870 X2. This card was the only one able to deliver 125 frames per second in Company of Heroes: Opposing Fronts at 2560x1600 with the highest possible graphics settings!

Supreme Commander, on the other hand, has never given the Radeon HD 4800 line any trouble. Here the Radeon HD 4870 X2 stays on top, delivering 89 fps at 2560x1600. The GeForce GTX 295 is 13% slower at 77 fps.
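Percentages like these depend on which card is taken as the baseline. The sketch below is a small illustration using the two Supreme Commander frame rates quoted above; the helper names are our own.

```python
def percent_slower(fps: float, reference_fps: float) -> float:
    """How much slower `fps` is, as a percentage of the faster reference."""
    return (reference_fps - fps) / reference_fps * 100


def percent_faster(fps: float, reference_fps: float) -> float:
    """How much faster `fps` is, as a percentage of the slower reference."""
    return (fps - reference_fps) / reference_fps * 100


radeon_4870_x2_fps = 89   # Supreme Commander, 2560x1600 (from the text above)
geforce_gtx_295_fps = 77

# The same 12 fps gap gives two different percentages depending on the baseline
gtx295_deficit = percent_slower(geforce_gtx_295_fps, radeon_4870_x2_fps)
x2_lead = percent_faster(radeon_4870_x2_fps, geforce_gtx_295_fps)

print(f"GTX 295 is {gtx295_deficit:.1f}% slower than the HD 4870 X2")
print(f"HD 4870 X2 is {x2_lead:.1f}% faster than the GTX 295")
```

The two results differ because the gap is divided by a different baseline in each case, which is worth keeping in mind when comparing "faster" and "slower" figures across the tests in this review.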

Tests: Enemy Territory: Quake Wars, Dead Space

Here's another game where the Radeon HD 4800 series excels - Enemy Territory: Quake Wars. Here the 4870 X2 manages an incredible 93fps at 2560x1600. Surprisingly, the Radeon HD 4870 with 1 GB of memory is next at 59 frames per second. Apparently, these ATI video cards work very well with games based on OpenGL.

But Nvidia's SLI is practically useless here: two GeForce GTX 260s were only 5 frames per second faster than one at 2560x1600. And the GeForce GTX 295 was even slower than the GeForce GTX 280.

Dead Space is a different story: this game is owned by Nvidia.

The Radeon HD 4870 X2 could only match the single GeForce GTX 260 at 2560x1600, while the GeForce GTX 280 was 15% faster. Two GeForce GTX 260 graphics cards in SLI provide an 83 percent increase over one. And the GeForce GTX 295 was slightly faster, hitting an insane 191fps at 2560x1600.

Tests: Fallout 3, Left 4 Dead

In Fallout 3, the Radeon HD 4870 X2 is ahead of the GeForce GTX 295 at 2560x1600 thanks to its larger memory, but only by a little.

The same can be said of the single GeForce GTX 260 and Radeon HD 4870: more memory allowed the Radeon HD 4870 to outperform the GTX 260 in Fallout 3 at 2560x1600.

Left 4 Dead is another fairly new game, and one that seems to sympathize with Nvidia's cards. At 2560x1600, the GeForce GTX 295 averaged 98 frames per second, which is 31% faster than the Radeon HD 4870 X2. What's more, the GeForce GTX 295 was an incredible 69% faster than the GeForce GTX 280.

Tests: Grand Theft Auto IV, World in Conflict

Grand Theft Auto IV is the newest of the games included in our testing, and it looks like ATI has yet to find out about its existence.

While Crossfire does work, both the Radeon HD 4870 and Radeon HD 4870 X2 performed terribly at 2560x1600, though not too bad at 1680x1050.

It is worth noting that the results of the GeForce GTX 260 and GTX 295 cannot be compared with those of the other cards, since these two cannot be set to maximum image quality due to insufficient memory. So beware: High quality textures were used only in the GeForce GTX 280, Radeon HD 4870 X2 and Radeon HD 4870 tests, while the GeForce GTX 260 and GTX 295 had to make do with Medium quality.

Performance in World in Conflict at 2560x1600 for both top cards is almost identical. The GeForce GTX 280 was able to deliver 41 frames per second and beat the two GeForce GTX 260s in SLI mode by an eight percent margin.

Tests: Unreal Tournament 3, Far Cry 2

The Unreal Tournament 3 results are also close, although the new GeForce GTX 295 outperforms the Radeon HD 4870 X2 by 10% at 2560x1600. It also beats the GeForce GTX 280 by an impressive 70%.

Far Cry 2 was one of the most important games of 2008. Here the GeForce GTX 295 beat the Radeon HD 4870 X2 by 8%. The dual-GPU GTX 295 is also 56% faster than the GeForce GTX 280 here.

Tests: Call of Duty 5: World at War

Call of Duty 5: World at War is another serious game released in 2008. Perhaps, for many, it became the game of the year after CoD4.

Finishing strong, the GeForce GTX 295 outperformed the Radeon HD 4870 X2 by 47% and the GeForce GTX 280 by 70% at 2560x1600.

Power consumption and temperatures

The power consumption of the GeForce GTX 295 is simply extreme, but what else would you expect from such a high-performance graphics card? Under load, a system with a GeForce GTX 295 draws 434 watts, which is not great for the electricity bill. Then again, two GeForce GTX 260s in SLI mode are not only slower but also consume even more power.

The GeForce GTX 295 draws slightly less under load than the Radeon HD 4870 X2, but slightly more at idle. What's more, the GeForce GTX 295 used only 7% more power under load than the GeForce GTX 280, but 34% more at idle.

In general, the GeForce GTX 295 requires a lot of power, but so do its rivals.

Even though it is a dual-GPU card, the GeForce GTX 295's load temperatures aren't that bad.

Although far from cool, the card reached only 75 degrees Celsius in the test, which made it cooler than the GeForce GTX 280 at 88 degrees Celsius.

On the subject of noise, the GeForce GTX 295 made itself heard as soon as we started playing. Its cooler is definitely louder than that of any other video card in this review.

Final thoughts. What to buy?

With the introduction of the Nvidia GeForce GTX 295, the balance has been restored once again: this card is ready to fight for the crown of the fastest in the world.

Nvidia's biggest plus is that it provides the best support for recently released games. Games such as Call of Duty 5, Dead Space and Left 4 Dead, for example, run much better on Nvidia graphics cards, and this helped the GeForce GTX 295 outperform the Radeon HD 4870 X2.

However, owners of the Radeon HD 4870 X2 need not despair: this card proved to be very powerful and competitive, losing to the new GeForce card in only a few games.

Like all multi-GPU cards, the GeForce GTX 295 relies heavily on the right drivers to run at its fastest. Every time a new game is released, the GeForce GTX 295 will most likely not handle it properly without updated ForceWare drivers. That said, Nvidia has recently shown that its driver team delivers on time, releasing new versions alongside all significant games.

When SLI doesn't work properly, the GeForce GTX 295 is only marginally faster than a single GeForce GTX 260. It doesn't run slower than a single GPU in this case, but it does mean a serious dip in performance.

The latest Nvidia ForceWare drivers (181.20, WHQL-certified) were released on January 8th. They added support for the GeForce GTX 295 and GTX 285. Although they mostly work fine, we still ran into some problems here and there that caused some games to crash.

Left 4 Dead crashed to the desktop quite often under 64-bit Windows Vista; the same thing happened with two GeForce GTX 260s, so it seems to be an SLI problem. Grand Theft Auto IV has the same problem, although crashes were fairly rare. World in Conflict and Company of Heroes: Opposing Fronts also crashed when changing the screen resolution. In other words, living with the GeForce GTX 295 is not entirely comfortable yet. We will keep an eye on driver releases and track these bugs.

Overall, we are very pleased with the results of the GeForce GTX 295, and also with its price. $500 is not exactly cheap, but for this level of performance it is acceptable. On the other hand, there is ATI/AMD, which is cutting prices very aggressively. The Radeon HD 4870 X2 has dropped to $450 and can sometimes be found for as little as $400. At that point, you will think twice before buying a new graphics card.

As we discussed when we announced the GTX 295, prices have yet to stabilize. The good news is that the first decline followed just a week after the card hit the shelves.

To sum up: if price is no object and you absolutely need the fastest video card on planet Earth, then the GeForce GTX 295 is the card for you.

Since the GeForce GTX 295 claims to be the fastest "single" video card, the following cards take part in this test: the GeForce GTX 280, a pair of such cards in SLI mode and, of course, the Radeon HD4870X2. To evaluate the efficiency of the "internal" SLI of the GeForce GTX 295, we also tested the performance of one "half" of this card by disabling SLI in the driver settings.

Test stand

In the charts, the results for the GeForce GTX 280, single and in SLI, are shown in dark green. The results for one "half" of the GeForce GTX 295 and for the card in normal mode are shown in light green. The results for the Radeon HD4870X2 are traditionally shown in red. The GeForce cards were tested with driver version 181.20, the Radeon HD4870X2 with Catalyst 8.12.

Testing in 3DMark Vantage Performance mode showed that the GeForce GTX 295 really does take the "leader's jersey" from the previous king of 3D graphics, the Radeon HD4870X2.

If we take the 3DMark score without the CPU contribution (the GPU Score), the new card's advantage over the Radeon HD4870X2 is about 9%. That is not much, but it is quite noticeable and cannot be written off as measurement error, especially when you consider that in the fight for the "crown" every percent counts.

In 3DMark Vantage with the High preset, the advantage of the GeForce GTX 295 grows to almost 30%, which is somewhat strange. It is quite possible that, thanks to its wider memory bus, the GeForce GTX 295 gains an additional advantage over the Radeon HD4870X2 when switching to a "heavy" graphics mode. Still, the 30% figure looks somewhat dubious.

The Extreme preset rounds off the 3DMark Vantage testing. Compared with Performance mode, the advantage of the GeForce GTX 295 over the Radeon HD4870X2 does grow as the preset gets heavier: in Extreme mode, the difference in GPU Score between these cards is about 12%.

In Crysis at low resolutions, we again see the GeForce GTX 295 outperforming the Radeon HD4870X2, by 25%.

As the resolution increases, the gap between the GeForce GTX 295 and the Radeon HD4870X2 narrows somewhat but remains noticeable: about 10% in favor of the new card.

The heaviest graphics mode in Crysis, Very High, is still a very tough test even for the most powerful video cards. In absolute terms, the results of the GeForce GTX 295 and Radeon HD4870X2 drop noticeably even at low resolutions, but the new card keeps an advantage of about 10%.

Increasing the screen resolution, however, brings a surprise. Among the "single" video cards, victory goes to the Radeon HD4870X2, although the GeForce GTX 295 is only slightly behind.

Nevertheless, in Far Cry 2 with Ultra quality settings, the GeForce GTX 295 takes convincing revenge on the Radeon HD4870X2, with an advantage of about 15-20%. Interestingly, in this test the new card comes close to the results of the GeForce GTX 280 in SLI mode, and this from a "single" video card!

In Race Driver: Grid, the new GeForce GTX 295 initially outperforms the Radeon HD4870X2 at low resolutions, but then the balance of power reverses and the Radeon HD4870X2 takes the lead. However, a difference of a few percent cannot be called significant, especially since all the cards deliver quite comfortable frame rates in this game.

GeForce GTX 295 performance scalability

Now, based on the results above, we can find out how efficiently the two "halves" of the GeForce GTX 295 work together in SLI. As a baseline, we take the results of one "half" of the GeForce GTX 295 (SLI disabled) and compare them with the performance of the same card in maximum performance mode (SLI enabled). The chart below shows the percentage performance gain when "internal" SLI is enabled on the GeForce GTX 295.

As you might expect, in the synthetic 3DMark Vantage tests the scaling of the "internal" SLI fluctuates between 80% and 90%, which is quite close to the theoretical maximum of 100%. In Crysis, SLI efficiency is slightly lower, from 65% to 75%, but this is still a very good result. Far Cry 2 responds very well to additional GPUs: here the gain from SLI at high resolutions exceeds 90%! Good results were also obtained in Race Driver: Grid, where SLI efficiency ranged from 73% to almost 90%.

Next, we'll look at scalability from a slightly different angle: how the performance of the GeForce GTX 295 changes if you install it in a motherboard that supports only PCI Express 1.0, whose bandwidth is half that of PCI Express 2.0. To do this, we taped over part of the PCI Express connector on the GeForce GTX 295 to make it work in PCI-E 2.0 x8 mode.
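Before moving on to the PCI Express test, here is how an SLI scaling percentage of the kind quoted above can be computed from a pair of frame rates. This is only a sketch of the arithmetic: the function name is our own, and the input values are made-up placeholders, not measurements from this review.

```python
def sli_gain_percent(single_gpu_fps: float, sli_fps: float) -> float:
    """Performance gain from enabling SLI, relative to one GPU.

    0% means no benefit at all; 100% is the theoretical maximum for
    two GPUs (a perfect doubling of the frame rate).
    """
    return (sli_fps - single_gpu_fps) / single_gpu_fps * 100


# Placeholder inputs for illustration only, NOT results from this review
example_single_gpu_fps = 30.0   # one "half" of the card, SLI disabled
example_sli_fps = 57.0          # both GPUs, SLI enabled

example_gain = sli_gain_percent(example_single_gpu_fps, example_sli_fps)
print(f"SLI gain: {example_gain:.0f}%")
```

With this definition, a result between 80% and 90% means the second GPU contributes almost as much as the first, which is how the 3DMark Vantage and Far Cry 2 figures should be read.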

This screenshot (we merged two images so that all the parameters of this NVIDIA control panel page are visible) shows that after this simple operation the video card uses half as many PCI Express 2.0 lanes, and the resulting total bandwidth equals that of a PCI Express 1.0 x16 interface. The performance results for the GeForce GTX 295 obtained after "cutting" the bus are shown as a percentage of the results obtained earlier at PCI Express 2.0 x16 speed.

As the diagram shows, the GeForce GTX 295 barely reacts to a halving of PCI Express bandwidth. This means that owners of motherboards with PCI Express 1.0 support who buy a GeForce GTX 295 will see virtually no performance loss, provided, of course, that the CPU is able to fully unlock the potential of this powerful graphics card.
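The equivalence between a PCI Express 2.0 x8 link and a PCI Express 1.0 x16 link, on which this test relies, can be checked with a few lines of arithmetic. The sketch below assumes the per-lane signalling rates and 8b/10b line encoding defined for these two PCI Express generations; the names are our own.

```python
# Raw signalling rate per lane, in mega-transfers per second
LANE_RATE_MT_S = {"1.0": 2500, "2.0": 5000}


def pcie_bandwidth_mb_s(generation: str, lanes: int) -> int:
    """Usable bandwidth per direction in MB/s, after 8b/10b encoding overhead."""
    payload_mbit_s = LANE_RATE_MT_S[generation] * 8 // 10  # 8 data bits per 10 line bits
    return payload_mbit_s // 8 * lanes                      # bits to bytes, times lane count


full_speed = pcie_bandwidth_mb_s("2.0", 16)   # the card's native mode
taped_over = pcie_bandwidth_mb_s("2.0", 8)    # half of the lanes disabled
old_board = pcie_bandwidth_mb_s("1.0", 16)    # PCI Express 1.0 motherboard

print(f"PCIe 2.0 x16: {full_speed} MB/s")
print(f"PCIe 2.0 x8:  {taped_over} MB/s")
print(f"PCIe 1.0 x16: {old_board} MB/s")
```

Since the taped-over card and a PCI Express 1.0 x16 slot end up with the same per-direction bandwidth, the x8 results are a reasonable stand-in for an older motherboard.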

Power Consumption Test

And, finally, here are the figures for the power consumed by the video cards under various load modes. The table below shows the power consumption results for the GeForce GTX 295 and Radeon HD4870X2.

As you can see, at idle the new card has very moderate power consumption, helped greatly by lowering the GPU frequency to 300 MHz and the video memory frequency to 100 MHz. But the main purpose of a gaming video card is to deliver high FPS at maximum graphics settings. As it turned out, the appetite of the GeForce GTX 295 is quite modest here, especially in comparison with the Radeon HD4870X2. However, the maximum-load test generated by FurMark in Xtreme Burning mode revealed the truly hot temper of the GeForce GTX 295, whose power consumption came close to 300 watts. Note that the GPU temperatures in this mode reached 93 and 96 degrees (the "leading" GPU runs a little hotter). At the same time, the cooler of the GeForce GTX 295 spun up to 3400 rpm, which is about 80% of the maximum. Of course, at this speed the cooler noise was quite noticeable. During testing in Far Cry 2, by contrast, the cooler speed did not exceed 2400 rpm and its noise was barely noticeable.

So that you can estimate the power supply requirements, we also present the total power consumption of our test bench, based on a Core i7 965 Extreme processor overclocked to 3.84 GHz.

As you can see, the minimum power supply requirements indicated on the packaging of the Zotac GeForce GTX 295 are not far from reality. In any case, it is well known that skimping on the power supply for a top-end configuration is a bad idea: it will cost you more in the end.

Conclusions

The battle for the "crown" of the fastest 3D accelerator continues! Today we got acquainted with another "two-headed" NVIDIA video card, which can rightly be called the fastest video accelerator to date. The new card demonstrated excellent performance in the heaviest modes, without an especially "hot" temper or much noise (unless you heat it up on purpose). Moreover, it can be used in Quad-SLI mode, which means we can expect even higher FPS and even more 3DMark points. But we will tell that story next time.

About six months ago, AMD released its most powerful graphics card, the AMD Radeon 4870x2, which for a long time was the leader among single video cards. It combined two RV770 chips, very high temperatures, fairly high noise levels and, most importantly, the highest gaming performance among single video cards. Only now, at the beginning of 2009, has NVIDIA finally responded with a new video card meant to become the new leader among single-card solutions. The new card is based on the 55 nm GT200 chip and has 2x240 stream processors, but there is one "but": a narrower memory bus (448 bits vs. 512 for the GTX280), fewer ROPs (28 vs. 32) and a lower memory frequency (2 GHz versus 2.21 GHz for the GTX280). Today I want to introduce you, dear readers, to the speed of the new flagship, its advantages and disadvantages and, of course, to compare its speed with its main competitor, the AMD 4870x2.

Review of the Palit GeForce GTX295 video card

The Palit GeForce GTX295 came into our test lab as an OEM product that only included a Windows XP/Vista driver disk. Let's look at the video card itself:

The video card is about 270 mm long, which is standard for top-level video cards, and is equipped with two DVI-I (dual-link) ports and an HDMI port, like its younger sister, the GeForce 9800GX2:

But there are some changes: the DVI-I ports are now located on the right, and the hot air exhaust grille has become narrower and longer, which should clearly improve cooling. Not all of the hot air is expelled from the case, however: most of it remains inside, as with the 9800GX2, and you can see the heatsink fins in the upper part of the video card.

The new fastest graphics accelerator, with a not-so-noisy cooling system and... a good bundle.

To "climb the mountain again", NVIDIA moved its top GT200 GPU to a finer manufacturing process, 55 nm. The new GT200b chip remained architecturally the same but runs cooler, which made it possible to release an accelerated version of the single-chip GTX280, the GTX285 (discussed in the ZOTAC GeForce GTX 285 AMP! Edition review). But this was not enough to dethrone the Radeon HD4870X2, so a dual-chip card based on two slightly cut-down GT200b chips was released alongside the GTX285. The resulting accelerator, whose name gives no hint of its "two-headedness", should provide unsurpassed performance.

To begin with, let's give a comparative table showing the history of the development of two-chip NVIDIA video cards and analogues from the direct competitor AMD-ATI.

| Graphics chip | GT200-400-B3 | | | |
| Core frequency, MHz | | | | |
| Frequency of unified processors, MHz | | | | |
| Number of unified processors | | | | |
| Number of texture addressing and filtering blocks | | | | |
| Number of ROPs | | | | |
| Memory size, MB | | | | |
| Effective memory frequency, MHz | 2000 | 2250 | 3600 | |
| Memory type | | | | |
| Memory bus width, bit | | | | |
| Technical process of production | | | | |
| Power consumption, W | up to 289 | | | |

Let's start by summarizing and refining the GTX 295 specification.

| Manufacturer | ZOTAC |
| Model | ZT-295E3MA-FSP (GTX 295) |
| Graphics core | NVIDIA GTX 295 (GT200-400-B3) |
| Pipelines | 480 unified stream processors |
| Supported APIs | DirectX 10.0 (Shader Model 4.0), OpenGL 2.1 |
| Core (shader domain) frequency, MHz | 576 (1242) |
| Memory size (type), MB | 1792 (GDDR3) |
| Memory frequency (effective), MHz | 999 (1998 DDR) |
| Memory bus, bit | 2x448 |
| Bus standard | PCI Express 2.0 x16 |
| Maximum resolution | Up to 2560x1600 in dual-link DVI mode; up to 2048x1536 @ 85 Hz over analog VGA; up to 1920x1080 (1080i) via HDMI |
| Outputs | 2x DVI-I (VGA via adapter), 1x HDMI |
| HDCP support, HD video decoding | Yes: H.264, VC-1, MPEG2 and WMV9 |
| Drivers | Latest drivers can be downloaded from the support site or the GPU manufacturer's website |
| Product webpage | http://www.zotac.com/ |

The video card comes in a cardboard box of exactly the same type as the GTX 285 AMP! Edition, but with a slightly different design in the same black and gold colors. The front, besides the GPU name, notes the 1792 MB of GDDR3 video memory and 896-bit memory bus, hardware support for the newfangled NVIDIA PhysX physics API and the built-in HDMI video interface.

The back of the package lists the general features of the graphics card with a brief explanation of the benefits of NVIDIA PhysX, NVIDIA SLI, NVIDIA CUDA and 3D Vision.

All this information is briefly duplicated on one side of the box.

The other side of the package lists the exact clock frequencies and the operating systems supported by the drivers.

A little lower, the minimum requirements for a system that will host the "gluttonous" GTX 295 are helpfully listed. The future owner of such a powerful graphics accelerator will need a power supply rated for at least 680 W that can deliver up to 46 A on the 12 V line. It must also have the required PCI Express power connectors: one 8-pin and one 6-pin.
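To put the packaging requirements in perspective, the 12 V current rating can be converted into watts. This is a rough sketch of the arithmetic only, assuming a nominal 12.0 V rail; the helper name is our own.

```python
def rail_power_watts(current_amps: float, voltage_volts: float = 12.0) -> float:
    """Power available on a supply rail: P = U * I."""
    return current_amps * voltage_volts


required_12v_power = rail_power_watts(46)          # 46 A on the 12 V line
share_of_psu = required_12v_power / 680 * 100      # relative to the 680 W rating

print(f"12 V rail: {required_12v_power:.0f} W")
print(f"Share of a 680 W power supply: {share_of_psu:.0f}%")
```

In other words, the requirement is less about the total wattage on the label and more about how much of it the power supply can actually deliver on the 12 V line, which feeds both the graphics card and the CPU.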

By the way, the new packaging is much more convenient to use than the previously used ones. It allows you to quickly access the contents, which is convenient both when you first build the system and when you change the configuration, for example, if you need some kind of adapter or maybe a driver disk when you reinstall the system.

The delivery set is quite sufficient for installing and using the accelerator, and in addition to the video adapter itself, it includes:

- disk with drivers and utilities;

- CD with bonus game "Race Driver: GRID";

- disc with 3DMARK VANTAGE;

- paper manuals for quick installation and use of the video card;

- branded sticker for the PC case;

- adapter from 2x peripheral power connectors to 6-pin PCI-Express;

- adapter from 2x peripheral power connectors to 8-pin PCI-Express;

- adapter from DVI to VGA;

- HDMI cable;

- cable for connecting the SPDIF output of a sound card to a video card.

The video card owes its unusual appearance to its design. This is striking at first glance: a virtually rectangular accelerator completely enclosed in a shroud, with a slot through which you can see the cooling system's turbine hidden somewhere in the depths.

The reverse side of the video card looks a little simpler. This is because, unlike the GeForce 9800 GX2, there is no second half of the shroud here. This would seem to make the GTX 295 easier to disassemble, but as it turned out later, things are not so simple or safe.

On top of the video card, almost at the very edge, there are additional power connectors, and due to the rather high power consumption, you need to use one 6-pin and one 8-pin PCI Express.
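The choice of one 6-pin and one 8-pin connector can be checked against the card's stated power consumption of up to 289 W. The sketch below assumes the nominal PCI Express limits of 75 W for the slot, 75 W for a 6-pin connector and 150 W for an 8-pin connector; these limits come from the PCI Express specifications rather than from this review, and the names are our own.

```python
SLOT_LIMIT_W = 75        # PCI Express x16 slot
SIX_PIN_LIMIT_W = 75     # 6-pin auxiliary connector
EIGHT_PIN_LIMIT_W = 150  # 8-pin auxiliary connector

CARD_MAX_POWER_W = 289   # "up to 289 W" from the comparison table


def board_power_budget(six_pin: int, eight_pin: int) -> int:
    """Nominal power available to a card from the slot plus its connectors."""
    return SLOT_LIMIT_W + six_pin * SIX_PIN_LIMIT_W + eight_pin * EIGHT_PIN_LIMIT_W


recommended = board_power_budget(six_pin=1, eight_pin=1)   # 6-pin + 8-pin
two_six_pin = board_power_budget(six_pin=2, eight_pin=0)   # two 6-pin plugs

for label, budget in (("6-pin + 8-pin", recommended), ("2x 6-pin", two_six_pin)):
    verdict = "enough" if budget >= CARD_MAX_POWER_W else "NOT enough"
    print(f"{label}: {budget} W, {verdict} for {CARD_MAX_POWER_W} W")
```

Under these assumptions, two 6-pin plugs fall well short of the card's maximum draw, while the 6-pin plus 8-pin combination covers it with a small margin.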

Next to the power connectors there is a SPDIF digital audio input, which should provide mixing of the audio stream with video data when using the HDMI interface.

Further, a cutout is made in the casing, through which heated air is ejected directly into the system unit. This will clearly worsen the climate inside it, because, given the power consumption of the video card, the cooling system will blow out a fairly hot stream.

And already near the panel of external interfaces there is an NV-Link connector, which will allow you to combine two such powerful accelerators in an uncompromising Quad SLI configuration.

Two DVIs are responsible for image output, which can be converted to VGA with the help of adapters, as well as a high-definition HDMI multimedia output. There are two LEDs next to the top video outputs. The first one displays the current power status - green if the level is sufficient and red if one of the connectors is not connected or an attempt was made to power the card from two 6-pin PCI Express. The second one indicates the DVI output to which the master monitor should be connected. A little lower is a ventilation grill through which part of the heated air is blown out.

However, disassembling the video card turned out to be not as difficult as it was in the case of the 9800 GX2. First you need to remove the top casing and ventilation grill from the interface panel, and then just unscrew a dozen and a half spring-loaded screws on each side of the video card.

Inside this "sandwich" there is a single cooling system, consisting of several copper heat sinks and copper heat pipes, on which a lot of aluminum plates are strung. All this is blown by a sufficiently powerful and, accordingly, not very quiet turbine.

On both sides of the cooling system there are printed circuit boards, each of which is one half of the card: it carries one GPU with its own video memory and power circuitry, as well as auxiliary chips. Note that the 6-pin auxiliary power connector is located on the half more densely populated with chips, which is logical, because up to 75 W more is supplied by the PCI Express slot located on the same board.

The boards are interconnected using special flexible SLI bridges. Both the bridges and their connectors are very delicate, so we do not recommend opening your expensive video card unless you really need to.

But bridges alone are not enough for the two parts of the GTX 295 to work in harmony with the rest of the system. Normally, the chipset, or the increasingly common NVIDIA nForce 200 bridge chip with PCI Express 2.0 support, is responsible for running an SLI pair of video cards. It is the NF200-P-SLI PCIe switch that is used in the GTX 295.

The boards also carry two NVIO2 chips that are responsible for the video outputs: the first one serves the pair of DVI ports, and the second one serves the single HDMI port.

It is thanks to the second chip that multi-channel audio from the S/PDIF input can be routed to the convenient HDMI output.

The video card is based on two NVIDIA GT200-400-B3 chips. Unfortunately, there was not enough room on the printed circuit boards for a few more memory chips, so compared with the full-fledged GT200-350 the memory bus was reduced from 512 to 448 bits, which in turn cut the number of raster units to 28, as in the GTX 260. On the other hand, there are 240 unified processors, as in a full-fledged GTX 285. The GPU itself runs at 576 MHz, and the shader domain at 1242 MHz.

The total of 1792 MB of video memory is made up of Hynix H5RS5223CFR-N0C chips, which at an operating voltage of 2.05 V have a cycle time of 1.0 ns, i.e. they are rated for an effective frequency of 2000 MHz. They run at exactly this frequency, leaving no reserve for overclockers.
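The relationship between cycle time and rated frequency can be checked with a few lines of Python. This is only a sketch: it assumes the 1.0 figure quoted for the memory chips is a cycle time in nanoseconds and that GDDR3 transfers data twice per clock; the function names are illustrative.

```python
def clock_mhz_from_cycle_ns(cycle_ns: float) -> float:
    """Convert a clock period in nanoseconds to a frequency in MHz."""
    # 1 / (1e-9 s) = 1e9 Hz = 1000 MHz, so MHz = 1000 / period_in_ns
    return 1000.0 / cycle_ns


def effective_mhz(clock_mhz: float, transfers_per_clock: int = 2) -> float:
    """Effective data rate of double-data-rate memory (assumed for GDDR3)."""
    return clock_mhz * transfers_per_clock


if __name__ == "__main__":
    base = clock_mhz_from_cycle_ns(1.0)   # assumed 1.0 ns cycle time
    print(base, effective_mhz(base))
```

Under these assumptions a 1.0 ns chip corresponds to a 1000 MHz clock and a 2000 MHz effective rate, which matches the frequency quoted for the card.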

Cooling System Efficiency

In a closed but well-ventilated test case, we were unable to get the blower to spin up to maximum speed automatically. Even at 75% of its capacity, though, the cooler was far from quiet. Under these conditions the GPU located closer to the blower heated up to 79°C, and the second one to 89°C. Given the overall power consumption, these values are still far from critical overheating, so we can note the good efficiency of the cooler. If only it were a little quieter...

The cooling system was not the only thing that disturbed acoustic comfort: the power regulator on the card was not quiet either, and its high-frequency whistle cannot be called pleasant. Admittedly, the video card became quieter in a closed case, and if you play with sound on, good speakers mask its noise completely. It will be worse if you decide to play in the evening with headphones while someone next to you is trying to rest. But that is exactly the price you pay for very high performance.

Testing

| Component | Model |
| --- | --- |
| CPU | Intel Core 2 Quad Q9550 (LGA775, 2.83GHz, L2 12MB) @3.8GHz |
| Motherboards | NForce 790i-Supreme (LGA775, nForce 790i Ultra SLI, DDR3, ATX); GIGABYTE GA-EP45T-DS3R (LGA775, Intel P45, DDR3, ATX) |
| Coolers | Noctua NH-U12P (LGA775, 54.33 CFM, 12.6-19.8 dB); Thermalright SI-128 (LGA775) + VIZO Starlet UVLED120 (62.7 CFM, 31.1 dB) |
| Additional cooling | VIZO Propeller PCL-201 (+1 slot, 16.0-28.3 CFM, 20 dB) |
| RAM | 2x DDR3-1333 1024MB Transcend PC3-10600 |
| Hard drive | Hitachi Deskstar HDS721616PLA380 (160 GB, 16 MB, SATA-300) |
| Power supplies | CHIEFTEC CFT-850G-DF (850W, 140+80mm, 25dB); Seasonic SS-650JT (650W, 120mm, 39.1dB) |
| Case | Spire SwordFin SP9007B (Full Tower) + Coolink SWiF 1202 (120×120x25, 53 CFM, 24 dB) |
| Monitor | Samsung SyncMaster 757MB (DynaFlat, 2048× [email protected] Hz, MPR II, TCO'99) |

With the same number of stream processors as a pair of GTX 280s, but otherwise closer to a 2-Way SLI pair of GTX 260s, the dual-chip video card lands between those two pairs in performance and only occasionally outperforms every possible competitor. But since it does not require SLI support from the motherboard and should cost less than two GTX 280s or GTX 285s, it can be considered a really promising high-performance solution for serious gamers.

Energy consumption

Our power supply tests have repeatedly shown that the system requirements for performance components often overstate the power actually needed, so we decided to check whether the GTX 295 really requires 680 W. At the same time, we compare the power consumption of this graphics accelerator with other video cards and their SLI and CrossFire pairs, in a system with a quad-core Intel Core 2 Quad Q9550 processor overclocked to 3.8 GHz.

| Power consumption, W |

We are grateful to ELKO Kiev, the official distributor of Zotac International in Ukraine, for the video cards provided for testing.

There was only one way to solve this problem: to create a dual-GPU video adapter based on the G200. Of course, this can be seen as a departure from Nvidia's professed principle of relying on the most powerful single-chip solutions, but war is war: no increase in the G200's frequency potential achieved by refining the manufacturing process would let it fight single-handedly against a pair of RV770s working together in CrossFire mode. The ATI doctrine proved its superiority in practice, so Nvidia had no choice but to cast aside its prejudices and try to beat its main competitor on its own field with its own tricks. Such a move was not possible earlier, since a dual-chip solution based on the 65nm version of the G200 would have been excessively hot and power-hungry, but the transition to the 55nm process made the undertaking feasible.

Yes, expensive high-end graphics cards bring their creators little income, since the bulk of sales comes from mass-market solutions costing up to $200, but they play another role that is no less important, and in some ways even more important, than simply making a profit. As already mentioned, the flagship defines the face of the squadron. Powerful solutions are a kind of battle banner that shows off the developer's technological capabilities, which plays an important role in attracting potential buyers and therefore ultimately affects the company's market share. Suffice it to recall ATI's position before the release of the Radeon HD 4000: the company had something to offer its customers in the inexpensive sector, and yet it was quickly losing ground in the market.

Although the G200, even in its 55nm variant, is not the most suitable GPU for a dual-GPU video adapter, from this point of view the release of the GeForce GTX 295 should be seen as a necessary step taken by Nvidia in response to the overly long dominance of the Radeon HD 4870 X2. While some observers believe that Nvidia's move away from betting on maximum-performance single-chip solutions is a temporary measure, we believe that the company will continue to pursue the new strategy, which has proven its superiority. This assumption is supported by our preliminary data on the next-generation graphics cores being developed by Nvidia.

But back to the goals of today's review. In it, we will try to cover Nvidia's new flagship comprehensively and pit it against the Radeon HD 4870 X2 in a number of popular games, in order to find out how strong a claim the GeForce GTX 295 has to the title of champion of 3D gaming graphics.

Nvidia GeForce GTX 295 vs. ATI Radeon HD 4870 X2: face to face

Nvidia lost the previous round, in which the GeForce GTX 280 and Radeon HD 4850 X2 fought in the ring. The new fighter has more impressive characteristics and claims absolute leadership, but it also has to face a very serious opponent in the form of the Radeon HD 4870 X2. Let's compare their specifications:

Both fighters look very impressive, but each in its own field. The Radeon HD 4870 X2 has a monstrous head start in computing power, although this is partly offset by the peculiarities of the superscalar architecture of its shader processors, while the GeForce GTX 295 takes revenge when it comes to texture and raster operations, at least in theory. Given the higher frequency of its execution units, this gives the new product every chance of winning in real conditions. A nice addition is support for PhysX hardware acceleration. The GeForce GTX 295 can either use the usual scheme with dynamic allocation of computing resources or assign the acceleration of physical effects to one GPU while keeping all the resources of the second for graphics.

However, there is one characteristic in which the GeForce GTX 295 is inferior to the Radeon HD 4870 X2: the amount of local video memory available to applications. It is 896 MB versus 1024 MB for the opponent, which can theoretically affect performance at high resolutions combined with full-screen anti-aliasing, especially in new games that place heavier demands on video memory. As for bandwidth, thanks to its 448-bit memory buses the GeForce GTX 295 barely lags behind the Radeon HD 4870 X2. Other shortcomings include the lack of DirectX 10.1 support, of an integrated sound core, and of a full-fledged hardware VC-1 decoder, but given that the GeForce GTX 295 claims to be the "fastest gaming card" and is clearly not intended for HTPC systems, these shortcomings are not too significant.

The GeForce GTX 295 inherited its implementation of SLI technology entirely from its predecessor, the GeForce 9800 GX2.

In general, the new product is clearly armed to the teeth and is determined to dethrone the reigning king of 3D, the Radeon HD 4870 X2. But before we find out how well Nvidia did what it set out to do, let's take a closer look at one of the GeForce GTX 295 variants. Today's guinea pig will be the EVGA GeForce GTX 295+.

EVGA GeForce GTX 295+: packaging and bundle

The entire series of EVGA products that use the Nvidia G200 as the graphics core has unified packaging, with only slight design differences from model to model. The EVGA GeForce GTX 295+ is no exception: it reaches store shelves in a standard black box of relatively small dimensions, decorated with a bright stripe and wrapped in plastic film.

There are few differences from the packaging of the EVGA GeForce GTX 260 Core 216 Superclocked video adapter we reviewed earlier: the stripe crossing the box has become dark red and gained a pattern of EVGA logos, and the lettering has changed from gray to silver. Nevertheless, the design has not lost its sense of rigor and solidity. Unfortunately, in addition to a very common mistake, namely specifying the wrong type of memory (DDR3 instead of GDDR3), another one has been added, and probably an intentional one. It concerns the amount of video memory: although the total is 1792 MB, in reality only half of it, 896 MB, is available to applications, since in modern homogeneous multi-GPU systems data is duplicated for each GPU.

On the back of the box, as before, there is a window through which you can see the area of the board with the serial number sticker, which serves as proof of warranty and also entitles the user to participate in the EVGA Step-Up Program. In this case, the latter looks rather strange: the EVGA GeForce GTX 295+ is by far the highest-performing gaming graphics adapter, and it is unlikely that within the 90 days from the date of purchase covered by the Step-Up program the company will be able to offer anything significantly more powerful as an upgrade. Hypothetically, the EVGA GeForce GTX 200 lineup could gain a more heavily overclocked GeForce GTX 295 model with, say, "Superclocked" in the name, but in the vast majority of cases factory overclocking does not bring a performance gain serious enough to be worth replacing an already purchased graphics card with the same model pre-overclocked.

The protective properties of the packaging are commendable: instead of the plastic container used in less expensive EVGA models, there is a polyurethane foam tray with cut-out recesses in which the video adapter and its accessories rest under lids. Given the very high price of the product, the accessory set is surprisingly austere:

DVI-I → D-Sub adapter

Adapter 2xPATA → one 6-pin PCIe

Adapter from two 6-pin PCIe to one 8-pin PCIe

S/PDIF connection cable

Quick Installation Guide

User guide

EVGA logo sticker

CD with drivers and utilities

Everything needed to install and fully operate the card as part of a powerful gaming platform is included in the kit, but nothing more: there are no extras in the box, such as the free copy of Far Cry 2 that came with the EVGA GeForce GTX 260 Core 216 Superclocked. The lack of a DVI-I → HDMI adapter could be explained by the card's dedicated HDMI port, but, looking ahead, such an adapter would have been useful given the way multi-monitor configurations are supported on Nvidia's dual-GPU graphics cards.

On the driver disk, in addition to the drivers themselves and the electronic version of the user manual, you can find useful utilities such as Fraps and EVGA Precision. The latter is a fairly handy tool for overclocking, fan speed control and graphics adapter temperature control.

Overall, the packaging of the EVGA GeForce GTX 295+ deserves high praise both for its design and for its protective properties, but the bundle is clearly unworthy of a product in the highest price category. Including one of the popular games would seem logical, especially since one of the EVGA products we reviewed earlier, despite its significantly lower cost, came with a full version of Far Cry 2.

EVGA GeForce GTX 295+ PCB design

The representative of the new, third generation of Nvidia dual-GPU graphics cards is very similar to the second-generation GeForce 9800 GX2, both externally and in the design solutions it uses.

However, while the competing Radeon HD 4870 X2 could easily do without a two-board layout, and AMD did exactly that, for the GeForce GTX 295 such a layout is a necessity. The reason is simple: placing two huge G200b chips on one board, together with the wiring for two 448-bit memory buses, would be impossible without a significant increase in the size of the whole card, and the 27 cm length of cards based on a single G200 chip is already the maximum that most modern ATX cases allow. Thus, the two-board layout in this case is dictated by technological necessity and not by engineering miscalculations on the part of the developers. Going forward, Nvidia's move to GDDR5 memory should pave the way for simpler, cheaper dual-GPU graphics cards.

As in the GeForce 9800 GX2, the boards of the GeForce GTX 295 face each other and share a single cooling system. This solution is rather controversial in terms of thermal efficiency, since even the 55nm G200 variant cannot be called cool, and the components of both boards will certainly heat each other through the common heatsink. But, as mentioned above, this arrangement is the only way to create a dual-GPU graphics adapter based on the G200 while staying within the allowable length and height. The developers of the GeForce GTX 295 should also be praised for the fact that the mounting plate is not cluttered with connectors, as it was in the GeForce 9800 GX2: almost the entire "second floor" is occupied by slots that exhaust heated air outside the system case, although part of the air is still released into the case.

Unlike the GeForce 9800 GX2, the GeForce GTX 295 is not too complicated to disassemble: it is enough to remove the protective cover and the mounting plate and unscrew all the screws that secure the boards to the cooling system, which is also the main load-bearing element. After that, all that remains is to carefully separate the structure into its component parts, overcoming the resistance of the thermal paste.

The extremely dense layout of both boards confirms that a single-board version of the GeForce GTX 295 is impossible, even though part of the area of each board is taken up by a shaped cutout that serves as an air intake. The boards communicate with each other through two flexible cables attached to the connectors on the left side.

Each of the GeForce GTX 295 boards carries an independent four-phase GPU power regulator controlled by a Volterra VT1165MF PWM controller. However, while the top board is powered entirely by an 8-pin PCI Express 2.0 connector rated for a load of up to 150 W, the bottom board clearly receives part of its power from the power section of the PCI Express x16 slot. The Anpec APW7142 controller seems to be responsible for powering the memory.

Next to the 6-pin power connector there is a 2-pin S/PDIF input connector, which is used to pass an external audio stream from the sound card to HDMI. Its presence on the bottom board is natural, since that is also where the HDMI connector is installed. It is interesting to note that the dedicated HDMI port required a second NVIO chip, so there are two of them in the GeForce GTX 295. However, simultaneous operation of all three interfaces is only supported when SLI is disabled, which is rather pointless, since in that case the GeForce GTX 295 loses its main advantage, high performance in games. Support for dual-monitor configurations in SLI mode has been implemented since version 180 of the GeForce drivers, but it is not as complete as in ATI CrossFireX technology: the secondary monitor can turn off if a game is running full screen on the primary one.

The nForce 200 chip is used as a bridge, which is also installed on some motherboards to support Nvidia SLI technology. It is a PCI Express 2.0 bus switch that supports 48 PCIe lanes and dual GPU direct mode.

Each of the GeForce GTX 295 boards has 14 Hynix H5RS5223CFR-N0C GDDR3 chips with a capacity of 512 Mbit (16M×32), designed for a supply voltage of 2.05 V and a frequency of 1000 (2000) MHz. This is the frequency at which the memory should run according to Nvidia's official specifications, but EVGA overclocked it slightly, so the memory in this version of the GeForce GTX 295 operates at 1026 (2052) MHz.

Of course, it would be tempting to equip the new flagship of the GeForce GTX 200 line with two 1 GB memory banks with a 512-bit bus each, but this would significantly complicate an already complicated design, so the developers compromised and gave their brainchild two banks of 896 MB each with a 448-bit bus. Thus, the total amount of video memory of the GeForce GTX 295 is 1792 MB, and the amount available to 3D applications, as in all homogeneous multi-GPU solutions, is half of the total. This should be enough even at 2560x1600, and yet in some cases a tandem of two separate GeForce GTX 280s can theoretically demonstrate higher performance thanks to its larger video memory. The peak bandwidth of the GeForce GTX 295 memory subsystem should be 224 GB/s, but in the EVGA version it is slightly higher, reaching 229.8 GB/s, which is almost equal to that of the Radeon HD 4870 X2 (230.4 GB/s).
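The capacity and bandwidth figures above can be reproduced from the chip count and clocks quoted in the text. The sketch below is a minimal illustration: the helper names are hypothetical, and it assumes that bandwidth is simply bus width in bytes times effective data rate, summed over both GPUs.

```python
def board_capacity_mb(chips: int, chip_mbit: int) -> float:
    """Capacity of one board in MB: chips x density in Mbit / 8 bits per byte."""
    return chips * chip_mbit / 8


def bus_width_bits(chips: int, chip_io_bits: int) -> int:
    """Memory bus width when every chip contributes its full I/O width."""
    return chips * chip_io_bits


def bandwidth_gb_s(bus_bits: int, effective_mhz: float, gpus: int = 1) -> float:
    """Peak bandwidth in GB/s (1 GB = 10^9 bytes), summed over all GPUs."""
    bytes_per_transfer = bus_bits / 8
    return bytes_per_transfer * effective_mhz * gpus / 1000.0


if __name__ == "__main__":
    # 14 chips of 512 Mbit with a 32-bit interface (16M x 32) per board
    print(board_capacity_mb(14, 512), bus_width_bits(14, 32))
    print(bandwidth_gb_s(448, 2000, gpus=2))   # reference GeForce GTX 295
    print(bandwidth_gb_s(448, 2052, gpus=2))   # EVGA GeForce GTX 295+
    print(bandwidth_gb_s(256, 3600, gpus=2))   # Radeon HD 4870 X2
```

With the numbers from the review this gives 896 MB and 448 bits per board, and roughly 224, 229.8 and 230.4 GB/s for the three cards.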

The GPUs are labeled G200-400-B3, meaning they are a newer revision of the G200b than the chips installed on the GeForce GTX 260 Core 216, which are labeled G200-103-B2. The chips were produced in week 49 of 2008, between November 30 and December 6. The configuration of the cores is atypical for G200-based solutions: although all 240 shader processors and 80 texture processors are active in each of the two GPUs, some RBE units are disabled, since the memory controller configuration is tightly tied to them. Of the eight raster operation sections present in the core, seven are active, which is equivalent to 28 RBEs per core. Thus, each "half" of the GeForce GTX 295 is a cross between the GeForce GTX 280 and the GeForce GTX 260 Core 216. The official clock speeds correspond to the latter, 576 MHz for the main domain and 1242 MHz for the shader domain, but in the version of the GeForce GTX 295 reviewed here EVGA raised them to 594 and 1296 MHz, respectively.
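The link between raster sections and the memory bus can be illustrated with a small calculation. This is a sketch under an assumption: it takes the full configuration quoted elsewhere in the review for the GeForce GTX 280 (32 RBEs, 512-bit bus) and assumes that both scale evenly across the eight sections; the names are illustrative.

```python
FULL_SECTIONS = 8     # raster operation sections in the full core
FULL_RBES = 32        # GeForce GTX 280 figure from the review
FULL_BUS_BITS = 512   # GeForce GTX 280 figure from the review


def section_config(active_sections: int) -> tuple[int, int]:
    """Return (RBEs, bus width in bits) for a number of active sections.

    Assumes every section carries an equal share of RBEs and bus width.
    """
    rbes_per_section = FULL_RBES // FULL_SECTIONS
    bits_per_section = FULL_BUS_BITS // FULL_SECTIONS
    return active_sections * rbes_per_section, active_sections * bits_per_section


if __name__ == "__main__":
    print(section_config(8))  # full core
    print(section_config(7))  # one section disabled, as on the GTX 295
```

Under that assumption, disabling one of eight sections yields 28 RBEs and a 448-bit bus, which is consistent with the figures given for each GPU of the GeForce GTX 295.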

The card is equipped with three connectors for monitors, two DVI-I and one HDMI. The first two are connected to the master GPU and can be used simultaneously in SLI mode, but the last one is connected to the slave core, so it can only be used when SLI is disabled. This is a rather strange technical solution that devalues the very presence of a dedicated HDMI port; for comparison, in the GeForce 9800 GX2 this port was connected to the master GPU, along with one of the DVI-I ports.

There is a blue LED next to one of the DVI ports, indicating that this port is the master port and should be used to connect the main monitor. Another LED, located at the HDMI connector, indicates the presence of problems with the card's power, and if there are none, it glows green. In addition, there is a single MIO port on the bottom board, which serves to organize two GeForce GTX 295s into a quad SLI system.

EVGA GeForce GTX 295+: Cooling Design

The cooling system of the GeForce GTX 295 is similar to that of the GeForce 9800 GX2 and, in addition to performing its main function, serves as the supporting element of the video adapter, since both boards are attached to it. Technically, the system is a kind of double-sided "sandwich". On its outer sides there are copper heat exchangers in direct contact with the graphics cores, as well as protrusions that remove heat from other elements that require cooling, and the filling is a thin-finned aluminum heatsink connected to the heat exchangers by flat heat pipes.

We did not dare to disassemble the cooling system, since its parts are firmly glued together, but the main structural features are visible in the pictures anyway. The heatsink fins are set at an angle to the mounting plate, so only part of the heated air leaves the system case through the slots in the plate, and the rest is released inside from the end of the card, where a special cutout is provided in the casing. However, compared to the GeForce 9800 GX2, the proportion of hot air exhausted outside has increased significantly. As already mentioned, the aluminum bases of the system have a number of protrusions that provide thermal contact with the memory chips, the NVIO chips, and the power elements of the GPU power regulators. In the first two cases the thermal interface consists of fibrous pads impregnated with white thermal paste, traditional for Nvidia solutions, and in the last case of a very thick gray thermoplastic mass. In addition to the two main heat exchangers that cool the graphics cores, there is a third, much smaller one that cools the PCI Express switch chip. Here, the dark gray thick thermal paste familiar from most modern graphics cards is used.

At the back of the "sandwich" there is a 5.76 W radial fan that blows air through the heatsink and is connected to the top board via a four-pin connector. Air is drawn in from both above and below the video adapter, through the corresponding openings in the printed circuit boards. The boards themselves are screwed to the bases of the cooling system with 13 spring-loaded screws each. From above, the cooling system is covered with a metal protective casing with a rubberized coating that is pleasant to the touch, but from below there is only a plastic overlay with an EVGA sticker covering the ferrite cores of the power regulator coils.

Overall, this design can hardly be called optimal, especially given the expected heat dissipation of the GeForce GTX 295 of around 220-240 W. However, like the video adapter as a whole, it is the product of a compromise that the developers were forced into by the need to fit within the given dimensions. Most likely, the cooling system will cope with its task, but it would be unreasonable to expect outstanding thermal or noise characteristics from it. This assumption will be put to an experimental test in the next chapter of our review.

EVGA GeForce GTX 295+ Power Consumption, Thermals, Overclocking and Noise

Unlike its ideological predecessors, which were only stopgap solutions, the GeForce GTX 295 seriously claims to be the flagship designed to demonstrate Nvidia's technical superiority, so its power consumption, temperature, and noise are of considerable interest. To find out how the new card fares, we carried out the corresponding measurements.

To study power consumption, we used a specially equipped test bench with the following configuration:

Processor Intel Pentium 4 560 (3.6 GHz, LGA775)

Motherboard DFI LANParty UT ICFX3200-T2R/G (ATI CrossFire Xpress 3200)

Memory PC2-5300 (2x512 MB, 667 MHz)

Western Digital Raptor WD360ADFD Hard Drive (36 GB)

Power supply Chieftec ATX-410-212 (410 W)

Microsoft Windows Vista Ultimate SP1 32-bit

Futuremark PCMark05 Build 1.2.0

Futuremark 3DMark06 Build 1.1.0

According to the standard methodology, the first SM3.0/HDR test of the 3DMark06 package was used to create a load in 3D mode, running in a loop at a resolution of 1600x1200 with forced FSAA 4x and AF 16x. Peak 2D mode was emulated using the 2D Transparent Windows test included in PCMark05. The last test is relevant in light of the fact that the Windows Vista Aero window interface uses the capabilities of the graphics processor.

The transfer of the G200 to the 55nm process had a very beneficial effect on its electrical characteristics: the maximum recorded power consumption of the GeForce GTX 295 did not exceed 215 W, which is significantly lower than the figure shown by the Radeon HD 4870 X2. Contrary to preliminary forecasts, the GeForce GTX 295 did not turn out to be a fire-breathing monster at all. This gives ATI a reason to think seriously about the efficiency of the technologies it uses, because it turns out that on the same process a pair of RV770s consumes significantly more in total than two G200b chips, and that despite having far fewer transistors!

As for the layout of the power lines, as expected, one of the GeForce GTX 295 boards is powered entirely by an 8-pin PCIe 2.0 connector, while the other uses a 6-pin PCIe 1.0 connector together with the power lines of the PCI Express x16 slot. Note that under load the chokes of the card's power regulators emitted a distinctly audible squeal, but we cannot yet say whether this behavior is typical of all GeForce GTX 295 cards or is a peculiarity of the sample we received. Regarding the requirements for the power supply, Nvidia recommends units rated at 680 W or more that can deliver a total load current of at least 46 A on the +12 V line. In light of our power consumption data for the GeForce GTX 295, these recommendations look frankly overstated, and for the new Nvidia card we can safely recommend any high-quality power supply rated at 500-550 W.
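A quick power budget puts these numbers side by side. The sketch below is illustrative only: it uses the 150 W rating of the 8-pin connector and the 75 W slot limit mentioned in the review, and assumes the commonly cited 75 W limit for a 6-pin PCIe connector; note also that the recommended 46 A applies to the whole system, not to the card alone.

```python
# Nominal delivery limits per power source, in watts.
# The 6-pin figure is an assumption based on the usual PCIe connector rating.
CONNECTOR_LIMITS_W = {
    "PCIe x16 slot": 75,
    "6-pin PCIe": 75,
    "8-pin PCIe": 150,
}


def connector_budget_w(limits: dict[str, int] = CONNECTOR_LIMITS_W) -> int:
    """Maximum power the card can draw within the nominal connector limits."""
    return sum(limits.values())


def rail_power_w(current_a: float, voltage_v: float = 12.0) -> float:
    """Power available on a rail: P = I x U."""
    return current_a * voltage_v


def headroom_w(budget_w: float, measured_w: float) -> float:
    """Unused part of a power budget at the measured peak draw."""
    return budget_w - measured_w


if __name__ == "__main__":
    budget = connector_budget_w()
    print(budget, headroom_w(budget, 215))  # card-level budget vs. measured peak
    print(rail_power_w(46))                 # recommended +12 V capability
```

With the measured 215 W peak, the card stays well inside its nominal connector budget, and the 46 A recommendation corresponds to 552 W on the +12 V line for the entire system.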

We already know that the 55nm version of the G200 has significantly better overclocking potential than the old one, so we tried to overclock our GeForce GTX 295. Even though EVGA had already given it a slight factory overclock, to 594/1296 MHz for the core and 1026 (2052) MHz for the memory, we managed to reach 650/1418 MHz and 1200 (2400) MHz, respectively. This is a pretty good result for a card equipped with two G200b chips cooled by a single heatsink, and not the largest one at that. Unfortunately, due to time constraints we could not run the overclocked card through all the tests and limited ourselves to Crysis and Far Cry 2, as well as the popular 3DMark Vantage test suite.
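To put the overclocking result in perspective, the relative gains can be computed from the frequencies quoted above. This is a simple sketch; the dictionary layout and function names are illustrative, and the memory values are the base (not doubled) clocks.

```python
REFERENCE = {"core": 576, "shader": 1242, "memory": 1000}  # Nvidia specification
FACTORY = {"core": 594, "shader": 1296, "memory": 1026}    # EVGA GeForce GTX 295+
MANUAL = {"core": 650, "shader": 1418, "memory": 1200}     # achieved in the review


def gain_percent(old_mhz: float, new_mhz: float) -> float:
    """Relative frequency increase in percent."""
    return (new_mhz - old_mhz) / old_mhz * 100.0


def gains(base: dict[str, int], target: dict[str, int]) -> dict[str, float]:
    """Per-domain gain of `target` over `base`, in percent."""
    return {domain: gain_percent(base[domain], target[domain]) for domain in base}


if __name__ == "__main__":
    for label, base, target in [
        ("factory vs reference", REFERENCE, FACTORY),
        ("manual vs factory", FACTORY, MANUAL),
        ("manual vs reference", REFERENCE, MANUAL),
    ]:
        print(label, {k: round(v, 1) for k, v in gains(base, target).items()})
```

The factory overclock amounts to only a few percent per domain, while the manual overclock adds roughly 9% on the core and shader domains and about 17% on the memory relative to the factory settings.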

During overclocking, the thermal regime of the card was controlled. As a result of measurements, the following data were obtained:

Miracles don't happen. Two cores are more than one, and they share a single heatsink, so their temperatures turned out higher than in single-GPU cards based on the G200 even in 2D mode, in which the clock speeds of both cores were automatically reduced to 300/600 MHz. Fairly high temperatures were also recorded in 3D mode, although readings of 82-86 degrees Celsius have long been nothing out of the ordinary for modern high-performance video adapters. The only concern is that the GeForce GTX 295 cooling system does not expel all of the hot air from the system case: some of it keeps circulating inside the computer, so before buying the card you should make sure the case is well ventilated.

Despite the tight layout, the GeForce GTX 295 demonstrated very good noise characteristics for its class:

The reference GeForce GTX 295 cooling system is not only quieter than that of the Radeon HD 4870 X2, which has received a lot of fair criticism, but also slightly better acoustically than the reference GeForce GTX 280 cooler. Yes, the card cannot be called completely silent, but, firstly, we could not make it raise the fan speed even after long testing, and secondly, the spectral composition of the noise is quite comfortable: it is perceived as a slight rush of air, while in the noise of the Radeon HD 4870 X2 the annoying buzz of the fan is clearly audible. As such, Nvidia continues to lead the way in developing quiet and reasonably efficient cooling solutions for its graphics cards. In addition to the disproportionately high power consumption of the RV770, ATI should think about this as well, since the reference coolers it currently uses cannot be called quiet by any stretch.

Test platform configuration and testing methodology

The EVGA GeForce GTX 295+ performance comparison study was conducted on a test platform with the following configuration:

Processor Intel Core i7-965 Extreme Edition (3.2 GHz, 6.4 GT/s QPI)

Motherboard Asus P6T Deluxe (Intel X58)

Memory Corsair XMS3-12800C9 (3x2 GB, 1333 MHz, 9-9-9-24, 2T)

Maxtor MaXLine III 7B250S0 hard drive (250 GB, SATA-150, 16 MB buffer)

Power supply Enermax Galaxy DXX EGX1000EWL (power 1 kW)

Dell 3007WFP Monitor (30”, maximum resolution [email protected] Hz)

Microsoft Windows Vista Ultimate SP1 64-bit

ATI Catalyst 8.12 for ATI Radeon HD

Nvidia GeForce 181.20 WHQL for Nvidia GeForce

Graphics card drivers have been tuned to provide the highest possible quality of texture filtering with minimal impact from default software optimizations. Transparent texture anti-aliasing was enabled, while multisampling was used for both architectures, since ATI solutions do not support supersampling for this function. As a result, the list of ATI Catalyst and Nvidia GeForce driver settings looks like this:

ATI Catalyst:

Smoothvision HD: Anti-Aliasing: Use application settings/Box Filter

Catalyst A.I. Standard

Mipmap Detail Level: High Quality

Wait for vertical refresh: Always Off

Enable Adaptive Anti-Aliasing: On/Quality

NVIDIA GeForce:

Texture filtering - Quality: High quality

Texture filtering - Trilinear optimization: Off

Texture filtering - Anisotropic sample optimization: Off

Vertical sync: Force off

Antialiasing - Gamma correction: On

Antialiasing - Transparency: Multisampling

Multi-display mixed-GPU acceleration: Multiple display performance mode

Set PhysX GPU acceleration: Enabled

Select the multi-GPU configuration: Enable multi-GPU mode

Other settings: default

The test suite has been revised somewhat to better reflect current realities. After the revision, it includes the following games and applications:

3D First Person Shooters:

Call of Duty: World at War

Crysis Warhead

Enemy Territory: Quake Wars

Far Cry 2

Left 4 Dead

S.T.A.L.K.E.R.: Clear Sky

3D Third Person Shooters:

Dead Space

Devil May Cry 4

RPG:

Fallout 3

Mass Effect

Simulators:

Race Driver: GRID

X³: Terran Conflict

Strategies:

Red Alert 3

World in Conflict

Synthetic tests:

Futuremark 3DMark06

Futuremark 3DMark Vantage

Each game in the test suite was configured for the highest possible level of detail, using only the tools available in the game itself to any ordinary user. This means we deliberately avoided editing configuration files by hand, since a player is not expected to know how to do that. Exceptions were made for some games where necessary; each exception is mentioned in the relevant section of the review.

In addition to the EVGA GeForce GTX 295+, the following graphics cards took part in the testing:

Nvidia GeForce GTX 280 (G200, 602/1296/2214 MHz, 240 SP, 80 TMU, 32 RBE, 512-bit memory bus, 1024 MB GDDR3)

Nvidia GeForce GTX 260 Core 216 (G200b, 576/1242/2000 MHz, 216 SP, 72 TMU, 28 RBE, 448-bit memory bus, 896 MB GDDR3)

ATI Radeon HD 4870 X2 (2xRV770, 750/750/3600 MHz, 1600 SP, 80 TMU, 32 RBE, 2x256-bit memory bus, 2x1024 MB GDDR5)

The first two cards in the list were also tested in SLI mode, and the Radeon HD 4870 X2 was paired with a single Radeon HD 4870 1GB to build and test a Radeon HD 4870 3-way CrossFireX system.
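The bus width and memory clock listed for each card determine its theoretical peak memory bandwidth per GPU. A minimal sketch of that arithmetic follows, assuming the third clock figure in each specification line is the effective memory data rate in MHz; the function name is ours and does not come from any vendor tool.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, effective_mhz: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_mhz * 1e6 / 1e9


# Bus width (bits) and effective memory clock (MHz) taken from the card list.
# Assumption: the last clock value is the effective data rate.
cards = {
    "GeForce GTX 280": (512, 2214),
    "GeForce GTX 260 Core 216": (448, 2000),
    "Radeon HD 4870 X2 (per GPU)": (256, 3600),
}

for name, (bus_bits, mhz) in cards.items():
    print(f"{name}: {peak_bandwidth_gb_s(bus_bits, mhz):.1f} GB/s")
```

These are peak figures only; the bandwidth actually achieved in a game depends on access patterns and on the driver.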

Testing was carried out at 1280x1024, 1680x1050, 1920x1200 and 2560x1600. Wherever possible, standard 16x anisotropic filtering was combined with 4x MSAA anti-aliasing. Anti-aliasing was enabled through the game's own settings or, where the game offered none, forced through the ATI Catalyst and Nvidia GeForce driver settings. By popular demand from readers, some games were additionally tested with forced CSAA 16xQ anti-aliasing on Nvidia solutions and CFAA 8x + Edge-detect Filter on ATI solutions. Both modes use 8 color samples per pixel, but the Nvidia algorithm uses twice as many coverage samples, while the ATI algorithm applies an additional edge-smoothing filter, which the company says makes it equivalent to 24x MSAA.

Performance data was collected with the tools built into each game, using our own recorded test demos wherever possible. Whenever possible, we recorded not only the average but also the minimum performance. In all other cases, the Fraps 2.9.8 utility was used in manual mode, with three test passes and the final result averaged across them.
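The three-pass averaging used for the manual Fraps runs can be sketched as follows. This is only an illustration: the function name and the sample figures are hypothetical, and averaging the per-pass minimum in the same way as the average is our assumption rather than something the methodology spells out.

```python
from statistics import mean


def summarize_passes(passes):
    """Average several test passes.

    passes: list of (average_fps, minimum_fps) tuples, one per pass.
    Returns (mean of averages, mean of minimums), rounded to 0.1 fps.
    """
    avg_fps = mean(p[0] for p in passes)
    min_fps = mean(p[1] for p in passes)
    return round(avg_fps, 1), round(min_fps, 1)


# Hypothetical numbers for illustration only; these are not measured results.
example_passes = [(60.0, 40.0), (62.0, 42.0), (64.0, 44.0)]
print(summarize_passes(example_passes))
```

Averaging several passes reduces the influence of a single unlucky run, which matters most for the minimum frame rate, since one stutter can dominate it.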

Game Tests: Call of Duty: World at War

All the participants except the GeForce GTX 280 are so powerful that they easily hit the performance ceiling in this game. Only at 2560x1600 do we get meaningful data, which shows that the ATI Radeon HD 4870 X2 cannot hold its own against its new dual-GPU rival: to compete successfully, it needs help in the form of another RV770 core.

With extreme anti-aliasing modes, Nvidia solutions have an obvious advantage in the less resource-intensive CSAA 16xQ algorithm, which uses 16 coverage samples but only 8 color samples, while ATI's CFAA 8x + Edge-detect Filter algorithm puts an additional load on the GPU in the form of an edge-smoothing filter. As a result, the Radeon HD 4870 X2 shows the worst result of all the cards tested in these modes. Moreover, the usefulness of extreme FSAA modes is limited to 1280x1024, and the gain in image quality is so meager that you would need a microscope to see it.

Screenshot comparison, Nvidia GeForce GTX 295: MSAA 4x vs. CSAA 16xQ

Screenshot comparison, ATI Radeon HD 4870 X2: MSAA 4x vs. CFAA 8x + Edge-detect

The verdict is simple and logical: a minimal gain in the smoothing of fine details is clearly not worth such a monstrous drop in performance.

Game Tests: Crysis Warhead

Nvidia's new dual-GPU flagship leads confidently at all resolutions, trailing only slightly behind the much bulkier and hotter GeForce GTX 280 SLI tandem. At 2560x1600, however, what we feared does happen: 896 MB of local video memory per GPU is not enough for the GeForce GTX 295. Still, the overall results at this resolution are so low that the loss is not critical and is of purely theoretical interest.

Game Tests: Enemy Territory: Quake Wars

ET: Quake Wars caps the average frame rate at 30 fps, since all events are synchronized at 30 Hz in multiplayer. To obtain more complete data on graphics card performance in Quake Wars, this cap was disabled via the game console. Since the test uses the game's built-in tools, there is no information on minimum performance.

Here the GeForce GTX 295 is again somewhat slower than the GeForce GTX 280 SLI tandem, which can be explained by its lower memory bandwidth combined with the game's use of large, high-resolution textures. Nevertheless, it outperforms the Radeon HD 4870 X2, especially at 2560x1600, where the new card's lead reaches 22%.

But the attempt to use extreme anti-aliasing modes exposes a failure of Nvidia cards, and in an area where they have traditionally been strong, namely games that use the OpenGL API. While at 1280x1024 the picture differs little from what we see with ordinary MSAA 4x, at higher resolutions ATI's solutions take a sharp lead. The strange behavior of the GeForce GTX 295 cannot be explained by a lack of video memory, since there was no significant difference in performance between it and the GeForce GTX 280 SLI pair. As for image quality, the differences are almost invisible to the naked eye, especially at 1680x1050 and above, so there is practically no point in using the extreme modes.

Game Tests: Far Cry 2

The GeForce GTX 295 behaves as predicted: its performance is roughly on par with the GeForce GTX 260 Core 216 SLI tandem and slightly below that of the GeForce GTX 280 SLI tandem. The advantage over the Radeon HD 4870 X2 is small and does not exceed 12-15%.

Using extreme anti-aliasing modes does not immediately push performance below acceptable levels, but it significantly lowers the minimum performance and makes 2560x1600 unusable.

Screenshot comparison, Nvidia GeForce GTX 295: MSAA 4x vs. CSAA 16xQ

Screenshot comparison, ATI Radeon HD 4870 X2: MSAA 4x vs. CFAA 8x + Edge-detect

As with Call of Duty: World at War, the screenshots show no significant improvement in image quality. There is a difference, but you have to look for it under magnification with special utilities such as The Compressonator; in motion, the improvement in anti-aliasing quality is simply impossible to notice. This is another argument that the extreme modes so actively promoted by the leading graphics developers are more of a publicity stunt than a practical way to improve image quality.

Game Tests: Left 4 Dead

The game is based on the Source engine and has built-in testing tools, which, unfortunately, do not provide information about the minimum performance.

Due to the use of the Source engine, the game is quite modest in its requirements, and all test participants can easily provide a comfortable level of performance in it at resolutions up to 2560x1600 inclusive. Only a single GeForce GTX 280 stands out from the overall picture. Note that the factory overclocking of the EVGA GeForce GTX 295+ allowed it to slightly outperform the GeForce GTX 280 SLI tandem.

The high-quality (according to the developers) anti-aliasing modes give more interesting results: firstly, ATI solutions lose ground, and secondly, at 2560x1600 the GeForce GTX 295 is noticeably slower than the GeForce GTX 280 SLI pair because less video memory is available to applications, 896 MB versus 1024 MB. The difference in image quality is even less noticeable than in the previous tests, since Left 4 Dead is a survival shooter and most of its scenes are quite dark.
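To give a rough sense of why high sample counts at 2560x1600 eat into video memory, here is a back-of-the-envelope estimate of the size of a multisampled render target. It assumes 4 bytes of color and 4 bytes of depth/stencil per sample and ignores compression, textures, geometry, driver overhead and the more compact storage of CSAA coverage samples, so the real footprint on either vendor's hardware will differ. The function name is ours.

```python
def msaa_target_mib(width, height, color_samples, bytes_color=4, bytes_depth=4):
    """Rough size of one multisampled color+depth render target, in MiB.

    Assumption: every sample stores full color and depth/stencil values,
    with no compression. Real GPUs and drivers will differ.
    """
    bytes_per_pixel = color_samples * (bytes_color + bytes_depth)
    return width * height * bytes_per_pixel / 2**20


for samples in (4, 8):
    size = msaa_target_mib(2560, 1600, samples)
    print(f"2560x1600, {samples} color samples: about {size:.0f} MiB")
```

Even under these simplified assumptions, doubling the number of color samples doubles the render target, which leaves correspondingly less of a fixed memory pool for textures.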

Game Tests: S.T.A.L.K.E.R.: Clear Sky

To ensure an acceptable level of performance in this game, we decided not to use FSAA or resource-intensive options such as "Sun rays", "Wet surfaces" and "Volumetric smoke". Testing was done in the "Enhanced full dynamic lighting" (DX10) mode; for ATI cards, the DirectX 10.1 mode was additionally enabled.

Thanks to the concessions described above, all participants except a single GeForce GTX 280 delivered an acceptable level of performance, and Nvidia solutions showed slightly higher minimum performance at resolutions up to and including 1920x1200. At 2560x1600, however, ATI solutions took the lead on this metric, and the Radeon HD 4870 3-way CrossFireX system even set a record of sorts, outperforming its rivals by more than 25%.

Game Tests: Dead Space

Unlike the Radeon HD 4870 X2, the GeForce GTX 295 has no problems with multi-GPU support in this game, but it does not scale particularly well compared to a single GeForce GTX 280 either. It only remains to wait for ATI's multi-GPU graphics solutions to receive similar support, although the wait is practically painless, since even in their current state they deliver acceptable performance at 2560x1600.

Game Tests: Devil May Cry 4

All multi-GPU graphics solutions scale superbly in this game, and ATI's three-GPU system naturally takes the lead, since it has the most GPUs. The GeForce GTX 295 outperforms the Radeon HD 4870 X2 by 11-26%, depending on the resolution, but with frame rates that never fall below 70 frames per second, this difference looks insignificant and certainly does not affect the comfort of gameplay.

High-quality anti-aliasing modes seriously increase the load on the graphics subsystem, but only the Radeon HD 4870 X2 loses noticeable ground, perhaps because it has only 32 RBEs, while its rival has 56. Nevertheless, at 2560x1600 the ATI solution still provides an acceptable level of performance, though only just. The GeForce GTX 295, on the other hand, feels at ease, but this is largely due to its less resource-intensive anti-aliasing algorithm. In both cases, however, improvements in image quality are almost impossible to notice, since the game is very fast-paced.

Game Tests: Fallout 3

Starting at 1920x1200, ATI's solutions show a certain advantage, and at 2560x1600 it is beyond doubt. Nevertheless, the GeForce GTX 295 acquits itself well, trailing the GeForce GTX 280 SLI tandem only marginally in performance while significantly surpassing it in other consumer qualities, including cost.

Game Tests: Mass Effect

At 1280x1024, the advantage of the GeForce GTX 295 over the Radeon HD 4870 X2 is almost imperceptible; at the next two resolutions it grows to 14% and then to 26%, but at 2560x1600 it drops to almost zero again, and the Radeon HD 4870 3-way CrossFireX system takes the lead. In the latter case, however, none of the participants can provide the minimum acceptable performance.
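Percentage leads like the ones quoted throughout these tests are relative to the slower card's result. A minimal sketch of the calculation is below; the function name and the frame rates are hypothetical and serve only to show the arithmetic.

```python
def advantage_percent(fps_a: float, fps_b: float) -> float:
    """How much faster card A is than card B, as a percentage of B's result."""
    return (fps_a / fps_b - 1.0) * 100.0


# Hypothetical frame rates for illustration only; these are not measured results.
card_a_fps, card_b_fps = 63.0, 50.0
print(f"A leads B by {advantage_percent(card_a_fps, card_b_fps):.1f}%")
print(f"B trails A by {-advantage_percent(card_b_fps, card_a_fps):.1f}%")
```

Note that the figure is not symmetric: a 26% lead for one card corresponds to a deficit of roughly 20.6% for the other, so it matters which card is taken as the baseline.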

Game Tests: Race Driver: GRID

Throughout the test, ATI solutions retain their advantage in average speed, but up to 2560x1600 their minimum performance is almost the same as that of Nvidia solutions. With overall performance in the region of 100-140 frames per second, it would be ridiculous to claim that a player can feel a difference of 10-20 frames per second. Even at 2560x1600, the minimum performance of the multi-GPU solutions does not fall below 60 frames per second, which is an excellent result, especially against the backdrop of the GeForce GTX 280, one of the most powerful single-GPU graphics cards. It seems that multi-GPU solutions have finally won out, at least in the segment of the fastest gaming cards.

Game Tests: X³: Terran Conflict

As noted earlier, the game favors ATI's architecture and, at the same time, is not too fond of Nvidia solutions: unlike in most other tests, in X³ a single GeForce GTX 280 is practically no slower than the multi-GPU solutions. Of all the Nvidia solutions in this review, only the new GeForce GTX 295 and the GeForce GTX 280 SLI tandem can provide an acceptable level of minimum performance at 1680x1050.

Game Tests: Red Alert 3

The game has a frame rate cap that cannot be disabled, fixed at around 30 frames per second.

Albeit by brute force, the GeForce GTX 295 managed to overcome the performance problem that Nvidia solutions have in Red Alert 3. At least with FSAA 4x, speed remains acceptable at resolutions up to and including 1920x1200. Notably, SLI tandems built from discrete cards cannot manage this, even though they exchange data through the same nForce 200 switch, only located on the motherboard.

Game Tests: World in Conflict

All dual-GPU solutions from Nvidia demonstrate almost the same level of performance and are noticeably ahead of their rivals from the ATI camp. Only at 2560x1600 does the Radeon HD 4870 3-way CrossFireX platform take the lead, becoming the only one capable of providing an acceptable minimum speed at this resolution.

Synthetic benchmarks: Futuremark 3DMark06