The Intel Pentium 4 is considered the manufacturer's most successful product line, because over many years of service it has proved its right to exist. In this article the reader will find out why these processors are so good and learn their technical characteristics, while tests and reviews will help a potential buyer make a choice on the computer components market.

Race for frequencies

As history shows, processor generations succeeded one another because manufacturers were racing for clock frequency. New technologies were introduced too, but they stayed in the background. Both users and manufacturers understood that the day would come when the practical frequency ceiling would be reached, and it came with the fourth generation of Intel Pentium. About 4 GHz per core became the limit: the die needed too much electricity to run, and the power it dissipated as heat put the stability of the whole system in doubt.

All subsequent models, as well as competitors' counterparts, were produced with clock speeds under 4 GHz. At this point manufacturers turned to multi-core designs and to special instruction sets that speed up data processing as a whole.

The first pancake is lumpy

In high technology, a market monopoly rarely leads to anything good, and many electronics manufacturers have learned this from their own experience (DVD-R discs gave way to DVD+R, and the ZIP drive sank into oblivion altogether). Nevertheless, Intel and Rambus decided to try to make good money and released a promising joint product. That is how the first Pentium 4 appeared on the market: it used Socket 423 and communicated with Rambus RAM at very high speed. Naturally, many users wanted to own the fastest computer in the world.

The two companies were prevented from becoming monopolists by the arrival of dual-channel memory mode. Tests of the new technology showed a tremendous performance gain, and every manufacturer of computer components immediately took an interest in it. The first Pentium 4, along with Socket 423, became history, because the manufacturer had given the platform no upgrade path. Components for this platform are still in demand today: as it turned out, a number of state-owned enterprises had managed to buy these ultra-fast computers, and replacing components is far cheaper than a full upgrade.

A step in the right direction

Many personal computer owners who do not play games but work with documents and view multimedia content still have an Intel Pentium 4 (Socket 478) installed. Numerous tests by professionals and enthusiasts show that this platform is powerful enough for all the tasks of an ordinary user.

This platform uses two core revisions: Willamette and Prescott. Judging by the specifications, the differences between them are small; the later revision adds support for 13 new data-processing instructions, known as SSE3. Clock frequencies range from 1.4 to 3.4 GHz, which meets the requirements of the market. The manufacturer also took the risk of introducing an additional branch of Socket 478 processors intended to attract gamers and overclockers. The new line was named Intel Pentium 4 Extreme Edition.

Advantages and disadvantages of Socket 478

Judging by reviews from IT specialists, the Intel Pentium 4 on the Socket 478 platform is still in fairly high demand. Not every computer owner can afford an upgrade that requires buying three basic components (motherboard, processor and RAM). For most tasks, installing a more powerful processor is enough to improve the performance of the whole system. Fortunately, the secondary market is full of them, because a processor lasts much longer than a motherboard.

If you do upgrade, look at the most powerful representatives of this category, the Extreme Edition models, which still show decent results in performance tests. The drawback of such powerful processors is high power dissipation, which calls for good cooling, so the cost of a decent cooler is added to the user's expenses.

Processors at a low price

The reader has surely come across Intel processors marked Celeron alongside the Pentium 4 models on the market. This is a lower-tier line of devices, which is less powerful because some instructions are cut and parts of the processor's internal memory (cache) are disabled. Intel Celeron is aimed at users who care primarily about the price of a computer rather than its performance.

Some users believe that the lower-tier line consists of rejects from Intel Pentium 4 production. The source of this assumption is the stir in the market back in 1999, when a group of enthusiasts showed the public that the Pentium II and its lower-tier sibling Celeron were one and the same processor. Since then, however, the situation has changed radically, and the manufacturer has a separate production line for an inexpensive device for undemanding customers. In addition, one should not forget about the competitor AMD, which aims to force Intel out of the market. Accordingly, every price niche has to be occupied by a worthy product.

A new round of evolution

Many experts in computer technology believe that the appearance of the Intel Pentium 4 Prescott processor ushered in the era of multi-core devices and ended the race for gigahertz. With the new technologies, the manufacturer had to switch to Socket 775, which helped unlock the potential of personal computers in resource-intensive programs and dynamic games. According to statistics, more than 50% of all computers on the planet run on the legendary Socket 775 from Intel.

The new Intel processor caused a stir in the market, because the manufacturer managed to run two instruction streams on one core, creating a prototype of a dual-core device. The technology is called Hyper-Threading, and it is still used today in the most powerful processors in the world. Not stopping there, Intel introduced the Dual Core, Core 2 Duo and Core 2 Quad lines, which have several processor cores on one chip at the hardware level.

Two-faced processors

By the price-to-quality criterion, dual-core processors are definitely in the spotlight: low cost combined with excellent performance. The Intel Pentium Dual Core and Core 2 Duo microprocessors are the best-selling in the world. Their main difference is that the latter has two physical cores that work independently of each other, whereas the Dual Core processor is implemented as two controllers on the same chip whose operation is inextricably linked.

The frequency range of dual-core devices is somewhat lowered, at 2-2.66 GHz. The problem is the power dissipation of the die, which gets very hot at high frequencies. An example is the entire 800 series of Intel Pentium D (D820-D840), which were the first to receive two separate cores and operating frequencies over 3 GHz. The power consumption of these processors averages 130 watts (in winter, a perfectly acceptable room heater).

Overkill with four cores

The new four-core Intel processors were clearly designed for users who prefer to buy components with a large margin for the future. However, the software market has stalled: applications are developed, tested and deployed for devices with one or at most two cores. So what about systems with 6, 8 or more cores? They are a common marketing ploy aimed at buyers who want a heavy-duty computer or laptop.

It is the same as with megapixels in a camera: the better device is not the one labeled 20 megapixels but the one with a larger sensor and focal length. In processors, what matters is the instruction set that processes the application's program code and delivers the result to the user. Programmers, in turn, must optimize that code so that the microprocessor processes it quickly and without errors. Since most computers on the market are weak, it pays for developers to write programs that are not resource-intensive. Accordingly, a lot of computing power is not needed at this stage of evolution.

For owners of an Intel Pentium 4 who want to upgrade at minimal cost, professionals recommend looking at the secondary market. First, find out the technical characteristics of the motherboard installed in the system; this can be done on the manufacturer's website, in the "processor support" section. Then find several worthy candidates in the press, checking them against the motherboard's characteristics. It does not hurt to study reviews by owners and IT professionals of the selected devices. After that, you can start searching for the used processor you need.

For many platforms that support quad-core microprocessors, the Intel Core 2 Quad Q6600 is recommended. If the system can only work with dual-core processors, look for a server-class Intel Xeon or an Intel Extreme Edition aimed at overclockers (for Socket 775, naturally). They cost 800-1000 rubles on the market, which is an order of magnitude cheaper than any other upgrade.

Mobile device market

In addition to desktop computers, Intel Pentium 4 processors were also installed in laptops. For this the manufacturer created a separate line with the letter "M" in its marking. The characteristics of the mobile processors were identical to those of desktop ones, but the frequency range was clearly lowered: the most powerful laptop processor is the Pentium 4M 2.66 GHz.

However, the development of mobile platforms is so tangled that Intel itself has still not published a processor family tree on its official website. Using the 478-pin platform in laptops, the company changed only the manufacturing process of the processor. As a result, a whole "zoo" of processors was bred on one socket. The most popular, according to statistics, is the Intel Pentium Dual Core chip: it is the cheapest to produce, and its power dissipation is negligible compared to its counterparts.

The race to save energy

If processor power consumption is not critical for a desktop system, for a laptop the situation changes dramatically. Here Intel Pentium 4 devices were supplanted by less power-hungry microprocessors. A reader who looks at mobile processor tests will see that the performance of the old Core 2 Quad is not far behind the more modern Core i5 chip, but the latter consumes 3.5 times less power. Naturally, this difference affects the laptop's battery life.

Following the mobile processor market, you will find that the manufacturer has again returned to the technologies of the last decade and is actively installing Intel Atom products in all laptops. Just do not compare them with the low-power processors installed in netbooks and tablets. These are completely new, technologically advanced and very capable systems with 2 or 4 cores on board, able to compete in application and game tests on a par with Core i5/i7 processors.

Finally

As the review shows, the legendary Intel Pentium 4, whose characteristics have changed over the years, not only has the right to coexist with the manufacturer's new lines but also competes successfully in the price-to-quality segment. When it comes to upgrading a computer, before taking that step it is worth asking whether you would simply be trading like for like. In most cases, especially for demanding games, professionals recommend upgrading by replacing the video card. Many users also do not realize that the weak link of a computer in dynamic games is the hard disk drive; replacing it with an SSD can increase the performance of the computer several times over.

For mobile devices the situation is somewhat different. The operation of the whole system depends heavily on the temperature inside the laptop case. A powerful processor at peak load will clearly lead to slowdowns or a complete shutdown of the device (many negative reviews confirm this). Naturally, when buying a gaming laptop, pay attention to the power efficiency of the processor and to decent cooling of all components.

It would seem that the Pentium 4 2.8 GHz was released not so long ago, but the restless Intel is apparently so proud of its new processor core's ability to keep "overclocking" that it gives us no rest with announcements of more and more new processors :). However, today's hero differs from the previous top model by more than just 200+ megahertz. What some especially advanced users have long dreamed of has finally come true: the technology that emulates two processors on one processor core, previously the preserve of ultra-expensive Xeons, has finally been "liberated" and sent off to "swim freely on the desktop". Want a dual-processor home computer? Here you go! All subsequent Pentium 4 models, starting with the one considered in this article, will have Hyper-Threading support. However, someone may quite reasonably ask: "Why do I need a dual-processor machine at home? I am not running a server!" Why indeed? That is what we try to explain below. So: what is Hyper-Threading, and why might it be needed in ordinary personal computers?

SMP and Hyper-Threading: a whirlwind tour

To begin with, let's pretend that we are starting from scratch, i.e. that the workings of multiprocessor systems are unknown to us. We are not going to open a series of monographs on the subject with this article :), so we will not touch on difficult issues such as interrupt virtualization. We just need to understand how a classic SMP (Symmetric Multi-Processor) system works from the point of view of ordinary logic. This is necessary if only because few users have a good idea of how an SMP system works, and of when a real performance gain can be expected from using two processors instead of one and when it cannot. Honestly, one of the authors of this material once wasted an hour and a half proving to his, let's say, "not poor" friend that Unreal Tournament would run no faster on his multiprocessor machine than on a regular one :). Funny? Only from the outside, I assure you. So, let's imagine that we have two processors (let's stay with this simplest example) instead of one. What does this give us?

Basically, nothing. In addition, we need an operating system that can use these two processors. By definition this system must be multitasking (otherwise there is simply no point in having two CPUs), but its kernel must also be able to spread computation across several CPUs. A classic example of a multitasking OS that cannot do this is the family of Microsoft operating systems usually called "Windows 9x" for short: 95, 95 OSR2, 98, 98 SE, Me. They simply cannot detect more than one processor in the system, and there is really nothing more to explain :). SMP is supported by the same manufacturer's operating systems built on the NT kernel: Windows NT 4, Windows 2000, Windows XP. By virtue of their roots, all operating systems based on the Unix ideology also have this support: the various Free-, Net- and other BSDs, commercial Unix systems (such as Solaris, HP-UX, AIX) and the numerous flavors of Linux. And by the way, MS-DOS in general does not "understand" multiprocessing either :).

If the system does detect two processors, the further mechanism for using them is, in general (at the "logical" level, let us stress!), quite simple. If one application is running at a given moment, all the resources of one processor are given to it, while the second simply sits idle. If there are two applications, the second is handed to the second CPU, so in theory the execution speed of the first should not drop at all. That is the primitive picture. In reality, everything is more complicated. For a start, we may have only one user application running, but the number of processes (that is, fragments of machine code designed to perform a certain task) in a multitasking OS is always much larger. The OS itself is also an application; we will not go deeper, the logic is clear. Therefore the second CPU can in fact "help" even a single task a little by taking over the processes spawned by the operating system. Again, this is a simplification: the CPUs cannot be divided between the user application and the OS so ideally, but at least the processor busy with the "useful" task will be distracted less.

In addition, even a single application can spawn threads, which can run separately on several CPUs if they are available. Almost all rendering programs do this, for example: they were written specifically with multiprocessor systems in mind. Therefore, where threads are used, the gain from SMP is sometimes quite significant even in a "single-tasking" situation. In fact, a thread differs from a process in only two ways. First, it is never spawned by the user (a process can be started by either the system or a person, and in the latter case process = application; a thread is created only by a running process). Second, a thread dies along with its parent process regardless of its own wishes: for example, if the parent process "glitches and crashes", the OS considers all the threads it spawned ownerless and "kills" them itself, automatically.
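As a minimal sketch of one application spreading its work over threads, the Python fragment below starts one worker thread per tile of a hypothetical rendering job. The names `render` and `render_tile` and the workload itself are assumptions made for illustration; they are not taken from any real renderer. Note also that in the standard CPython interpreter threads do not execute Python bytecode truly in parallel, so this illustrates only the process/thread relationship described above, not an SMP speedup.

```python
import threading


def render_tile(tile_id, results):
    # Hypothetical unit of work standing in for rendering one part of an image.
    results[tile_id] = sum(i * i for i in range(1000 * (tile_id + 1)))


def render(num_threads=2):
    # The running process (this program) creates the threads itself;
    # the user never starts a thread directly.
    results = {}
    threads = [
        threading.Thread(target=render_tile, args=(tile_id, results))
        for tile_id in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every worker thread has finished
    return results
```

All threads share the `results` dictionary because they live inside the same process, which is also why they disappear together with it.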

Also, do not forget that in a classic SMP system each processor works with its own cache and register set, but memory is shared. Therefore, if two tasks work with RAM at the same time, they will interfere with each other anyway, even if each has its "own" CPU. And finally, the last point: in reality we are dealing with far more than one, two or even three processes. The collage above (it really is a collage, because all user processes, i.e. applications launched "for work", were removed from the Task Manager screenshot) clearly shows that "bare" Windows XP by itself, without a single application launched, has already spawned 12 processes, many of them multi-threaded, and the total number of threads reaches two hundred and eight (!!!).

Therefore we absolutely cannot count on reaching a "separate CPU for each task" scheme: the processors, physical or virtual, will still switch between code fragments, even if they are virtual squared and there are 10 of them for each physical core :). In reality, though, things are not so sad: with well-written code, a process (or thread) that is not doing anything at the moment takes almost no processor time (this is also visible in the collage).

Now, having dealt with "physical" multiprocessing, let's move on to Hyper-Threading. In fact this is multiprocessing too, only virtual. There is actually just one Pentium 4 processor: here it is, sitting in its socket with a cooler slapped on top :). There is no second socket, and yet the OS sees two processors. How come? In general, it is very simple. Let's look at the picture.

Here we still have to go a little deeper into technical details, because otherwise, alas, we will not be able to explain anything. Those who are not interested in these details can simply skip this paragraph. So, in our case another AS block (IA-32 Architectural State) was added to the classic "single-core" processor. The Architectural State contains the state of the registers (general-purpose, control, APIC, service). In effect, AS#1 plus the single physical core (branch prediction units, ALUs, FPUs, SIMD units, etc.) is one logical processor (LP1), and AS#2 plus the same physical core is the second logical processor (LP2). Each LP has its own interrupt controller (APIC, Advanced Programmable Interrupt Controller) and its own set of registers. So that the two LPs use the registers correctly, there is a special table, the RAT (Register Alias Table), whose data establishes the correspondence between the general-purpose registers seen by a logical processor and the registers of the physical CPU. Each LP has its own RAT. As a result, we get a scheme in which two independent code fragments can be freely executed on the same core, that is, a de facto multiprocessor system!

Hyper-Threading compatibility

Returning to practical, mundane matters, I would like to touch on another important aspect: not all operating systems, even those that support multiprocessing, can work with such a CPU as if it were two. This is due to a subtle point: how the number of processors is determined when the operating system initializes. Intel says explicitly that an OS without ACPI support will not be able to see the second logical processor. In addition, the motherboard BIOS must also be able to detect a processor with Hyper-Threading support and report it to the system accordingly. For Windows, for example, this means that not only the Windows 9x line is out of luck but also Windows NT: lacking ACPI support, the latter cannot work with one new Pentium 4 as if it were two. The good news is that Windows XP Home Edition, although it is blocked from working with two physical processors, can work with the two logical ones provided by Hyper-Threading. And Windows XP Professional, by the way, despite being limited to two physical processors, honestly "sees" four when two CPUs with Hyper-Threading support are installed :).
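From the software side, the outcome of this detection is simply the number of processors the OS reports to programs. A minimal Python sketch, assuming a modern OS and interpreter rather than the Windows versions discussed here: the standard `os.cpu_count()` call returns the number of logical CPUs, so a single processor with Hyper-Threading enabled would typically be reported as 2. The helper name `logical_cpu_count` is hypothetical.

```python
import os


def logical_cpu_count():
    # os.cpu_count() reports logical CPUs as seen by the OS,
    # or None when the number cannot be determined.
    count = os.cpu_count()
    return count if count is not None else 1


print("Logical processors visible to the OS:", logical_cpu_count())
```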

Now a little about the hardware. That new CPUs running above 3 GHz may require a new motherboard is probably known to everyone by now; the world (more precisely, the Internet) has long been full of rumors. Unfortunately, this is actually the case. Even while nominally keeping the same Socket 478, Intel failed to keep power consumption and heat dissipation unchanged in the new processors: they draw more power and accordingly run hotter. One may assume (although this has not been officially confirmed) that the rise in current draw is due not only to the higher frequency but also to the expected use of "virtual multiprocessing", which raises the average load on the core and hence the average power consumption. "Old" motherboards may in some cases be compatible with the new CPUs, but only if they were built "with a margin". Roughly speaking, the manufacturers who designed their boards according to Intel's own recommendations for Pentium 4 power consumption lost out to those who "played it safe" and fitted a VRM with some headroom and laid out the board accordingly. But that is not all. Besides the OS, the BIOS and the board electronics, the chipset must also be compatible with Hyper-Threading. Therefore only those whose mainboard is based on one of the new chipsets with 533 MHz FSB support (i850E, i845E, i845PE/GE) can become happy owners of two processors for the price of one :). The i845G stands somewhat apart: the first revision of this chipset does not support Hyper-Threading, while the later one is compatible.

Well, it seems we have sorted out theory and compatibility. But let's not rush. OK, we have two "logical" processors, we have Hyper-Threading, wow, cool. But as mentioned above, physically we had one processor and we still have one. Why, then, is such a complex "emulation" technology needed, apart from being able to proudly show friends and acquaintances a Task Manager with graphs for two CPUs?

Hyper-Threading: why is it needed?

Contrary to custom, in this article we will pay a little more attention than usual to reasoning: not technical prose (where, in general, everything is interpreted fairly unambiguously, and completely independent people most often draw very similar conclusions from the same results) but "technical lyrics", i.e. an attempt to understand what Intel is offering us and how to regard it. I have written repeatedly in the "Editor's Column" on our website, and I will repeat here, that this company, if you look closely, has never been distinguished by the absolute perfection of its products; moreover, other manufacturers' variations on the same themes sometimes turned out to be much more interesting and conceptually coherent. However, as it turned out, it is not necessary to do absolutely everything perfectly. The main thing is that the chip should embody some idea, and that the idea should arrive at the right time and in the right place. And also that others simply do not have it.

This was the case with the Pentium, when Intel countered the AMD Am5x86, very fast in integer work, with a powerful FPU. This was the case with the Pentium II, which received a wide bus and a fast second-level cache, thanks to which no Socket 7 processor could keep up with it. So it was (at least, I consider this a fait accompli) with the Pentium 4, which set itself against everyone else with SSE2 support and a rapid rise in frequency, and de facto won as well. Now Intel offers us Hyper-Threading. We are not calling on you to beat your forehead against the wall in sacred hysteria and shout "Lord have mercy", "Allah is great" or "Intel rulez forever". No, we simply suggest thinking about why a manufacturer known for the competence of its engineers (not a word about the marketers! :)) and for the huge sums it spends on research is offering us this technology.

Declaring Hyper-Threading "another marketing gimmick" is, of course, as easy as shelling pears. However, do not forget that this is a technology: it requires research, development money, time and effort. Would it not be easier to hire another hundred PR managers for less money, or to make another dozen beautiful commercials? Apparently not. So "there is something in it". Now we will try to understand not so much what came out in the end as what the IAG (Intel Architecture Group) developers were guided by when they decided (and such a decision was certainly made!) whether to develop "this interesting idea" further or to put it away in the chest for ideas that are amusing but useless.

Oddly enough, to understand how Hyper-Threading functions, it is enough to understand how any multitasking operating system works. After all, how does it manage to run dozens of tasks at once on one processor? This "secret" has long been known to everyone: in fact only one task is running at any given moment (on a single-processor system), but switching between pieces of code from different tasks is so fast that it creates the illusion that many applications are running simultaneously.
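The time-slicing idea can be sketched with a toy round-robin scheduler. This is an illustrative model only, not how any particular OS scheduler is implemented; the function name `round_robin`, the task names and the abstract "work units" are all assumptions.

```python
from collections import deque


def round_robin(tasks, time_slice):
    """Simulate one CPU switching between tasks.

    tasks: dict mapping a task name to its remaining work units.
    Returns the list of (task name, units executed) slices in run order.
    """
    queue = deque(tasks.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        run = min(time_slice, remaining)  # run for one slice at most
        timeline.append((name, run))
        remaining -= run
        if remaining > 0:
            queue.append((name, remaining))  # unfinished: back of the queue
    return timeline


print(round_robin({"player": 3, "browser": 5}, time_slice=2))
```

At any moment only one entry of the timeline is "running"; with short enough slices, both tasks appear to progress at once.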

In essence, Hyper-Threading offers us the same thing, but implemented in hardware, inside the CPU itself. There are a number of different execution units (ALU, MMU, FPU, SIMD), and there are two pieces of code executing "simultaneously". A special block keeps track of which instructions from each fragment need to be executed at the moment and then checks whether all the processor's execution units are busy. If one of them is idle and is able to execute the instruction, the instruction is passed to it. Naturally, there is also a mechanism for forcibly "sending" an instruction for execution; otherwise one process could seize the whole processor (all execution units) and the second section of code (running on the second "virtual CPU") would stall. As far as we understand, this mechanism is not (yet?) intelligent, that is, it cannot work with priorities, but simply alternates instructions from the two chains in order of arrival, on the principle "I have executed your instruction, now make way for the other thread". Unless, of course, the instructions of one chain never compete with those of the other for execution units. In that case we get truly 100% parallel execution of two code fragments.
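The dispatch principle described above can be illustrated with a heavily simplified toy model. It assumes one execution unit of each kind, in-order issue, at most one instruction per stream per cycle, and single-cycle instructions; the real Pentium 4 pipeline is far more complex, and the function name `run_smt` is hypothetical.

```python
def run_smt(stream_a, stream_b):
    """Count cycles needed to run two instruction streams on shared units.

    Each stream is a list of execution-unit names, e.g. ["ALU", "FPU"].
    Priority alternates between the streams every cycle, so neither
    stream can monopolize a contested unit.
    """
    streams = [list(stream_a), list(stream_b)]
    pos = [0, 0]   # index of the next instruction in each stream
    cycles = 0
    first = 0      # stream that gets first pick in the current cycle
    while pos[0] < len(streams[0]) or pos[1] < len(streams[1]):
        busy = set()  # units already taken in this cycle
        for s in (first, 1 - first):
            if pos[s] < len(streams[s]):
                unit = streams[s][pos[s]]
                if unit not in busy:
                    busy.add(unit)
                    pos[s] += 1
        cycles += 1
        first = 1 - first
    return cycles


print(run_smt(["ALU"] * 4, ["FPU"] * 4))  # streams never compete for a unit
print(run_smt(["ALU"] * 4, ["ALU"] * 4))  # streams compete every cycle
```

In this model the non-competing pair finishes in 4 cycles and the competing pair in 8, which mirrors the two extremes the text describes: fully parallel execution versus plain alternation.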

Now let's think about what Hyper-Threading is potentially good for and what it is not. The most obvious consequence of using it is higher processor efficiency. Indeed, if one program uses mostly integer arithmetic and the other performs floating-point calculations, then while the first is executing the FPU simply does nothing, and while the second is executing the ALU, conversely, does nothing. It would seem that this could be the end of the story. However, we have considered only the ideal case (from the point of view of Hyper-Threading). Let's now look at another one: both programs use the same processor units. Clearly it is quite difficult to speed up execution in this case, because the physical number of execution units has not changed through "virtualization". But will it not slow down? Let's figure it out. On a processor without Hyper-Threading we simply have "fair" alternating execution of the two programs on the same core, with the operating system (itself another program) as arbiter, and their total running time is determined by:

- the execution time of the code of program #1

- the execution time of the code of program #2

- the time spent switching between code fragments of programs #1 and #2

What do we have in the case of Hyper-Threading? The scheme becomes a little different:

- the execution time of program #1 on (virtual) processor #1

- the execution time of program #2 on (virtual) processor #2

- the time spent switching the one physical core (as the set of execution units required by both programs) between the two emulated "virtual CPUs"

It remains to admit that here, too, Intel acts quite logically: only the third items compete with each other in terms of speed. In the first case the action is performed by software and hardware together (the OS controls switching between threads, using processor functions to do so), whereas in the second case we have a fully hardware solution: the processor does everything itself. In theory, a hardware solution is always faster. We stress: in theory. The practical part is still ahead.
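The comparison of the two three-item lists can be written out as simple arithmetic. The sketch below is a worked illustration only: the function name `total_time` and every number in it are invented for the example and are not measurements of any real system.

```python
def total_time(t_prog1, t_prog2, switch_cost, switches):
    # Total = execution time of both programs + accumulated switching overhead.
    return t_prog1 + t_prog2 + switch_cost * switches


# Hypothetical figures: same programs, same number of switches,
# only the cost of one switch differs between the two schemes.
os_switching = total_time(10.0, 12.0, switch_cost=0.5, switches=4)
hw_switching = total_time(10.0, 12.0, switch_cost=0.125, switches=4)

print("OS-driven switching:  ", os_switching)
print("Hardware switching:   ", hw_switching)
```

Since the first two terms are identical in both schemes, any difference in this model comes entirely from the third term, which is exactly the point the text makes.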

But that's not all. Also, one of the most serious no, not shortcomings, but rather unpleasant moments is that, alas, commands are not executed in a vacuum, but instead Pentium 4 has to deal with classic x86 code, which actively uses direct addressing of cells and even entire arrays that are outside the processor in RAM. And in general, by the way, most of the processed data is most often located there :). Therefore, our virtual CPUs will “fight” among themselves not only for registers, but also for the common processor bus for both, bypassing which data simply cannot get into the CPU. However, there is one subtle point: today "honest" dual-processor systems based on Pentium III and Xeon are in exactly the same situation! For our good old AGTL + bus, inherited by all today's Intel processors from the famous Pentium Pro (later it was only modified, but the ideology was practically not touched) ALWAYS ONE, no matter how many CPUs were installed in the system. Here is such a "processor coax" :). Only AMD tried to deviate from this scheme on x86 with its Athlon MP for AMD 760MP/760MPX, each processor goes to the northbridge of the chipset separate tire. However, even in such an “advanced” version, we still run away from problems not very far for something, but we have exactly one memory bus and in this case it is already everywhere (recall, we are talking about x86 systems).

However, every cloud has a silver lining, and Hyper-Threading can extract some benefit even from this generally unpleasant fact. In theory, we should see a significant performance gain not only when several tasks use different functional units of the processor, but also when the tasks work with data in RAM in different ways. To return to the old example in a new guise: if one application is busy computing something "inside itself" while the other constantly pumps data from RAM, their total execution time with Hyper-Threading should, in theory, decrease even if they use the same instruction execution units, if only because memory read instructions can be processed while the first application is busy computing.
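The overlap argument can be illustrated with a toy model. The sketch below is our own simplification, not a description of how the Pentium 4 actually schedules work: each task is a list of cycles labelled with the single resource it needs (`alu` for the execution units, `mem` for a memory read), and the two logical CPUs advance in the same cycle only when they need different resources. All function names and labels are hypothetical.

```python
# Toy model of two logical CPUs sharing one physical core.
# Each task is a list of cycles; each cycle names the one resource it needs.

def serial_time(task_a, task_b):
    """Run one task after the other: every cycle of both tasks is paid in full."""
    return len(task_a) + len(task_b)

def overlapped_time(task_a, task_b):
    """Run both tasks on two logical CPUs of one core.

    In a given cycle both tasks advance only if they need different
    resources; on a conflict task A goes first and task B waits.
    """
    i = j = cycles = 0
    while i < len(task_a) or j < len(task_b):
        cycles += 1
        a = task_a[i] if i < len(task_a) else None
        b = task_b[j] if j < len(task_b) else None
        if a is None:          # task A finished, only B advances
            j += 1
        elif b is None:        # task B finished, only A advances
            i += 1
        elif a != b:           # different resources: both advance
            i += 1
            j += 1
        else:                  # same resource: A wins this cycle
            i += 1
    return cycles

compute_bound = ["alu"] * 8    # "computes something inside itself"
memory_bound = ["mem"] * 8     # "constantly pumps data from RAM"

print("serial:              ", serial_time(compute_bound, memory_bound))
print("overlapped, mixed:   ", overlapped_time(compute_bound, memory_bound))
print("overlapped, same use:", overlapped_time(compute_bound, compute_bound))
```

In this model a compute-only task paired with a memory-only task finishes in half the serial time, while two tasks that compete for the same resource gain nothing. Real gains are far smaller, since real code mixes both kinds of work.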

So, let's sum up: from a theoretical point of view, Hyper-Threading technology looks very good and, we would say, "adequate", that is, it matches today's realities. It is already quite rare to find a user with one lonely window open on the screen: everyone wants to listen to music, surf the Internet, burn discs of their favorite MP3s, and maybe even play some shooter or strategy game in the background, and games, as you know, "love" the processor with a terrible passion :). On the other hand, it is well known that a specific implementation can sometimes kill even the most excellent idea with its "crookedness", and we have run into this in practice more than once. Therefore, having finished with the theory, let's move on to practice, that is, to the tests. They should help us answer the second main question: is Hyper-Threading as good not as an idea, but as a concrete implementation of that idea "in silicon"?

Testing

Test stand:

- Processor: Intel Pentium 4 3.06 GHz with Hyper-Threading Technology, Socket 478

- Motherboard: Gigabyte 8PE667 Ultra (BIOS F3) based on i845PE chipset

- Memory: 512MB PC2700(DDR333) DDR SDRAM DIMM Samsung, CL 2

- Video card: Palit Daytona GeForce4 Ti 4600

- Hard disk: IBM IC35L040AVER07-0, 7200 rpm

Software:

- OS and drivers:

- Windows XP Professional SP1

- DirectX 8.1b

- Intel Chipset Software Installation Utility 4.04.1007

- Intel Application Accelerator 2.2.2

- Audio drivers 3.32

- NVIDIA Detonator XP 40.72 (VSync=Off)

- Test applications:

- (with support for multiprocessing and Hyper-Threading technology)

- RazorLame 1.1.5.1342 + Lame codec 3.92

- VirtualDub 1.4.10 + DivX codec 5.02 Pro

- WinAce 2.2

- Discreet 3ds max 4.26

- BAPCo & MadOnion SYSmark 2002

- MadOnion 3DMark 2001 SE build 330

- Gray Matter Studios & Nerve Software Return to Castle Wolfenstein v1.1

- Croteam/GodGames Serious Sam: The Second Encounter v1.07

Contrary to custom, today we will not compare the performance of the new Pentium 4 3.06 GHz against previous models or against competing processors, because that would be largely pointless. The tests that make up our methodology have not changed for quite a long time, and those who wish can make the necessary comparisons using the data from previous articles; we will focus on the main point without getting lost in the details. And the main point of this article, as you might guess, is the study of Hyper-Threading technology and its impact on performance... performance of what? Not such an idle question, as it turns out. However, let's not get ahead of ourselves. We will start with the traditional tests and use them to move smoothly to the ones that matter most for this article.

WAV to MP3 encoding (Lame)

VideoCD to MPEG4 (DivX) encoding

Archiving with WinAce with a 4 MB dictionary

Hyper-Threading did not demonstrate any obvious advantage, but it must be said that we did not give the technology much of a chance: almost all of these applications are "single-processor" and do not spawn simultaneously executing threads (we checked!). Consequently, in these cases we are dealing with an ordinary Pentium 4 with a slightly higher clock frequency. It is hardly appropriate to talk about trends against the background of such tiny discrepancies, although if you insist on conjuring some up, they are even slightly in favor of Hyper-Threading.

3ds max 4.26

A classic test, and at the same time the first application in this review that explicitly supports multiprocessing. Of course, the advantage of the system with Hyper-Threading enabled cannot be called colossal (it is about 3%), but let's not forget that here Hyper-Threading was working in far from its best situation: 3ds max implements SMP support by spawning child threads, all of which are used for the same purpose (rendering a scene). They therefore contain roughly the same instructions and work in the same way (following the same pattern). We have already written that Hyper-Threading is better suited to the case where different programs run in parallel and use different CPU units. It is all the more pleasing that even in this situation the technology managed to provide a performance gain, albeit a small one, "out of thin air". Rumor has it that 3ds max 5.0 gains more from enabling Hyper-Threading, and given the zeal with which Intel promotes its technologies among software vendors, this should at least be checked. We will certainly do so, but in later articles on this topic.

3DMark 2001SE

The results are, on the whole, quite natural and can hardly surprise anyone. Perhaps it is better to use 3D benchmarks for exactly what they are intended for, testing the speed of video cards rather than processors? Probably so. Still, extra results never hurt. The lower score for the system with Hyper-Threading enabled is a little disturbing. However, given that the difference is about 1%, we would not draw far-reaching conclusions from it.

Return to Castle Wolfenstein,

Serious Sam: The Second Encounter

Roughly the same situation. However, we have not yet come close to tests that can actually demonstrate the pros (or cons) of Hyper-Threading. Sometimes (by an imperceptibly small amount) using the "pseudo-multiprocessor" gives a negative result. But these are not the sensations we are waiting for, are they? :) Even testing with sound enabled does not help much, although in theory sound should be processed by a separate thread and so give the second logical processor a chance to prove itself.

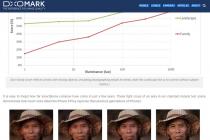

SYSmark 2002 (Office Productivity and Internet Content Creation)

And now we really want to shout at the top of our voice: "Well, who doubted that Hyper-Threading is really capable of increasing performance on real tasks?!" The result, +16 to 20%, is truly staggering. What is most interesting is that SYSmark tries to emulate exactly the usage pattern that Intel considers the most "successful" for Hyper-Threading: launching various applications and working with them at the same time. Moreover, while executing its script, SYSmark 2002 simulates the user's work quite competently, "sending to the background" applications that have already received their "long-running task". For example, video encoding takes place while other applications from the Internet Content Creation script are running, and in the office subtest the ubiquitous anti-virus software and speech-to-text conversion with Dragon NaturallySpeaking run in the background. In fact, this is the first test in which more or less favorable conditions are created for Hyper-Threading, and the technology immediately showed its best side! However, we decided not to rely entirely on tests that we did not write ourselves, and conducted several demonstrative experiments of our own "to reinforce the effect".

Experimenting with Hyper-Threading

Simultaneous rendering in 3ds max and archiving in WinAce

First, a standard test scene was rendered in 3ds max against the background of a deliberately longer archiving process. Then a standard test file was archived in WinAce against the background of rendering a specially lengthened scene. The result was compared with the total time of running the same standard tests sequentially. Two correction factors were applied to the figures obtained: one to equalize the execution time of the tasks (we believe that the speedup from running two applications in parallel can be calculated correctly only if the tasks have the same duration), and one to "remove" the effect of the uneven allocation of processor resources between foreground and background applications. As a result, we "counted" a positive speedup of 17% from the use of Hyper-Threading technology.
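The basic comparison behind such a figure, sequential total versus simultaneous run, can be sketched as follows. This is a minimal illustration with made-up timings; it does not reproduce the two correction factors mentioned above, whose exact form the article does not give, and the function name is hypothetical.

```python
def parallel_gain_percent(t_first_alone, t_second_alone, t_both_together):
    """Speedup of running two tasks simultaneously versus one after another.

    All arguments are wall-clock times in the same unit (e.g. seconds).
    Returns the gain as a percentage; 0 means no benefit from overlap.
    """
    sequential = t_first_alone + t_second_alone
    return (sequential / t_both_together - 1.0) * 100.0

# Hypothetical timings, NOT the article's measurements:
# each task takes 100 s alone, both finish in 160 s when run together.
print(f"{parallel_gain_percent(100, 100, 160):.1f}%")
```

Equal task durations are used in the example because, as noted above, the comparison is only meaningful when the two tasks run for the same length of time.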

So, the impressive SYSmark results were confirmed in a test with two real programs running side by side. Of course, the speedup is nowhere near twofold, and we chose the pair of tests ourselves, based on what we considered the most favorable situation for Hyper-Threading. But let's think about these results in the following context: the processor whose performance we are examining is, with the exception of Hyper-Threading support, just the long-familiar Pentium 4. In fact, the "without Hyper-Threading" column is what we would have seen if this technology had not been brought to desktops. That creates a slightly different feeling, doesn't it? Let's not complain (as is our local tradition) that "everything is not as good as it could be", and simply note that, together with the new processor, we have been given one more way to speed up some operations.

Background archiving in WinAce + movie playback

Rendering in 3ds max + background music

The test procedure is quite trivial. While a movie pre-compressed to MPEG4 with the DivX codec was playing, archiving in WinAce was started in the background (of course, had there been dropped frames or stuttering during playback, the test would have made no practical sense, but there were no complaints about playback quality). Similarly, while a normal test scene was being rendered in 3ds max, music from an MP3 file was played in the background through WinAmp (we watched for "stuttering" in the sound and never noticed any). Note the natural distribution of the main and background roles in each pair of applications. As the result we took, as usual, the archiving time and the full scene rendering time, respectively. The effect of Hyper-Threading in numbers: +13% and +8%.

A fairly realistic situation is exactly what we tried to reproduce. In general (and this will be discussed later), Hyper-Threading is not as straightforward as it seems. A simple head-on approach ("we see two processors in the OS, so let's treat them as two processors") does not give a tangible effect, and there is even a certain feeling of being deceived. However, returning to the point above, let's try to evaluate the results from a somewhat different position: tasks that normally take a certain time to complete are completed in less time when Hyper-Threading is enabled. Who would argue that "something" is worse than "nothing"? That is the whole point: we are by no means being offered a panacea, but "only" a means of speeding up an existing processor core that has not undergone radical changes. Does it work? Yes. Then, by and large, what other questions can there be? Of course, in most cases the gain is far from the 30% promised in the press release, but let's not pretend that in real life you can compare the press release of company X with that of company Y and find that the first contains fewer promises, or more achievable ones. :)

Testing in CPU RightMark 2002B

The new version of CPU RM supports multithreading (and therefore Hyper-Threading), and of course we could not pass up the opportunity to test the new processor with this benchmark. Let us note that this is CPU RM's first "outing" in tests of multiprocessor systems, so the study was, in a sense, "mutual": we tested Hyper-Threading as a special case of SMP on a system with a Pentium 4 3.06 GHz, and that system, in turn, tested our benchmark :) for the validity of its results and hence for the correctness of its multithreading support. It is no exaggeration to say that both sides were satisfied with the results :). Although CPU RM is still "not fully multiprocessor" (multiple threads are created only in the rendering block, while the Math Solving block remains single-threaded), our results clearly show that SMP and Hyper-Threading support is present and that its benefits are visible to the naked eye. By the way, implementing multithreading in the "solver" block is a much less trivial task than in the rendering block, so if any readers have ideas on the subject, we look forward to your comments and suggestions. We remind you that CPU RightMark is an open source benchmark, so those interested in programming can not only use it but also propose improvements to the code.

Before moving on to the diagrams, let's take a closer look at the methodology. From the column labels it is easy to see that system performance was tested in as many as twelve (!) variants. There is nothing frightening about this, and it is quite easy to make sense of. The following factors were varied:

- The tests were run with Hyper-Threading enabled and disabled.

- The CPU RM settings for the number of threads created were used: one, two, and four.

- The CPU RM settings were used for the type of instructions used in the calculation module: SSE2 and "classic" x87 FPU.

The last point needs explaining. It would seem that refusing to use SSE2 on a Pentium 4 is, forgive us, complete nonsense (as we have written many times before). In this case, however, it was a good, purely theoretical chance to test how Hyper-Threading functions and how effective it is. The point is that FPU instructions were used only in the calculation module, while the renderer still had SSE support enabled. Those who read the theoretical part carefully have probably already understood where the catch lies: we forced different parts of the benchmark to use different CPU execution units! In theory, with SSE2 forcibly disabled, the Math Solving block of CPU RM should leave the SSE/SSE2 execution units "untouched", making it possible for the rendering block of the same CPU RM to use them to the fullest. Now it is time to move on to the results and see how correct our assumptions were. Note also that, to increase the validity and stability of the results, one more setting was changed: the number of frames (300 by default) was increased to 2000.
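The twelve variants are simply every combination of the three factors listed in the methodology (2 x 3 x 2). A short sketch that enumerates them; the labels are ours and are not the column names used in the diagrams:

```python
from itertools import product

# The three factors varied in the CPU RM runs, as listed in the methodology.
hyper_threading = ("HT on", "HT off")
thread_counts = (1, 2, 4)
solver_instructions = ("SSE2", "FPU")   # instruction set used by Math Solving

variants = list(product(hyper_threading, thread_counts, solver_instructions))

for ht, threads, isa in variants:
    print(f"{ht:6} | {threads} thread(s) | solver: {isa}")

print("total variants:", len(variants))
```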

Here there is practically nothing to comment on. As we said above, the "solver" block (Math Solving) remained untouched by multithreading, so Hyper-Threading has no effect on its performance. At the same time, it is gratifying that it does no harm either! After all, we already know that situations in which "virtual multiprocessing" interferes with programs are theoretically possible. However, we advise you to keep one fact in mind: look how badly the solver block's performance suffers when SSE2 is not used! We will return to this topic a little later, and in a very unexpected way.

And here is the long-awaited triumph. It is easy to see that as soon as the number of threads in the rendering block exceeds one (with a single thread it is rather difficult to make use of Hyper-Threading :), the configuration immediately moves to one of the top places. It is also noticeable that exactly two threads are optimal for systems with Hyper-Threading. True, someone may recall the Task Manager screenshot with which we "frightened" you above, so let us add a caveat: two actively working threads. In general, this is obvious and quite logical: since we have two virtual CPUs, the most sensible arrangement is one in which there are also two threads. Four is already "overkill", because several threads begin to "fight" for each of the virtual CPUs. However, even in this case the system with Hyper-Threading enabled managed to overtake its "single-processor" competitor.
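The rule of thumb "one active thread per logical processor" is easy to express in code. The sketch below is only an illustration of sizing a worker pool, with hypothetical function names; it is not how CPU RM is implemented, and in standard CPython pure-Python work in threads is serialized by the interpreter lock, so the example shows the sizing logic rather than a real speedup.

```python
import os
from concurrent.futures import ThreadPoolExecutor

def pick_render_threads(logical_cpus=None):
    """Return one worker per logical processor, and never fewer than one."""
    if logical_cpus is None:
        # os.cpu_count() reports logical CPUs, so a Hyper-Threading
        # processor with one physical core is reported as 2.
        logical_cpus = os.cpu_count() or 1
    return max(1, logical_cpus)

def render_frames(frames, render_one, logical_cpus=None):
    """Render every frame using a pool sized to the logical CPU count."""
    workers = pick_render_threads(logical_cpus)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_one, frames))

# Stand-in "renderer" for demonstration: doubles the frame number.
print(render_frames(range(4), lambda frame: frame * 2, logical_cpus=2))
```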

It is customary to talk about successes in detail and with relish, and naturally all the more so when they are your own. We can state that the "experiment with switching to FPU instructions" was also an unqualified success. It would seem that abandoning SSE2 should have hit performance hard (recall the devastating Math Solving Speed results with FPU instructions in the first diagram of this section). But what do we see? In the second line, at the very top, among the champions, is exactly such a configuration! The reasons are again clear, and this is very pleasing, because their clarity allows us to conclude that the behavior of systems with Hyper-Threading support is predictable. On the system with Hyper-Threading enabled, the "negative" result of the Math Solving block was "compensated" by the contribution to overall performance of the rendering block, which had the SSE/SSE2 execution units entirely to itself. Moreover, it was compensated so well that this system ended up among the leaders. It remains only to repeat what has been said several times above: the full potential of Hyper-Threading shows in situations where actively running programs (or threads) use different CPU execution units. In this case the effect was especially pronounced because we were dealing with well-written, carefully optimized CPU RM code. The main conclusion, however, is that Hyper-Threading works in principle, which means it will work in other programs too. Naturally, the more time their developers spend optimizing the code, the better it will work.

Conclusions

Once again, to the delight of all progressive mankind, Intel has released a new Pentium 4 whose performance is even higher than that of the previous Pentium 4, but this is not the limit, and soon we will see an even faster Pentium 4... Hmm, yes... Not that this is untrue. Indeed it is true. However, we have already agreed that this article would not compare the performance of the aforementioned Pentium 4 3.06 GHz with other processors, for the reason given above. You see, we are interested in Hyper-Threading. That is how fastidious we are: we do not care about the predictable results of raising the clock frequency of a long-familiar and predictable processor core by another 200 MHz; give us a "fresh thing" that has not been examined before. And, as far-sighted readers have probably already guessed, our conclusions will again be devoted to this very technology that so preoccupies us, and to everything connected with it. Why? Probably because you know everything else very well yourselves...

And since we are talking about Hyper-Threading, let's first settle the main thing for ourselves: how should we regard it? What is it? Without claiming to possess the ultimate truth, let's formulate the general opinion we have arrived at from the test results: Hyper-Threading is not SMP. "Aha!!!" fans of the alternative will cry. "We knew it!!!" they will scream with all their might. "Hyper-Threading is dishonest SMP!!!" These cries will echo for a long time over the boundless expanses of the Runet... We, like elders made wise by saxauls (or is it the other way round? :), will object: "Guys, who actually promised otherwise?" Who uttered this seditious abbreviation? SMP, let us recall, is Symmetric Multi-Processing, that is, a multiprocessor architecture. And we, sorry, have only one processor. Yes, it is equipped with a certain, to put it bluntly, "feature" that lets it pretend there are two processors. But does anyone make a secret of the fact that in reality this is not so? We have not noticed anyone doing so... Therefore we are dealing with a "feature" and nothing more, and it should be treated that way and no other. So let us not overthrow idols that no one has erected, and calmly consider whether this feature makes any sense.

The test results show that in some cases it does. In fact, what we discussed purely theoretically in the first part of the article has been confirmed in practice: Hyper-Threading technology makes it possible to increase processor efficiency in certain situations, in particular when applications of different kinds run at the same time. Let's ask ourselves: "Is this a plus?" Our answer: "Yes, it is a plus." Is it comprehensive and global? Apparently not, because the effect of Hyper-Threading is observed only in some cases. But is that so important if we look at the technology as a whole? Clearly, a CPU capable of doing everything it did before twice as fast would be a huge breakthrough. However, as the ancient Chinese said, "God forbid that we should live in an era of change." Intel did not usher in such an era; it simply gave its processor the ability to do some things faster. It is the classic Western principle, not well received in our freebie-loving society: "You can get something better if you pay a little more."

Returning to practice: Hyper-Threading cannot be called a "paper" technology, because in certain combinations it gives quite a noticeable effect. We would even add that the effect is much greater than what is sometimes observed when comparing, for example, two platforms with the same processor on different chipsets. However, it should be clearly understood that this effect is not always observed and depends substantially on... probably the most suitable term is "style": the style of the user's interaction with the computer. And it is here that what we said at the very beginning shows itself: Hyper-Threading is not SMP. The "classic SMP style", in which the user counts on the response of an equally classic "honest" multiprocessor system, will not give the desired result here.

"Hyper-Threading style" is a combination of, let's not be afraid of the words, "entertainment" or "utility" processes with "work" processes. You will not get much of a speedup from a CPU with this technology in most classic multiprocessor tasks, or if you habitually run only one application at a time. But you will most likely get shorter execution times for many background tasks performed "on the side" of regular work. In effect, Intel has simply reminded us all once more that the operating systems we work in are multitasking ones, and has proposed a way to speed up not so much a single process as a set of concurrently running applications. This is an interesting approach and, it seems to us, one that is much in demand. Now it has a name of its own. Without further ado, we want to say: it is simply good that this original idea occurred to someone, and better still that they were able to turn it into a specific product. As for the rest, as always, time will tell.

As you know, revolutions in the computer world are becoming rarer. And are they really necessary where, in general, "everything is fine" and the capabilities of systems and products more than cover the needs of most modern users? This fully applies to the processors of Intel Corporation, the industry leader. The company has a full line of high-performance CPUs at every level (server, desktop, mobile), clock speeds have long exceeded the "sky-high" 3 GHz, and sales are going "with a bang". And probably, were it not for the revived competitors (more precisely, competitor), everything would be really fine.

But the "gigahertz race" does not stop. Leaving aside questions like "Who needs it?" and "How much demand is there for it?", let's just accept it as a fact: in order to stay afloat, CPU manufacturers are simply forced to spend their energy on releasing ever faster (or at least higher-frequency) products.

Intel marked the beginning of February with the introduction of a whole range of new processors. The company released seven new CPUs at once, including:

- Pentium 4 3.40 GHz ("old" Northwood core);

- Pentium 4 Extreme Edition 3.40 GHz;

- as many as four representatives of the new line with the Prescott core (the stress, by the way, is on the first syllable): 3.40E, 3.20E, 3.0E and 2.80E GHz, manufactured on a 90-nanometer process and equipped with a 1 MB L2 cache.

All these CPUs are designed for the 800 MHz bus and support Hyper-Threading technology. In addition, Intel released the Prescott-based Pentium 4 2.8A GHz, also manufactured on the 90 nm process but designed for a 533 MHz FSB and without Hyper-Threading support. According to Intel, this processor was designed specifically for PC OEMs in response to their requests. We would add: and to the delight of overclockers, who will certainly appreciate its overclocking potential.

With the release of the new CPUs, the Pentium 4 family has expanded significantly and now looks as shown in Table 1. Naturally, Intel has no plans for now to end production of the Northwood-based Pentium 4 with 533 and 800 MHz FSB. In addition, several models designed for the 400 MHz bus (five processors from 2A to 2.60 GHz) remain in the line.

In developing the 90-nanometer technology needed for Prescott-class processors to function properly, Intel engineers had to overcome serious obstacles. These obstacles lay not in insufficient resolution of the production equipment, but in problems of a physical nature: transistors this small cannot be manufactured with traditional technologies.

The first to show itself was charge leakage from the transistor gate through the ever thinner dielectric layer between gate and channel. At a feature size of 90 nm, this layer has "degenerated" into a barrier of SiO2 only four atoms (1.2 nm) thick. This created a need for new insulating materials with a higher dielectric constant (high-k dielectrics). Thanks to their higher permittivity, they make it possible to build up a thick insulating layer (up to 3 nm) without impeding the gate's electric field. Such materials are the oxides of hafnium and zirconium. Unfortunately, they turned out to be incompatible with the polysilicon gates currently in use, and the phonon vibrations that arise in the dielectric reduce electron mobility in the channel.
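Why a physically thicker layer does not get in the way of the gate field can be shown with the standard notion of equivalent oxide thickness: a high-k film behaves electrically like a SiO2 film thinner by the ratio of the two dielectric constants. In the sketch below, the 1.2 nm and 3 nm figures come from the text, the value 3.9 for SiO2 is the commonly quoted one, and the value 20 for a hafnium-based oxide is an assumed round number used purely for illustration.

```python
K_SIO2 = 3.9  # relative permittivity of silicon dioxide (commonly quoted value)

def equivalent_oxide_thickness(physical_nm, k_material):
    """Thickness of a SiO2 layer with the same capacitance per unit area."""
    return physical_nm * K_SIO2 / k_material

sio2_layer_nm = 1.2      # gate oxide thickness quoted in the text
high_k_layer_nm = 3.0    # "up to 3 nm" from the text
k_high_k = 20.0          # ASSUMED illustrative value for a hafnium-based oxide

eot = equivalent_oxide_thickness(high_k_layer_nm, k_high_k)
print(f"physical thickness: {high_k_layer_nm} nm, electrically like {eot:.3f} nm of SiO2")
print(f"thinner than the {sio2_layer_nm} nm SiO2 layer electrically: {eot < sio2_layer_nm}")
```

Under these assumptions the film is two and a half times thicker physically, which suppresses leakage, yet it couples the gate to the channel more strongly than the 1.2 nm SiO2 layer does.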

At the boundary with the gate, another phenomenon is observed: a significant rise in the threshold voltage required to change the conductivity state of the transistor channel. The solution was found in the form of a metal gate. Last year, the corporation's specialists finally selected two suitable metals, which made it possible to design new miniature NMOS and PMOS transistors. Which metals they used is still kept secret.

To increase the speed of the transistors (it is determined by the speed of the transition between the open and closed states), Intel resorted to forming the channel from a single crystal of strained silicon. "Strain" here means deformation of the material's crystal lattice. As it turned out, electrons (+10% for NMOS) and holes (+25% for PMOS) pass through a strained lattice with less resistance. Improved mobility increases the transistor's maximum current in the open state.

For NMOS and PMOS transistors, the strained state is achieved by different methods. In the first case, everything is very simple: the transistor is normally "covered" on top with a layer of silicon nitride, which acts as a protective mask, and to create strain in the channel the thickness of the nitride layer is doubled. This puts an additional load on the source and drain regions and, accordingly, stretches and deforms the channel.

PMOS transistors are strained in a different way. First, the source and drain regions are etched away, and then a layer of SiGe is grown in them. Germanium atoms are larger than silicon atoms, which is why germanium interlayers have always been used to create strain in silicon. The distinctive feature of Intel's technology, however, is that in this case the silicon channel is compressed in the longitudinal direction.

The new process also made it possible to increase the number of metallization layers (copper interconnects) from six to seven. Curiously, new-generation lithography tools with a wavelength of 193 nm work "shoulder to shoulder" on the production lines with their predecessors, which use a wavelength of 248 nm. Overall, the share of reused equipment reached 75%, which reduced the cost of upgrading the fabs.

Prescott Features

In the discussions that preceded the release of the processor based on the Prescott core, it was jokingly referred to as "Pentium 5". Actually, this was the typical answer of a computer pro to the question "What is Prescott?". Of course, Intel did not change the trademark, and there were no good reasons for this. Recall the practice of releasing software - where a change in the version number occurs only with a radical revision of the product, while less significant changes are indicated by fractional version numbers. In the processor industry, fractional numbers have not yet been adopted, and the fact that Prescott continued the Pentium 4 line is just a reflection of the fact that the changes are not so radical.

Although processors based on the Prescott core contain many innovations and modifications compared with Northwood, they are based on the same NetBurst architecture, use the same packaging as the previous Pentium 4, fit the same Socket 478 and, in principle, should work on most motherboards that support the 800 MHz FSB and provide the proper supply voltages (naturally, a BIOS update will be required).

We will leave a detailed study of practical issues related to Prescott for a separate material. In the meantime, let's try to consider what changes have appeared in Prescott, and understand how this processor differs from its predecessor and what can be expected as a result.

The main innovations implemented in the Prescott core are as follows:

- Transfer of die production to the 90 nm process technology.

- Longer pipeline (from 20 to 31 stages).

- Doubled L1 data cache (from 8 to 16 KB) and L2 cache (from 512 KB to 1 MB).

- Architecture changes:

  - modified branch prediction unit;

  - improved L1 cache logic (improved data prefetching);

  - new units added to the processor;

  - larger sizes for some buffers.

- Advanced Hyper-Threading Technology.

- Support for the new SSE3 SIMD instruction set (13 new instructions).

The main differences between the three processor cores used in the Pentium 4 are summarized in Table 2. The number of transistors in Prescott has more than doubled, growing by 70 million. Of these, by rough estimate, about 30 million can be attributed to the doubled L2 cache (an additional 512 KB at 6 transistors per cell). Even so, a fairly substantial number remains, and this figure alone gives an indirect idea of the scale of the changes in the core. Note that, despite this increase in the number of elements, the core area not only did not grow but even shrank compared with Northwood.
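The rough estimate for the cache can be checked with simple arithmetic. The sketch below counts only the data array (one 6-transistor cell per bit). The gap between that figure and the "about 30 million" quoted above would have to come from supporting structures such as tags and error-correction bits, which is our assumption and is not stated in the text.

```python
def sram_data_transistors(size_kb, transistors_per_cell=6):
    """Transistors in the data array only: one SRAM cell per stored bit."""
    bits = size_kb * 1024 * 8
    return bits * transistors_per_cell

extra_l2 = sram_data_transistors(512)   # the additional 512 KB of L2
print(f"data array of the extra 512 KB: {extra_l2 / 1e6:.1f} million transistors")

added_total = 70e6         # transistor growth quoted in the text
added_l2_estimate = 30e6   # the text's rough estimate for the extra L2
rest_of_core = added_total - added_l2_estimate
print(f"left for the rest of the core: {rest_of_core / 1e6:.0f} million transistors")
```

So roughly 25 million transistors go into the data cells alone, and on the article's own estimate about 40 million new transistors went into everything other than the L2 cache.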

With the 90 nm process technology everything is, on the whole, clear (at a simplified, "user" level, of course). Smaller transistors make it possible to lower the processor voltage and reduce the power it dissipates, and hence its heat output. This opens the way to a further increase in clock frequencies, which will still be accompanied by an increase in heat output, but from a different, somewhat lower starting point. Note that, given the larger number of transistors in Prescott compared with Northwood, it would be more accurate to speak not of a decrease in dissipated power but of keeping it level or of a smaller increase.
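The interplay of voltage, frequency and transistor count can be sketched with the textbook approximation for switching power, P ~ C * V^2 * f, where C is the switched capacitance (which grows with the number of transistors). The ratios below are invented for illustration and are not Prescott or Northwood data; leakage power, which the text discusses separately, is ignored.

```python
def relative_dynamic_power(cap_ratio, voltage_ratio, freq_ratio):
    """Switching power relative to a baseline, using P ~ C * V^2 * f."""
    return cap_ratio * voltage_ratio ** 2 * freq_ratio

# Hypothetical ratios (new chip / old chip), NOT measured values:
same_design_lower_voltage = relative_dynamic_power(1.0, 0.9, 1.0)
more_transistors_too = relative_dynamic_power(1.25, 0.9, 1.0)

print(f"10% lower voltage alone:        {same_design_lower_voltage:.2f}x")
print(f"plus 25% more switched capacitance: {more_transistors_too:.2f}x")
```

With these made-up numbers the voltage reduction alone would cut power by about a fifth, but the extra capacitance cancels the saving, which matches the remark that power is held level rather than reduced.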

Longer pipeline. As can be seen from Table 2, Prescott's pipeline (31 stages) is more than one and a half times as long as Northwood's (20 stages). What lies behind this is quite clear: this is not the first time Intel has lengthened the pipeline with higher clock frequencies in mind, since it is well known that the longer the pipeline, the higher the frequencies the core can reach. It is hard to say unequivocally whether such a "stretch" is really necessary at the current stage, at frequencies around 3.5 GHz, given that overclocking enthusiasts have pushed the Pentium 4 (Northwood) to even higher values. But sooner or later an increase in the number of stages would have been inevitable, so why not combine it with the release of a new core?

Larger caches and buffers. This point is directly related to the previous one. To keep a long pipeline busy at high frequencies, it is desirable to have a large "stockroom close at hand" in the form of a cache, which reduces the number of stalls in which the processor waits for data to be loaded from memory. In addition, it is well known that, other things being equal, of two processors with different pipeline lengths the one with the shorter pipeline will be faster. When a branch is mispredicted, the processor has to flush its pipeline and refill it from scratch, and the more stages the pipeline has, the more painful such mistakes are. They cannot be eliminated completely, of course, so at equal frequencies Prescott would have turned out slower than Northwood... if it did not have a larger L2 cache, which largely compensates for the lag. Naturally, everything here depends on the specifics of particular applications, which we will try to check in the practical part.
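The cost of a deeper pipeline can be sketched with a common textbook model: each mispredicted branch adds a flush penalty to the average cycles per instruction (CPI). The sketch assumes, as a simplification, that the penalty equals the number of pipeline stages; the workload numbers (20% branches, 5% mispredicted) are hypothetical.

```python
def effective_cpi(base_cpi, branch_fraction, mispredict_rate, flush_penalty_cycles):
    """Average cycles per instruction once misprediction flushes are included."""
    return base_cpi + branch_fraction * mispredict_rate * flush_penalty_cycles

# Same hypothetical workload on a 20-stage and a 31-stage pipeline.
shallow = effective_cpi(1.0, 0.20, 0.05, 20)
deep = effective_cpi(1.0, 0.20, 0.05, 31)

print(f"20-stage pipeline: CPI = {shallow:.2f}")
print(f"31-stage pipeline: CPI = {deep:.2f}")
print(f"slowdown at equal frequency: {(deep / shallow - 1) * 100:.1f}%")
```

In this model the deeper pipeline loses several percent at equal frequency, which is the gap that a larger cache or a better branch predictor has to win back.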

As mentioned above, Prescott has enlarged not only the L2 cache but also the L1 data cache, which has grown from 8 to 16 KB. Its organization and part of its logic have also changed. For example, a force forwarding mechanism has been introduced that reduces latency in cases where a dependent cache load cannot be executed speculatively before the preceding cache load completes.

Besides the cache sizes, the capacity of the two schedulers that hold the micro-operations (uops) used by x87/SSE/SSE2/SSE3 instructions has also been increased. In particular, this makes it possible to find parallelism in multimedia algorithms more efficiently and to execute them faster.

Actually, we have already touched upon some of the innovations in the Pentium 4 architecture implemented in Prescott, since they are "scattered" over the processor core and affect many of its blocks. The next major change is...

Modified branch prediction unit. As is well known, the accuracy of this unit is critical to the performance of a modern processor. By "looking ahead" at the program code that follows the code currently being executed, the processor can execute parts of it in advance; this is the well-known speculative execution. If the program reaches a fork caused by a conditional branch (if-then-else), the question arises of which of the two paths it is "better" to execute in advance. The algorithms in Northwood were relatively simple: backward branches were assumed to be taken, forward branches not taken. This mostly worked for loops, but not for other kinds of branches. Prescott uses the concept of branch distance: studies have shown that if the branch distance exceeds a certain limit, the branch will most likely not be taken (so there is no need to execute that part of the code speculatively). Prescott also analyzes the branch conditions themselves more thoroughly, and uses this analysis to decide how likely the branch is to be taken. Besides the static prediction algorithms, the dynamic algorithms have also changed (incidentally, some of the new ideas were borrowed from the mobile Pentium M).
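The static rules described above can be sketched as follows. This is only an illustration of the idea, not Intel's actual logic: the function name and the threshold value are hypothetical, and the sketch assumes the distance limit is applied to backward branches.

```python
def predict_taken(branch_addr, target_addr, max_distance=None):
    """Static branch prediction sketch.

    Simple rule: backward branches are predicted taken (typical loops),
    forward branches are predicted not taken.
    Distance rule (optional): a backward branch whose distance exceeds
    max_distance is predicted not taken.
    """
    displacement = target_addr - branch_addr
    if displacement >= 0:
        return False  # forward branch: predicted not taken
    if max_distance is not None and -displacement > max_distance:
        return False  # very long backward branch: unlikely to be a loop
    return True       # short backward branch: predicted taken

# Simple rule only: any backward branch is predicted taken.
print(predict_taken(0x1000, 0x0FF0))
# With a hypothetical 256-byte limit, a far backward branch is not.
print(predict_taken(0x9000, 0x1000, max_distance=256))
```

Passing `max_distance=None` reproduces the simpler backward-taken, forward-not-taken rule, so the two behaviours can be compared on the same branch addresses.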

New units in the processor. The two new units in Prescott are a shifter/rotator for bit shifts and rotations and a dedicated integer multiplier. The first makes it possible to perform the most typical shift operations on one of the two fast ALUs running at twice the core frequency (in previous versions of the Pentium 4 these were executed as complex integer operations and took several cycles). Integer multiplication previously used FPU resources, which was rather slow: the data had to be transferred to the FPU, multiplied there relatively slowly, and the result transferred back. To speed up these operations, Prescott has gained a new unit dedicated to integer multiplication.

Improved Hyper-Threading. All the innovations listed above were, of course, introduced in Prescott for a reason. According to Intel's engineers, most of the modifications to the logic of the caches, instruction queues and so on are in one way or another tied to processor speed with Hyper-Threading, that is, when several program threads run simultaneously. These innovations have only a minor effect on the performance of single-threaded applications. Prescott has also extended the set of operations that are "allowed" to execute in parallel (for example, a page table walk and a memory access that splits a cache line). Again, for single-threaded applications the inability to overlap such operations had practically no effect on performance, whereas with two threads running this restriction often became a bottleneck. Another example: when Northwood had a cache miss and needed to read data from RAM, subsequent cache lookups were delayed until that read completed. As a result, one application that missed the cache often could significantly slow down the other threads. In Prescott this conflict is resolved, and the operations can proceed in parallel. The logic for arbitration and resource sharing between threads has also been reworked in Prescott to increase overall performance.

SSE3 instructions. As we remember, Intel last extended its SIMD instruction set when it released the first Pentium 4 (Willamette) and implemented SSE2 in it. The next extension, named SSE3 and containing 13 new instructions, arrives with Prescott. All but three of them use the SSE registers, and they are designed to improve performance in the following areas:

- fast conversion of a floating-point number to an integer (fisttp);

- complex arithmetic (addsubps, addsubpd, movsldup, movshdup, movddup);

- video encoding (lddqu);

- graphics processing (haddps, hsubps, haddpd, hsubpd);

- thread synchronization (monitor, mwait).

Naturally, a detailed review of all the new instructions is beyond the scope of this article; that information is given in the relevant programmer's manual. The instructions in the first four categories serve both to speed up the operations themselves and to make them more "economical" in their use of processor resources (and therefore to help Hyper-Threading and the speculative execution mechanism work better). The program code also becomes noticeably shorter and, importantly, simpler. For example, fisttp, the instruction for fast conversion of a floating-point number to an integer, replaces seven (!) instructions of traditional code. Even compared with SSE2 instructions (which themselves speed up execution and reduce code size), SSE3 instructions give significant savings in many cases. The two instructions of the last group, monitor and mwait, allow an application (more precisely, a thread) to tell the processor that it is not currently doing useful work and is waiting for an event (for example, a write to a specific memory location, an interrupt, or an exception). In that case the processor can be put into a low-power mode or, with Hyper-Threading, give all its resources to the other thread. In general, SSE3 opens up new opportunities for code optimization. The problem, as always in such cases, is the same: until the new instruction set becomes a generally accepted standard, software developers will have to maintain two code paths (with and without SSE3) so that applications work on all processors...
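To make the arithmetic instructions listed above more concrete, here is a scalar sketch of what a few of them compute on 4-element single-precision vectors. Python lists stand in for SSE registers, and the helper `complex_mul_packed` is a hypothetical illustration of why the instructions are grouped under "complex arithmetic"; real code would use compiler intrinsics or assembly.

```python
def haddps(a, b):
    """Horizontal add: sums of adjacent pairs, first from a, then from b."""
    return [a[0] + a[1], a[2] + a[3], b[0] + b[1], b[2] + b[3]]

def addsubps(a, b):
    """Subtract in even positions, add in odd positions."""
    return [a[0] - b[0], a[1] + b[1], a[2] - b[2], a[3] + b[3]]

def movsldup(a):
    """Duplicate the even-indexed elements."""
    return [a[0], a[0], a[2], a[2]]

def movshdup(a):
    """Duplicate the odd-indexed elements."""
    return [a[1], a[1], a[3], a[3]]

def complex_mul_packed(a, b):
    """Multiply two pairs of complex numbers stored as [re0, im0, re1, im1]."""
    b_swapped = [b[1], b[0], b[3], b[2]]                      # swap re/im in b
    re_part = [x * y for x, y in zip(movsldup(a), b)]          # a.re * (b.re, b.im)
    im_part = [x * y for x, y in zip(movshdup(a), b_swapped)]  # a.im * (b.im, b.re)
    return addsubps(re_part, im_part)

# (1+2i)*(5+6i) and (3+4i)*(7+8i)
print(complex_mul_packed([1, 2, 3, 4], [5, 6, 7, 8]))  # [-7, 16, -11, 52]
```

The alternating subtract/add pattern of addsubps is exactly what the real and imaginary parts of a complex product need, which is why the whole multiplication reduces to a handful of vector operations.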

Are you coming?..

On the whole, the volume of innovations implemented in the Prescott core can be called significant. It falls short of a "real Pentium 5", but it may well come close to a "four and a half". The transition from the Northwood core to Prescott is essentially an evolutionary step that fits well into Intel's overall strategy. The gradual changes in the Pentium 4 architecture are clearly visible in the diagram: the architecture is modified and supplemented with new features, and the processor is steadily optimized for a particular set of software.

So what can you expect from Prescott? Perhaps first of all (although this may seem somewhat strange), new frequencies. Intel itself admits that at equal frequencies the performance of Prescott and Northwood will differ little. The positive effect of the large L2 cache and the other Prescott innovations is largely cancelled out by its much longer pipeline, which reacts painfully to branch mispredictions. And even though the branch prediction unit has been improved, it still cannot be perfect. The main advantage of Prescott lies elsewhere: the new core will allow frequencies to keep rising, to values that were unattainable with Northwood. According to Intel's plans, the Prescott core is meant to last two years, until it is replaced by the next core manufactured on a 65 nm (0.065 micron) process.

The processor now released on the new Prescott core therefore does not claim to be the performance champion right from the start; it should show itself in all its glory later on. The positioning of the processor confirms this: the Pentium 4 on the Prescott core is intended for mainstream systems, while the top CPU was and remains the Pentium 4 Extreme Edition. Incidentally, although the frequency bar for Intel processors nominally rose to 3.4 GHz with the release of Prescott, the first OEM systems based on the 3.4 GHz Pentium 4 on the new core will appear somewhat later this quarter (commercial shipments of Prescott itself began back in the fourth quarter of 2003).

Another area where Prescott can (and probably will) shine is SSE3-optimized software. The optimization process has already begun, and today there are at least five applications that support the new instruction set: MainConcept (MPEG-2/4), xMPEG, Ligos (MPEG-2/4), Real (RV9), and On2 (VP5/VP6). During 2004, SSE3 support should appear in packages such as Adobe Premiere, Pinnacle MPEG Encoder, Sony DVD Source Creator, Ulead MediaStudio and VideoStudio, various audio and video codecs, and so on. Recalling how optimization for SSE and SSE2 went, one can expect that we will see results from SSE3, but by no means immediately. Again, this is in a sense "a reserve for the future".

But what about "the other side of the front line"? Intel's main competitor is still going its own way, moving further and further from the "general line". AMD continues to build up "raw performance" while making do with significantly lower frequencies. The memory controller, which moved from the northbridge into the processor in the Athlon 64, added fuel to the fire by providing unprecedented speed of access to RAM. And recently a processor with a 3400+ rating was released (no, nobody is claiming that the rating corresponds exactly to the frequency of the competitor's product...).

However, Intel and AMD are now in roughly the same position: their top-end processors are waiting for suitably optimized software in order to show what they can really do. Intel is moving more and more "into multimedia": Pentium 4 performance is more than enough for office software, and for Prescott to realize its potential it needs optimized multimedia applications (and/or high clock speeds, which will certainly come). It is worth noting that porting codecs to SSE3 is perhaps not the most difficult task, and every application that uses such codecs will feel the effect immediately (without the applications themselves having to be reworked at all).

On the other hand, a 64-bit version of Windows for the AMD64 platform is due in the middle of 2004, and that is where the capabilities of the Athlon 64 should show. Of course, the usual question will arise about the set of applications for the new OS, without which the system remains practically useless. But remember that at least the same codecs, compiled for the 64-bit Athlon, already exist. So there is a chance that the AMD platform will soon have somewhere to prove itself. On the whole, it seems that for now the titans are only flexing their muscles, building fortifications and securing their rear before the main... no, rather, the next battle...

Introduction