To start with, NVIDIA has put the G80 on 2 video cards: the GeForce 8800 GTX and the GeForce 8800 GTS.

GeForce 8800 series graphics card specifications

| Specification | GeForce 8800 GTX | GeForce 8800 GTS |
|---|---|---|
| Number of transistors | 681 million | 681 million |
| Core frequency (including allocator, texture units, ROPs) | 575 MHz | 500 MHz |
| Shader (stream processor) frequency | 1350 MHz | 1200 MHz |
| Number of shaders (stream processors) | 128 | 96 |
| Memory frequency | 900 MHz (1.8 GHz effective) | 800 MHz (1.6 GHz effective) |
| Memory interface | 384 bit | 320 bit |
| Memory bandwidth | 86.4 GB/s | 64 GB/s |
| Number of ROPs | 24 | 20 |
| Memory | 768 MB | 640 MB |

As you can see, the GeForce 8800 GTX and 8800 GTS have the same transistor count, because both use exactly the same G80 GPU. As already mentioned, the main difference between the two variants is that 2 banks of stream processors, 32 shaders in total, are disabled on the GTS. This reduces the number of working shader units from 128 on the GeForce 8800 GTX to 96 on the GeForce 8800 GTS. NVIDIA also disabled 1 ROP partition, leaving 20 ROPs instead of 24.

The core and memory clocks of the two cards also differ slightly: the GeForce 8800 GTX core runs at 575 MHz, the GeForce 8800 GTS core at 500 MHz. The GTX shader units operate at 1350 MHz, those of the GTS at 1200 MHz. For the GeForce 8800 GTS, NVIDIA also uses a narrower 320-bit memory interface and 640 MB of slower memory running at 800 MHz. The GeForce 8800 GTX has a 384-bit memory interface and 768 MB of memory at 900 MHz. And, of course, a completely different price.
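The bandwidth figures in the specification table follow from the bus width and the effective memory clock. Here is a minimal sketch of that arithmetic, assuming peak bandwidth is simply bytes per transfer multiplied by transfers per second; the function name `memory_bandwidth_gb_s` is ours and purely illustrative.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, effective_mhz: float) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8      # bits -> bytes
    transfers_per_second = effective_mhz * 1e6   # MHz -> Hz
    return bytes_per_transfer * transfers_per_second / 1e9


# Bus width (bits) and effective memory clock (MHz), taken from the table.
cards = {
    "GeForce 8800 GTX": (384, 1800),
    "GeForce 8800 GTS": (320, 1600),
}

for name, (bus_bits, mhz) in cards.items():
    print(f"{name}: {memory_bandwidth_gb_s(bus_bits, mhz):.1f} GB/s")
```

With the table's inputs this reproduces the listed 86.4 GB/s and 64 GB/s.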

The video cards themselves are very different:

As you can see in these photos, the reference GeForce 8800 cards are black (a first for NVIDIA). With the cooling module fitted, both the GeForce 8800 GTX and 8800 GTS occupy two slots. The GeForce 8800 GTX is somewhat longer than the GeForce 8800 GTS: 267 mm versus 229 mm. As announced earlier, the GeForce 8800 GTX has 2 PCIe power connectors. Why 2? The maximum power consumption of the GeForce 8800 GTX is 177 W. However, NVIDIA says this figure is reached only in the extreme case when all functional blocks of the GPU are fully loaded; in ordinary games during testing, the card drew 116 to 120 W on average and 145 W at most.

Each external PCIe power connector on the video card is rated for a maximum of 75 W, and the PCIe slot itself is also rated for a maximum of 75 W. One external connector plus the slot therefore delivers at most 150 W, which is not enough to supply 177 W, so NVIDIA had to fit 2 external PCIe power connectors. The second connector gives the 8800 GTX solid headroom. By the way, the maximum power consumption of the 8800 GTS is 147 W, so it can get by with one PCIe power connector.
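The connector count can be worked out from the power limits quoted above. The sketch below assumes the slot is always used first and that each external connector adds a flat 75 W; the helper names `connectors_needed` and `headroom_w` are hypothetical.

```python
import math

SLOT_LIMIT_W = 75        # PCIe slot maximum, as quoted in the text
CONNECTOR_LIMIT_W = 75   # each external PCIe power connector, as quoted


def connectors_needed(max_draw_w: float) -> int:
    """Smallest number of external connectors that covers the peak draw."""
    remaining = max_draw_w - SLOT_LIMIT_W
    if remaining <= 0:
        return 0
    return math.ceil(remaining / CONNECTOR_LIMIT_W)


def headroom_w(max_draw_w: float, connectors: int) -> float:
    """Spare power budget with the given number of external connectors."""
    return SLOT_LIMIT_W + connectors * CONNECTOR_LIMIT_W - max_draw_w


for name, draw in {"GeForce 8800 GTX": 177, "GeForce 8800 GTS": 147}.items():
    n = connectors_needed(draw)
    print(f"{name}: {n} connector(s), {headroom_w(draw, n)} W headroom")
```

Under these assumptions the GTX needs two connectors and keeps 48 W in reserve, while the GTS fits within one connector with only 3 W to spare.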

Another feature added to the design of the reference GeForce 8800 GTX is a second SLI connector, a first for an NVIDIA GPU. NVIDIA has not officially announced the purpose of the second SLI connector, but journalists managed to get the following information from the developers: “The second SLI connector on the GeForce 8800 GTX is intended for hardware support of a possible expansion of the SLI configuration. Only one SLI connector is used with current drivers. Users can connect an SLI bridge to both the first and second contact groups.”

Based on this, and on the fact that nForce 680i SLI motherboards come with three PCI Express (PEG) slots, we can conclude that NVIDIA plans to support three-card SLI in the near future. Another possibility is extra capacity for SLI physics, but this does not explain why the GeForce 8800 GTS lacks a second SLI connector.

It can be assumed that NVIDIA reserves its GX2 “Quad SLI” technology for the less powerful GeForce 8800 GTS, while the more powerful GeForce 8800 GTX will operate in a triple SLI configuration.

If you remember, the original NVIDIA Quad SLI video cards are closer in their characteristics to the GeForce 7900 GT than to the GeForce 7900 GTX, since the 7900 GT has lower power consumption and heat dissipation. It is natural to assume that NVIDIA will follow the same path with the GeForce 8800. Gamers whose motherboards have three PEG slots will be able to speed up the graphics subsystem by assembling a triple-SLI 8800 GTX configuration, which in some cases will give them better performance than a Quad SLI system, judging by the characteristics of the 8800 GTS.

Again, this is just an assumption.

The cooler of the GeForce 8800 GTS and 8800 GTX is a two-slot, ducted design that exhausts hot air from the GPU outside the computer case. It consists of a large aluminum heatsink, copper and aluminum heatpipes, and a copper plate pressed against the GPU. The whole structure is cooled by a large radial fan, which looks a little intimidating but is actually quite quiet. The cooling system of the 8800 GTX is similar to that of the 8800 GTS, except that the former has a slightly longer heatsink.

In general, the new cooler handles the GPU quite well and is almost silent, much like the coolers of the GeForce 7900 GTX and 7800 GTX 512MB, although the GeForce 8800 GTS and 8800 GTX are slightly more audible. In some cases you will have to listen closely to hear the graphics card fan at all.

Production

All production of the GeForce 8800 GTX and 8800 GTS is carried out under an NVIDIA contract. This means that whether you buy a graphics card from ASUS, EVGA, PNY, XFX or any other manufacturer, they are all made by the same company. NVIDIA does not even allow manufacturers to overclock the first batches of GeForce 8800 GTX and GTS video cards: they all go on sale with the same clock speeds regardless of the manufacturer. But they are allowed to install their own cooling systems.

For example, EVGA has already released its e-GeForce 8800 GTX ACS3 Edition with its unique ACS3 cooler. The ACS3 video card is hidden in a single large aluminum cocoon. It bears the letters E-V-G-A. For additional cooling, EVGA has placed an additional heatsink on the back of the graphics card, right in front of the G80 GPU.

Apart from cooling, manufacturers of the first GeForce 8800 video cards can differentiate their products only through warranty terms and the bundle of games and accessories. For example, EVGA bundles its graphics cards with the game Dark Messiah, while BFG sells its GeForce 8800 GTS with a BFG T-shirt and mouse pad.

It will be interesting to see what happens next: many NVIDIA partners believe that for later releases of GeForce 8800 video cards NVIDIA's restrictions will not be so strict, and they will be able to compete in overclocking.

Since all the cards come off the same production line, every GeForce 8800 has 2 dual-link DVI outputs and supports HDCP. In addition, it has become known that NVIDIA does not plan to change the memory capacity of the GeForce 8800 GTX and GTS (for example, a 256 MB GeForce 8800 GTS or a 512 MB 8800 GTX). At least for now, the standard configuration is 768 MB for the GeForce 8800 GTX and 640 MB for the GeForce 8800 GTS. NVIDIA also has no plans to make an AGP version of the GeForce 8800 GTX / GTS.

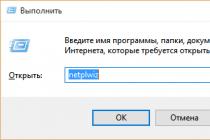

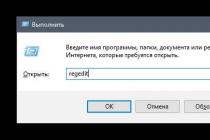

Driver for 8800

NVIDIA has made several changes to the GeForce 8800 driver that deserve mention. First of all, the traditional Coolbits overclocking utility has been removed and replaced by NVIDIA nTune. That is, if you want to overclock a GeForce 8800 video card, you will need to download the nTune utility. This is probably good for owners of motherboards based on an nForce chipset, since nTune can be used not only for overclocking a video card but also for configuring the system. Everyone else, for example those who have upgraded to Core 2 with a motherboard on the 975X or P965 chipset, will have to download a 30 MB application just to overclock the video card.

Another change we noticed in the new driver is that there is no option to switch to the classic NVIDIA control panel. I would like to believe that NVIDIA will return this feature to its driver, since many users preferred it to the new NVIDIA control panel interface.

In our last article, we looked at the lower price range, where new products from AMD have recently appeared: the Radeon HD 3400 and HD 3600 series. Today we will look a little higher up the hierarchy and talk about the senior models. The hero of this review is the GeForce 8800 GTS 512 MB.

What is the GeForce 8800 GTS 512 MB? The name, frankly, does not give an unambiguous answer. On the one hand, it recalls the earlier top solutions based on the G80 chip. On the other hand, the memory size is 512 MB, less than that of those predecessors. As a result, the GTS index coupled with the number 512 simply misleads a potential buyer. In fact this is, if not the fastest, then certainly one of the fastest graphics solutions NVIDIA has offered so far. And the card is based not on the outgoing G80 but on the much more advanced G92, the same chip that powers the GeForce 8800 GT. To make the essence of the GeForce 8800 GTS 512 MB clearer still, let's go straight to the specifications.

Comparative characteristics of GeForce 8800 GTS 512 MB

| Model | Radeon HD 3870 | GeForce 8800 GT | GeForce 8800 GTS 640 MB | GeForce 8800 GTS 512 MB | GeForce 8800 GTX |
|---|---|---|---|---|---|
| Core codename | RV670 | G92 | G80 | G92 | G80 |
| Process technology, nm | 65 | 65 | 90 | 65 | 90 |
| Number of stream processors | 320 | 112 | 96 | 128 | 128 |
| Number of texture units | 16 | 56 | 24 | 64 | 32 |
| Number of blending units (ROPs) | 16 | 16 | 20 | 16 | 24 |
| Core frequency, MHz | 775 | 600 | 513 | 650 | 575 |
| Shader unit frequency, MHz | 775 | 1500 | 1180 | 1625 | 1350 |
| Effective video memory frequency, MHz | 2250 | 1800 | 1600 | 1940 | 1800 |
| Video memory size, MB | 512 | 256 / 512 / 1024 | 320 / 640 | 512 | 768 |
| Memory bus width, bit | 256 | 256 | 320 | 256 | 384 |
| Video memory type | GDDR3 / GDDR4 | GDDR3 | GDDR3 | GDDR3 | GDDR3 |
| Interface | PCI-Express 2.0 | PCI-Express 2.0 | PCI-Express | PCI-Express 2.0 | PCI-Express |

Take a look at the table above. Based on these data, we can conclude that this is a well-rounded, solid solution that can compete on equal terms with the Californian company's current flagships. In essence, it is a full-fledged G92 chip with raised frequencies and very fast GDDR3 memory running at almost 2 GHz. Incidentally, in our review of the GeForce 8800 GT we mentioned that the G92 was originally meant to have exactly 128 stream processors and 64 texture units. As you can see, the GT model received a somewhat cut-down chip for a reason: this move helped separate the segments of high-performance video cards and left room for an even more capable product. Moreover, G92 production is by now well established, and the number of chips able to run above 600 MHz is quite large. Even in its first revisions, though, the G92 easily reached 700 MHz, and you can guess that this is no longer the limit. We will have a chance to confirm that later in this article.
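As a rough way to read the table, theoretical fill rates are often estimated by multiplying the number of units by the core clock. The sketch below applies that common rule of thumb to the table's figures. It is our simplification and not something measured in this review, it ignores architectural differences between the chips, and the names `Card` and `fill_rates` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    texture_units: int
    rops: int       # ROPs, the table's blending row
    core_mhz: int


def fill_rates(card: Card) -> tuple[float, float]:
    """Return (texel, pixel) fill rate in billions of samples per second."""
    texel = card.texture_units * card.core_mhz / 1000
    pixel = card.rops * card.core_mhz / 1000
    return texel, pixel


# Unit counts and core clocks copied from the comparison table.
CARDS = [
    Card("Radeon HD 3870", 16, 16, 775),
    Card("GeForce 8800 GT", 56, 16, 600),
    Card("GeForce 8800 GTS 640 MB", 24, 20, 513),
    Card("GeForce 8800 GTS 512 MB", 64, 16, 650),
    Card("GeForce 8800 GTX", 32, 24, 575),
]

for card in CARDS:
    texel, pixel = fill_rates(card)
    print(f"{card.name:<24} {texel:5.1f} GTexel/s  {pixel:5.1f} GPixel/s")
```

By this estimate the GeForce 8800 GTS 512 MB leads in texturing rate thanks to its 64 texture units, while the GeForce 8800 GTX keeps the edge in pixel rate because of its 24 ROPs.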

So, we have before us a product from XFX - a video card GeForce 8800 GTS 512 MB. It is this model that will become the object of our close study today.

Finally, here is the board itself. The XFX product is based on the reference design, so everything said about it applies to most solutions from other companies. After all, it is no secret that today the overwhelming majority of manufacturers base their products on exactly what NVIDIA offers.

Regarding the GeForce 8800 GTS 512 MB, it should be noted that the printed circuit board is borrowed from the GeForce 8800 GT. It has been greatly simplified as compared to video cards based on the G80, but it fully meets the requirements of the new architecture. As a result, we can conclude that the release of the GeForce 8800 GTS 512 MB was hardly complicated by anything. We have the same PCB and almost the same chip as its predecessor with the GT index.

As for its dimensions, the card fully matches the GeForce 8800 GTS 640 MB, as you can see for yourself in the photo.

NVIDIA saw the need to make changes to the cooling system. It has undergone serious changes and now resembles in its appearance the cooler of the classic GeForce 8800 GTS 640 MB, rather than the GeForce 8800 GT.

The design of the cooler is quite simple. It consists of aluminum fins cooled by a turbine. The fins are pierced by copper heat pipes, each 6 mm in diameter. Contact with the chip is made through a copper base. Heat is drawn from the base and carried to the heatsink, where it is dissipated.

Our GeForce 8800 GTS carries a revision A2 G92 chip made in Taiwan. The memory consists of 8 Qimonda chips with a 1.0 ns access time.

Finally, we inserted the graphics card into the PCI-E slot. The board was detected without any problems and the drivers installed without complaint. The auxiliary software gave no trouble either: GPU-Z displayed all the necessary information correctly.

The GPU frequency is 678 MHz, the memory runs at 1970 MHz and the shader domain at 1700 MHz. So once again XFX stays true to its habit of raising frequencies above the reference values, although this time the increase is small. The company's range usually includes many cards of the same series that differ in clocks, and some of them surely run faster still.

Riva Tuner also did not let us down and displayed all the information on the monitoring.

After that, we decided to check how well the stock cooler does its job. The GeForce 8800 GT had serious problems here: its cooling system had a rather thin efficiency margin. That was enough to run the board at stock clocks, but anything more required replacing the cooler.

The GeForce 8800 GTS, however, has no such trouble. After a long load, the temperature rose only to 61 °C, a great result for such a powerful solution. But there is a fly in every ointment, and this case is no exception. Unfortunately, the cooling system is very noisy. Moreover, the noise becomes rather unpleasant as early as 55 °C, which surprised us. After all, the chip has barely warmed up, so why does the turbine spin up so hard?

Here Riva Tuner came to our rescue. This utility is useful not only because it lets you overclock and tune the video card, but also because it can control the fan speed, provided, of course, that the board supports this option.

We decided to set the fan speed to 48%: at this setting the card is absolutely quiet and you can completely forget about its noise.

However, these adjustments raised the temperature: this time it reached 75 °C. That is a bit high, but in our opinion it is worth it to get rid of the howling turbine.

However, we did not stop there. We decided to check how the video card will behave when installing any alternative cooling system.

The choice fell on the Thermaltake DuOrb, an efficient and stylish cooler that had earlier coped well with cooling the GeForce 8800 GTX.

The temperature dropped by 1 °C relative to the stock cooler running at automatic speed (under load, the speed automatically rose to 78%). The difference in efficiency is, of course, negligible. However, the noise level is significantly lower. True, the Thermaltake DuOrb cannot be called silent either.

As a result, the new stock cooler from NVIDIA leaves only favorable impressions. It is very effective and, moreover, allows any fan speed to be set, which will suit lovers of silence. Even then, although it cools less well, it still copes with the task.

Finally, let's mention the overclocking potential. By the way, it turned out to be the same for a regular cooler and for DuOrb. The core was overclocked to 805 MHz, the shader domain to 1944 MHz, and the memory to 2200 MHz. It is good news that the top solution, which initially has high frequencies, still has a solid margin, and this applies to both the chip and the memory.
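To put these overclocking results in perspective, the gain can be expressed as a percentage over both the reference clocks from the comparison table and the XFX factory clocks reported by GPU-Z. This is a minimal sketch; the helper `gain_percent` and the dictionary names are ours.

```python
def gain_percent(base_mhz: float, overclocked_mhz: float) -> float:
    """Relative frequency increase over the baseline, in percent."""
    return (overclocked_mhz - base_mhz) / base_mhz * 100


# All frequencies in MHz, taken from the article.
REFERENCE = {"core": 650, "shader": 1625, "memory": 1940}     # comparison table
XFX_FACTORY = {"core": 678, "shader": 1700, "memory": 1970}   # GPU-Z readout
OVERCLOCKED = {"core": 805, "shader": 1944, "memory": 2200}   # our result

for domain, target in OVERCLOCKED.items():
    vs_reference = gain_percent(REFERENCE[domain], target)
    vs_factory = gain_percent(XFX_FACTORY[domain], target)
    print(f"{domain:<6} +{vs_reference:.1f}% over reference, "
          f"+{vs_factory:.1f}% over XFX factory clocks")
```

Measured this way, the core gains roughly 24% over the reference 650 MHz, and the memory roughly 13% over the reference 1940 MHz.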

So, we have reviewed the GeForce 8800 GTS 512 MB video card from XFX. At this point we could move smoothly on to the tests, but we decided to tell you about another interesting solution, this time from the ATI camp, manufactured by PowerColor.

The main feature of this video card is its GDDR3 memory. Let me remind you that most companies ship the Radeon HD 3870 with GDDR4, the same type of memory used on the reference version. However, PowerColor quite often takes non-standard routes and gives its products features that set them apart from the rest.

However, the memory type is not the only difference between the PowerColor board and other vendors' solutions. The company also decided to equip the video card not with the standard turbine, which is itself rather efficient, but with an even more capable product from ZeroTherm: the GX810 cooler.

The PCB has been simplified. For the most part, this affected the power supply circuitry. There should be four ceramic capacitors in this area, and on most boards they sit in a row close to each other. The PowerColor board has only three: two are where they should be, and the third has been moved to the top.

The presence of an aluminum radiator on the power circuits somewhat smoothes the impression of such features. During load, they are capable of seriously heating up, so cooling will not be superfluous.

The memory chips are covered with a copper plate. At first glance this is good news, since the plate can lower the temperature of the memory as it heats up. In practice it is not so simple. Firstly, we could not remove the plate on this sample of the card; apparently it is held firmly by thermal glue. Perhaps it could be removed with some force, but we did not push our luck. As a result, we never found out what kind of memory is installed on the card.

The second thing that PowerColor cannot be praised for is that the cooler has a design capable of directing air onto the PCB, thereby reducing its temperature. However, the plate partially prevents this blowing. This is not to say that it completely interferes. But any memory heatsinks would be a much better option. Or, at least, you should not use a thermal interface, after which the plate cannot be removed.

The cooler itself was not without problems. Yes, the ZeroTherm GX810 is a good cooler and its efficiency is excellent, largely thanks to an all-copper bowl-shaped heatsink with a long copper heat pipe running through all the fins. But the problem is that the fan connector on the board is a 2-pin one. As a result, the cooler always works in one mode, namely the maximum. From the moment the computer is turned on, the fan runs at full speed and makes very serious noise. One could put up with this if it applied only to 3D (after all, we have known the noisier Radeon X1800 and X1900), but when the cooler is this loud in 2D, it cannot but irritate.

The only good news is that this problem can be solved. After all, you can use the speed controller. For example, we did just that. At the minimum speed the cooler is silent, but the efficiency immediately drops dramatically. There is another option - to change the cooling. We tried it too. To do this, we took the same Thermaltake DuOrb and found that installation is not possible.

The mounting holes do line up, but the large capacitors on the PCB get in the way of the fasteners. As a result, we end our examination of the board on a rather negative note. Let's move on to how the video card behaves when installed in the computer.

The core frequency is 775 MHz, which matches the reference. But the memory frequency is much lower than the usual value: only 1800 MHz. However, this is not particularly surprising, since we are dealing with GDDR3, for which this is quite an adequate figure. Also, keep in mind that GDDR4 has significantly higher latencies. Thus, the final performance will be at approximately the same level, and the difference will only show up in bandwidth-hungry applications.

By the way, note that the board has BIOS 10.065. This is fairly old firmware and it probably contains the PLL VCO bug, because of which you will not be able to overclock the card above 862 MHz. The fix is straightforward, however: just update the BIOS.

Riva Tuner displayed all the necessary information about frequencies and temperatures without any problems.

The load temperature was 55 °C, which means that the card didn't get very hot. The only question that arises is: if there is such a margin of efficiency, why run the cooler only at maximum speed?

Now about overclocking. It is no secret that the frequency headroom of the RV670 is rather small, but in our sample it turned out to be tiny. We managed to raise the core frequency by only 8 MHz, to 783 MHz. The memory did much better, reaching 2160 MHz. From this we can conclude that the card most likely uses memory with a 1.0 ns access time.

Researching the performance of video cards

You can see the list of test participants below:

- GeForce 8800 GTS 512 MB (650/1625/1940);

- GeForce 8800 GTS 640 MB (513/1180/1600);

- Radeon HD 3870 DDR3 512MB (775/1800);

- Radeon HD 3850 512MB (670/1650).

Test stand

To find out the performance level of the video cards discussed above, we assembled a test system with the following configuration:

- Processor - Core 2 Duo E6550 (333 × 7, L2 = 4096 KB) @ (456 × 7 = 3192 MHz);

- Cooling system - Xigmatek HDT-S1283;

- Thermal interface - Arctic Cooling MX-2;

- RAM - Corsair TWIN2X6400C4-2048;

- Motherboard - Asus P5B Deluxe (Bios 1206);

- Power supply unit - Silverstone DA850 (850 W);

- Hard drive - Serial-ATA Western Digital 500 GB, 7200 rpm;

- Operating system - Windows XP Service Pack 2;

- Video driver - Forceware 169.21 for NVIDIA video cards, Catalyst 8.3 for AMD boards;

- Monitor - Benq FP91GP.

Drivers used

The ATI Catalyst driver was configured as follows:

- Catalyst A.I.: Standard;

- MipMap Detail Level: High Quality;

- Wait for vertical refresh: Always off;

- Adaptive antialiasing: Off;

- Temporal antialiasing: Off;

The ForceWare driver, in turn, was used with the following settings:

- Texture Filtering: High quality;

- Anisotropic sample optimization: Off;

- Trilinear optimization: Off;

- Threaded optimization: On;

- Gamma correct antialiasing: On;

- Transparency antialiasing: Off;

- Vertical sync: Force off;

- Other settings: default.

Test packages used:

- Doom, build 1.1: testing in the BenchemAll utility. For the test, we recorded a demo on one of the game's levels;

- Prey, build 1.3: testing through HOC Benchmark, HWzone demo. Boost Graphics switched off. Image quality: Highest. The demo was run twice;

- F.E.A.R., build 1.0.8: testing through the built-in benchmark. Soft shadows turned off;

- Need For Speed Carbon, build 1.4: maximum quality settings. Motion blur disabled. Testing was done with Fraps;

- TimeShift, build 1.2: detail was forced to two levels, High Detail and Very High Detail. Testing was done with Fraps;

- Unreal Tournament 3, build 1.2: maximum quality settings. Demo run on the VCTF-Suspense level;

- World In Conflict, build 1.007: two settings were used, Medium and High. In the second case, quality filters (anisotropic filtering and anti-aliasing) were disabled. Testing was carried out using the built-in benchmark;

- Crysis, build 1.2: testing in Medium and High modes. Testing was done with Fraps.

* After the name of the game, build is indicated, i.e. the version of the game. We try to maximize the objectivity of the test, so we only use games with the latest patches.

Test results

Similarly, NVIDIA has done the same with the latest G80 chip, the world's first GPU with a unified architecture and support for Microsoft's new API, DirectX 10.

Simultaneously with the flagship GeForce 8800 GTX, a cheaper version called the GeForce 8800 GTS was released. It differs from its older sister in its reduced number of stream processors (96 versus 128) and smaller video memory (640 MB instead of 768 MB for the GTX). The smaller number of memory chips in turn narrowed the memory interface to 320 bits (384 bits for the GTX). More detailed characteristics of the graphics adapter in question can be found in the table:

The ASUS EN8800GTS video card, which we will examine today, has arrived in our test laboratory. This manufacturer is one of NVIDIA's largest and most successful partners and traditionally does not skimp on packaging and bundle. As the saying goes, "there should be plenty of a good video card." The new product comes in a box of impressive dimensions:

On its front side is a character from the game Ghost Recon: Advanced Warfighter. It does not stop at the picture: the game itself, as you might have guessed, is included in the package. The reverse side of the box lists brief characteristics of the product:

ASUS considered this amount of information insufficient, making a kind of book out of the box:

In fairness, this approach has been practiced for quite a long time, and by no means only by ASUS. But, as they say, everything is good in moderation. Maximum information content has turned into a practical inconvenience: a slight breath of wind and the top of the cover swings open. When transporting the hero of today's review, we had to improvise and bend the retaining tab so that it did its job. Unfortunately, bending it can easily damage the packaging. And finally, the box is unreasonably large, which causes some inconvenience.

Video adapter: complete set and close inspection

Well, let's move on to the bundle and the video card itself. The adapter is packed in an antistatic bag and a foam container, which protects the board from both electrical and mechanical damage. The box contains discs, DVI to D-Sub adapters, VIVO and additional power cables, as well as a disc case.

Of the discs included in the kit, the GTI Racing game and the 3DMark06 Advanced Edition benchmark are noteworthy. This is the first time 3DMark06 has been spotted in the bundle of a mass-produced retail video card. Without a doubt, this will appeal to users who are actively involved in benchmarking.

Well, let's go directly to the video card. It is based on a reference design PCB using a reference cooling system, and it differs from other similar products only with a sticker with the manufacturer's logo, which retains the Ghost Recon theme.

The reverse side of the printed circuit board is also unremarkable: it carries a lot of SMD components and voltage regulators, and that's all:

Unlike the GeForce 8800 GTX, the GTS requires only one additional power connector:

In addition, it is shorter than its older sister, which will certainly appeal to owners of small cases. The cooling is no different: the ASUS EN8800GTS, like the GF 8800 GTX, uses a cooler with a large turbine-type fan. The heatsink consists of a copper base and an aluminum casing. Heat is carried from the base to the fins partly through heat pipes, which raises the overall efficiency of the design. Hot air is exhausted outside the system unit, but part of it, alas, stays inside the PC because of openings in the cooler shroud.

However, the problem of strong heating is easily solved. For example, a slow-speed 120mm fan improves the temperature conditions of the board quite well.

In addition to the GPU, the cooler cools the memory chips and power supply elements, as well as the video signal DAC (NVIO chip).

The NVIO chip was moved off the main processor because the GPU's high frequencies caused interference and, as a result, disrupted its operation.

Unfortunately, this circumstance will cause difficulties when changing the cooler, so NVIDIA engineers simply could not afford to make the stock one poorly. Let's take a look at the video card in its "naked" form.

The PCB carries a revision A2 G80 chip and 640 MB of video memory made up of ten Samsung chips. The memory access time is 1.2 ns, slightly slower than the memory on the GeForce 8800 GTX.

Please note that the board has two vacant pads for memory chips. If chips were soldered there, the total memory size would be 768 MB and the bus width 384 bits. Alas, the developer of the video card considered such a step unnecessary. This layout is used only in professional video cards of the Quadro series.
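The relationship between chip count, capacity and bus width can be sketched in a few lines. The per-chip values below are inferred from the figures in this article (640 MB and 320 bits across ten chips), so treat them as an assumption; the function name `memory_config` is hypothetical.

```python
MB_PER_CHIP = 64     # assumption: 640 MB / 10 chips
BITS_PER_CHIP = 32   # assumption: 320 bits / 10 chips


def memory_config(chips: int) -> tuple[int, int]:
    """Return (capacity in MB, bus width in bits) for a given chip count."""
    return chips * MB_PER_CHIP, chips * BITS_PER_CHIP


for label, chips in [("10 chips (as shipped)", 10), ("12 chips (all pads used)", 12)]:
    capacity_mb, bus_bits = memory_config(chips)
    print(f"{label}: {capacity_mb} MB, {bus_bits}-bit bus")
```

Populating the two empty positions gives exactly the 768 MB and 384 bits mentioned above.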

Finally, we note that the card has only one SLI connector, unlike the GF 8800 GTX, which has two.

Testing, analysis of results

The ASUS EN8800GTS video card was tested on a test bench with the following configuration:

- processor - AMD Athlon 64 [email protected] MHz (Venice);

- motherboard - ASUS A8N-SLI Deluxe, NVIDIA nForce 4 SLI chipset;

- RAM - 2x512MB [email protected] MHz, timings 3.0-4-4-9-1T.

The RivaTuner utility has confirmed the compliance of the video card's characteristics with the declared ones:

The video processor frequencies are 510/1190 MHz, the memory frequency is 1600 MHz. The maximum temperature reached after multiple runs of the Canyon Flight test from the 3DMark06 package was 76 °C at a stock cooler fan speed of 1360 rpm:

For comparison, under the same conditions a GeForce 6800 Ultra AGP that we had at hand heats up to 85 °C at maximum fan speed, and after a while it freezes altogether.

The performance of the new video adapter was tested using popular synthetic benchmarks and some gaming applications.

Testing in Futuremark's benchmarks revealed the following:

Of course, on a system with a more powerful central processor, for example one based on Intel's Core 2 Duo architecture, the result would be better. In our case, the obsolete Athlon 64 (even overclocked) does not let today's top video cards reach their full potential.

Let's move on to testing in real gaming applications.

Need for Speed Carbon clearly shows the difference between the rivals: the GeForce 7900 GTX lags well behind the 8800-generation cards.

Since Half-Life 2 needs not only a powerful video card but also a fast processor to play comfortably, a clear performance difference appears only at the highest resolutions with anisotropic filtering and full-screen anti-aliasing enabled.

F.E.A.R. shows roughly the same picture as HL2.

In the heavy modes of Doom 3, the card in question performed very well, but the weak central processor does not allow us to fully assess the gap between the GeForce 8800 GTS and its older sister.

Since Prey is built on the engine used in Quake 4, which in turn is a development of the Doom 3 engine, the cards perform similarly in these games.

The new unified shader architecture on the one hand, and some "trimming" of capabilities relative to its older sister on the other, place the GeForce 8800 GTS between NVIDIA's fastest graphics adapter today and the flagship of the 7000 series. The Californians could hardly have done otherwise: a new product of this class has to be more powerful than its predecessors. It is pleasing that the GeForce 8800 GTS is much closer in speed to the GeForce 8800 GTX than to the 7900 GTX. Support for the newest graphics technologies also inspires optimism and should leave owners of such adapters with a good performance margin for the near (and, hopefully, more distant) future.

Verdict

After examining the card, we were left with an extremely good impression, which the price improved further. When it first appeared on the market and for some time afterward, the ASUS EN8800GTS cost about 16,000 rubles according to price.ru, which was clearly too high. The card has now been selling for about 11,500 rubles for a long time, which is no more than similar products from competitors. And considering the bundle, ASUS's brainchild is undoubtedly in a winning position.

Pros:

- DirectX 10 support;

- advanced chip design (unified architecture);

- excellent performance level;

- rich bundle;

- famous brand;

- the price is on par with products from less reputable competitors.

Cons:

- the large box is not always convenient.


The 8800 GTX was a landmark in the history of 3D graphics. It was the first card to support DirectX 10 and its associated unified shader model, it significantly improved image quality over previous generations, and its performance long remained unrivaled. Unfortunately, all this power came at a matching price. With competition expected from ATI and cheaper mid-range models based on the same technology on the way, the GTX was regarded as a card only for enthusiasts who wanted to be at the forefront of graphics technology.

Model history

To remedy this situation, nVidia released the GTS 640MB a month later, and the GTS 320MB a couple of months after that. Both offered performance close to the GTX at a much more reasonable price. However, at around $300-350 they were still too expensive for gamers on a budget: they were high-end cards, not mid-range models. In retrospect, the GTS cards were worth every penny, as the rest of 2007 brought disappointment after disappointment.

The first to arrive were the mid-range 8600 GTS and GT, heavily cut-down versions of the 8800 series. They were smaller and quieter and had new HD video processing capabilities, but their performance was below expectations. Buying one made little sense, even though they were relatively inexpensive. The alternative, the ATI Radeon HD 2900 XT, matched the GTS 640MB in speed, but it consumed a huge amount of power under load and was too expensive to count as mid-range. Finally, ATI brought out its mid-range DX10 series in the form of the HD 2600 XT and Pro, which were even better at multimedia than the nVidia 8600 but lacked the power to interest gamers who had already bought previous-generation cards such as the X1950 Pro or 7900 GS.

And now, a year after the launch of the 8800 GTX, the 8800 GT has arrived as the first real refresh of the DirectX 10 lineup. It took a long time, but the nVidia GeForce 8800 GT's specs are in line with the GTS, and its price, in the $200-250 range, has finally reached the mid-range level everyone had been waiting for. But what makes the card so special?

Bigger is not better

As technology advances and the number of transistors in CPUs and GPUs grows, there is a natural need to make them smaller. Smaller transistors consume less power, which in turn means less heat. More chips fit on a single silicon wafer, which reduces their cost and in theory lowers the price floor for hardware built from them. However, changing the manufacturing process is a high business risk, so it is customary to launch a completely new architecture on an existing, proven process, as was the case with the 8800 GTX and HD 2900 XT. Once the architecture has matured, it is moved to a smaller, less power-hungry process, which later serves as the basis for the next new design.

The 8800 series followed this path: the G80 cores of the GTX and GTS are produced on a 90nm process, while the nVidia GeForce 8800 GT is based on the G92 chip, made on a 65nm process. While the change seems small, it equates to a 34% reduction in die size and a correspondingly larger number of chips per silicon wafer. Electronic components thus become smaller, cheaper and more economical, which is an extremely positive change. However, the G92 core has not only shrunk; there is more to it.
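The relationship between die area and chips per wafer can be sketched with simple arithmetic. This is an idealized model, not nVidia's own calculation: it assumes area scales with the square of the process node and ignores wafer edge losses and yield, and both function names are made up for illustration.

```python
def ideal_area_scale(old_nm, new_nm):
    """Area ratio if every feature shrank linearly with the node (an idealization)."""
    return (new_nm / old_nm) ** 2


def extra_dies_per_wafer(area_reduction):
    """Fractional gain in dies per wafer for a fractional die-area reduction.

    Ignores edge losses, scribe lines and yield.
    """
    return 1.0 / (1.0 - area_reduction) - 1.0


print(f"ideal 90nm -> 65nm area ratio: {ideal_area_scale(90, 65):.2f}")
print(f"extra dies for a 34% smaller die: {extra_dies_per_wafer(0.34):.0%}")
```

Under this model a pure shrink would roughly halve the die area. The G92 shrinks less than that because it also carries more transistors than the G80, and a die that is 34% smaller would fit roughly half again as many chips on a wafer.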

First of all, the VP2 video engine that was used in the 8600 series has now appeared in the GeForce 8800 GT 512MB, so high-definition video can be enjoyed without slowing the system down. The display output engine, which is handled by a separate chip on the 8800 GTX, is also integrated into the G92. As a result, the die has 73 million more transistors than the 8800 GTX (754 million versus 681 million), even though it has fewer stream processors, less texture processing power and fewer ROPs than the more powerful model.

The new version of nVidia's transparency anti-aliasing algorithm, added to the GeForce 8800 GT's arsenal, is designed to significantly improve image quality while maintaining high performance. Beyond that, the new processor adds no new graphics capabilities.

The manufacturer apparently thought long and hard about which features of the previous 8800 series cards were underused and could be cut, and which should stay. The result is a GPU design that sits somewhere between the GTX and GTS in performance, but with GTS functionality. As a result, the 8800 GTS has become completely redundant. The 8800 Ultra and GTX still deliver more graphics power, but with fewer features, at a much higher price and with higher power consumption. Against this background, the GeForce 8800 GT 512MB takes a really strong position.

GPU architecture

The GeForce 8800 GT uses the same unified architecture that nVidia unveiled when it first announced the G80. The G92 consists of 754 million transistors and is manufactured on TSMC's 65nm process. The die area is about 330 mm², and although that is noticeably smaller than the G80, it is still far from a small piece of silicon. There are 112 scalar stream processors in total, running at 1500 MHz as standard. They are grouped into 7 clusters, each with 16 stream processors that share 8 texture address units, 8 texture filter units and their own independent cache. This is the same shader cluster configuration nVidia used in the G84 and G86 chips, but the G92 is a much more complex GPU than either of them.

Each stream processor can issue two instructions per clock cycle, a MADD and a MUL, and the units, combined into a single structure, can process all shader operations and calculations in both integer and floating-point form. It is curious, however, that although the stream processors have the same capabilities as the G80's (apart from their number and clock), nVidia claims that the chip delivers up to 336 GFLOPS, whereas counting both MADD and MUL gives 504 GFLOPS. As it turned out, the manufacturer took a conservative approach and did not count the MUL when calculating overall performance. At briefings and roundtables, nVidia said that some architectural improvements should allow the chip to get closer to its theoretical maximum throughput. In particular, the scheduler, which distributes and balances the work coming through the pipeline, has been improved. nVidia has announced that future GPUs will support double precision, but this chip only emulates it, which is associated with the need to follow IEEE standards.
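The two GFLOPS figures follow directly from the stream processor count and shader clock. The sketch below assumes the usual convention that a MADD counts as two floating-point operations and a MUL as one; the function name is illustrative.

```python
MADD_FLOPS = 2  # assumption: a multiply-add is counted as two operations
MUL_FLOPS = 1   # a standalone multiply is counted as one


def peak_gflops(stream_processors, shader_mhz, flops_per_clock):
    """Theoretical peak throughput in GFLOPS."""
    return stream_processors * shader_mhz * flops_per_clock / 1000


madd_only = peak_gflops(112, 1500, MADD_FLOPS)
madd_plus_mul = peak_gflops(112, 1500, MADD_FLOPS + MUL_FLOPS)
print(madd_only, madd_plus_mul)
```

Counting only the MADD gives the 336 GFLOPS that nVidia quotes; adding the MUL gives the 504 GFLOPS theoretical maximum.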

ROP architecture

The ROP structure of the G92 is similar to that of every other GPU in the GeForce 8 series: each section has its own L2 cache and is tied to a 64-bit memory channel. There are 4 ROP sections in total, giving a 256-bit memory interface. Each section can process 4 pixels per clock when each pixel carries four components (RGB color and Z). If only the Z component is present, each section can process 32 pixels per clock.

The ROPs support all the common anti-aliasing modes used in earlier GeForce 8 series GPUs. Since the chip has only a 256-bit GDDR interface, nVidia improved ROP compression efficiency to reduce bandwidth and graphics memory usage with anti-aliasing enabled at 1600x1200 and 1920x1200.

As in the original G80 architecture from which it derives, the texture filter and address units, as well as the ROP sections, run at a clock different from that of the stream processors. nVidia calls this the core clock. On the GeForce 8800 GT it is 600 MHz. In theory, this gives a pixel fill rate of 9.6 gigapixels per second (Gp/s) and a bilinear texture fill rate of 33.6 gigatexels per second. According to users, the clock is set very conservatively, and a higher transistor count does not guarantee added, or even retained, functionality. When the company moved from 110nm to 90nm, optimization let it cut the transistor count by 10%. It would therefore not be surprising if the chip had at least 16 more stream processors that are disabled in this product.
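The theoretical fill rates can be reproduced from the unit counts given earlier: 4 ROP sections at 4 pixels per clock, and 7 clusters with 8 texture filter units each, all at the 600 MHz core clock. The sketch below uses illustrative function names; with these inputs the pixel rate works out to 9.6 Gp/s, that is, 9600 megapixels per second.

```python
CORE_MHZ = 600  # core clock of the reference GeForce 8800 GT


def pixel_fill_rate(rop_sections, pixels_per_section, core_mhz=CORE_MHZ):
    """Theoretical pixel fill rate in gigapixels per second."""
    return rop_sections * pixels_per_section * core_mhz / 1000


def texel_fill_rate(clusters, filter_units_per_cluster, core_mhz=CORE_MHZ):
    """Theoretical bilinear texture fill rate in gigatexels per second."""
    return clusters * filter_units_per_cluster * core_mhz / 1000


print(pixel_fill_rate(4, 4))   # color + Z pixels
print(pixel_fill_rate(4, 32))  # Z-only pixels
print(texel_fill_rate(7, 8))   # bilinear texels
```

The Z-only case is included only to show how the 32 pixels per clock per section scale; the article itself does not quote a figure for it.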

Card design

The reference design runs the core, shader units and memory at 600 MHz, 1500 MHz and 1800 MHz respectively. The 8800 GT has a single-slot cooler, and a glossy black metal shroud hides almost all of its front side. The 50 mm fan follows the radial blower design of the top models and does its job very quietly in all operating modes. Whether the computer is idle, running only the Windows desktop, or running your favorite game, it is practically inaudible against the other noise sources in the PC case. It is worth noting, though, that the first time you power on the computer with the new card you may get a fright: the fan howls at full speed at start-up, but the noise dies down even before the desktop appears.

The metal shroud attracts fingerprints, but this should be of little concern, since they cannot be seen once the card is installed. According to users, the cover helps prevent accidental damage to components such as the capacitors on the face of the card. The green printed circuit board combined with the black shroud gives the 8800 GT a recognizable look. The model is marked with the GeForce logo along the top edge of the shroud. Mark Rein, vice president of the company, told reporters that this came at an additional cost, but was necessary to help users figure out which graphics card is the heart of the system at LAN parties.

Hidden under the heatsink are eight 512 Mbit graphics memory chips for a total of 512 MB of memory. This is GDDR3 DRAM rated for an effective frequency of up to 2000 MHz. The GPU supports both GDDR3 and GDDR4, but GDDR4 was never used in this series.

Heating and power consumption

The nVidia GeForce 8800 GT is a very sexy graphics card. Its looks are very pleasing to the eye and, together with the G92's internal changes, give it a sense of solid design.

More important than the aesthetics, according to users, is the fact that the manufacturer has managed to fit all this power into a single-slot card. This is not just a welcome change, it is a pleasant surprise. Given the GeForce 8800 GT's specifications, one would expect a dual-slot cooler. nVidia could go for such a slim design because the new manufacturing process reduced heat output to a level that a low-profile cooler can handle. In fact, heat output has dropped so much that even a relatively small cooler does not have to spin very fast, which leaves the card virtually silent even in demanding games. However, the board itself gets quite hot, so good airflow through the case is needed to prevent overheating. Thanks to the smaller manufacturing process, the GeForce 8800 GT 512MB consumes only 105 W even at full load, so only one six-pin power connector is required. This is another nice change.

The card was the first to support PCIe 2.0, which allows up to 150 watts to be drawn through the slot. However, the company found that for backward compatibility it was much simpler to limit slot power to 75 watts. This means that whether the card sits in a PCIe 1.1 or a PCIe 2.0 motherboard, only 75 W comes through the slot, and the rest is supplied through the auxiliary power connector.
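The power budget can be checked with a few lines of arithmetic. The sketch below assumes that one six-pin auxiliary connector is rated for 75 W (the standard figure, not stated in the article); the constant and function names are illustrative.

```python
import math

SLOT_LIMIT_W = 75     # slot power the card is limited to, per the text
SIX_PIN_LIMIT_W = 75  # assumption: rating of one 6-pin PCIe auxiliary connector


def aux_connectors_needed(board_power_w):
    """Number of 6-pin connectors needed once the slot budget is used up."""
    remaining = max(0, board_power_w - SLOT_LIMIT_W)
    return math.ceil(remaining / SIX_PIN_LIMIT_W)


print(aux_connectors_needed(105))  # GeForce 8800 GT at full load
print(aux_connectors_needed(177))  # GeForce 8800 GTX maximum, quoted earlier
```

At 105 W the slot plus a single connector is sufficient, while the 177 W maximum quoted earlier for the 8800 GTX calls for two, which matches the connector counts of both cards.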

VP2 processor

Speaking of HDCP support, it is worth touching on the next-generation video processor that nVidia has built into the G92. VP2 is a single programmable SIMD processor, flexible enough to be extended in the future. It handles very intensive processing of H.264-encoded video, shifting the load from the CPU to the GPU. Alongside VP2 there is also an H.264 bitstream processor and an AES128 decryption engine. The first is designed specifically to accelerate CAVLC and CABAC decoding, tasks that are very CPU-intensive when done purely in software. AES128 speeds up the encryption protocol required by video content protection schemes such as AACS and Media Foundation. Both schemes require video (compressed or uncompressed) to be encrypted when sent over buses such as PCI Express.

Improving image quality

nVidia is working hard to improve the transparency anti-aliasing technique first introduced in the GeForce 7 series. Its multisampling mode has little effect on performance but is ineffective in most cases. Its supersampling mode, on the other hand, gives much better and more consistent image quality, but at the cost of speed: it is an extremely resource-intensive anti-aliasing technique.

The drivers that come with the card contain a new multisampling algorithm. The differences are quite noticeable, but the final choice is left to the user. The good news is that, since this is a driver-level change, any hardware that supports transparency anti-aliasing can use the new algorithm, that is, cards from the GeForce 7800 GTX onward. To activate the new mode, you just need to download the latest drivers from the manufacturer's website.

According to user reviews, updating the driver for the GeForce 8800 GT is not difficult. Although the graphics card's web page links only to files for Windows Vista and XP, a search from the main page will find what you need. For Windows 7-10, the nVidia GeForce 8800 GT driver is installed with the 292MB GeForce 342.01 Driver package.

Connectivity

The output connectors of the nVidia GeForce 8800 GT are quite standard: two dual-link DVI-I ports with HDCP support, suitable for both the analog and digital interfaces of monitors and TVs, and a 7-pin analog video port that provides conventional composite and component output. The DVI connectors can be used with DVI-VGA and DVI-HDMI adapters, so any connection is possible. However, nVidia still leaves audio support over HDMI as an option for third-party manufacturers: there is no audio processor inside VP2, so sound is routed through the S/PDIF connector on the board. This is disappointing, as the thin and quiet card is ideal for gaming home theater PCs.

The GeForce 8800 GT is the first graphics card to be PCI Express 2.0 compliant, which means it can exchange data with the system at up to 16 GB/s, twice as fast as the previous standard. While this can be useful for workstations and computationally intensive tasks, it will not matter to the average gamer. In any case, the standard is fully compatible with all previous PCIe versions, so there is nothing to worry about.
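The 16 GB/s figure can be reconstructed from the link parameters. The sketch below assumes a x16 link, a signaling rate of 5 GT/s per lane for PCIe 2.0 (2.5 GT/s for PCIe 1.x) and 8b/10b encoding; none of these values are given in the article, and the function name is illustrative.

```python
def pcie_bandwidth_gbs(lanes, gt_per_s, encoding_efficiency=0.8):
    """Usable bandwidth per direction in GB/s.

    encoding_efficiency=0.8 models 8b/10b encoding (8 data bits per 10 line bits).
    """
    # GT/s * efficiency = Gbit/s of payload per lane; divide by 8 for GB/s
    return lanes * gt_per_s * encoding_efficiency / 8


per_direction_v2 = pcie_bandwidth_gbs(16, 5.0)  # PCIe 2.0 x16
per_direction_v1 = pcie_bandwidth_gbs(16, 2.5)  # PCIe 1.x x16
print(per_direction_v2, 2 * per_direction_v2)
print(per_direction_v1, 2 * per_direction_v1)
```

Under these assumptions the 16 GB/s quoted above corresponds to both directions combined; each direction carries half of that, and PCIe 1.x delivers half again.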

nVidia's partner companies offer overclocked versions of the GeForce 8800 GT, as well as game bundles.

BioShock by 2K Games

BioShock was one of the best games available when the graphics card was released. It is a genetically modified first-person shooter set in the underwater city of Rapture, built at the bottom of the Atlantic by a man named Andrew Ryan as part of his 1930s art deco dream. 2K Boston and 2K Australia licensed Epic Games' Unreal Engine 3 and used it to great effect, along with some DirectX 10 features. All of this is controlled through an option in the game's graphics settings panel.

BioShock's setting required the developers to use a lot of water shaders. DirectX 10 helped improve the ripples when characters move through water, and pixel shaders are used heavily to create wet-looking objects and surfaces. In addition, the DX10 version of the game uses the depth buffer to create "soft" particle effects that interact with their surroundings and look more realistic.

The nVidia GeForce 8800 GT has the specifications to show its strengths in BioShock: at 1680x1050 it is only slightly behind the GTX. As the resolution increases, the gap between the cards widens, but not by much. The reason is probably that the game does not support transparency anti-aliasing, so the 8800 GTX's large memory bandwidth advantage becomes moot.

According to user reviews, the 8800 GT also performs reasonably well in SLI. Although it does not come close to the GTX, it competes with the 512MB Radeon HD 2900 XT in CrossFire. Perhaps even more interesting, at 1920x1200 the 8800 GT is almost as fast as the 640MB GTS!

Crysis Single Player Demo by Electronic Arts

This game will literally make your graphics card cry! Its graphics were the big surprise: they surpassed everything seen in computer games before. Testing with the built-in GPU benchmark gives higher results than actual gameplay. Around 25 fps in the benchmark is enough for a playable frame rate. Unlike in other games, a low frame rate still looks fairly smooth here.

The nVidia GeForce 8800 GT has the specifications to reach adequate frame rates in Crysis at 1680x1050 with high detail under DirectX 10. It is not as fast as the GTX, but noticeably faster than the Radeon HD 2900 XT and 8800 GTS 640MB. The GTS 320MB struggles with Crysis and needs most settings lowered to medium to get above 25 fps even at 1280x1024.

Performance

As expected, the 8800 GTX remains unsurpassed, but overall the GeForce 8800 GT outperforms the GTS in most tests. At the highest resolutions and anti-aliasing settings, the GT's lower memory bandwidth lets it down and the GTS takes the lead from time to time. However, considering the price difference and other advantages, the 8800 GT is the better choice anyway. Conversely, comparing the GeForce 8800 GTX with the 8800 GT confirms every time why the former is so expensive. While the other models slow down significantly as the pixel count grows and transparency anti-aliasing and anisotropic filtering are enabled, the 8800 GTX continues to deliver excellent results. In particular, Team Fortress 2 at 1920x1200 with 8xAA and 16xAF runs twice as fast on the 8800 GTX as on the GT. For the most part, however, the GeForce 8800 GT performs well, if you ignore the incredibly low frame rates in Crysis, of course.

Conclusion

Although the GeForce 8800 GT does not match the specifications of the series leader, the 8800 GTX, it delivers similar performance for a fraction of the price and also includes many additional features. Add the small size and quiet operation, and the model looks simply phenomenal.